Probability and Computing, Oxford 2018-19

Lectures

Table of Contents

- Lecture 1: A randomised algorithm for string equality

- Lecture 2: Min-cut contraction algorithm

- Lecture 3: The secretary problem and the prophet inequality

- Lecture 4: Linearity of expectations

- Lecture 5: Derandomisation

- Lecture 6: Further applications of linearity of expectations

- Lecture 7: Markov’s and Chebyshev’s inequalities

- Lecture 8: Chernoff bounds

- Lecture 9: Applications of Chernoff bounds

- Lecture 10: Martingales and stopping times

- Lecture 11: The Azuma-Hoeffding inequality

- Lecture 12: Applications of the Azuma-Hoeffding inequality

- Lecture 13: Markov chains: 2SAT and 3SAT

- Lecture 14: Markov chains and random walks

- Lecture 15: The Monte Carlo method

- Lectures 16-17: Mixing time

- Lecture 18: Lovász Local Lemma

- Lecture 19: Interactive proofs

- Lecture 20: Online algorithms - Paging

Lecture 1: A randomised algorithm for string equality

Why randomised algorithms?

Randomised algorithms make random choices. How can this help? The simple reason is that for a well-designed randomised algorithm, it is highly improbable that the algorithm will make all the wrong choices during its execution.

The advantages of randomised algorithms are:

- simplicity

- randomised algorithms are usually simpler than their deterministic counterparts

- efficiency

- they are faster, use less memory, or less communication than the best known deterministic algorithm

- dealing with incomplete information

- randomised algorithms are better in adversarial situations

Although there is a general consensus that randomised algorithms have many advantages over their deterministic counterparts, it is mainly based on evidence rather than rigorous analysis. In fact, there are very few provable cases in which randomised algorithms perform better than deterministic ones. These include the following:

- we will see an example (string equality) in which randomised algorithms have provably better communication complexity than deterministic algorithms

- for the similar problem of equality of multivariate polynomials, we know good randomised algorithms but no polynomial-time deterministic algorithm

- randomised algorithms provide provably better guarantees in online algorithms and learning

We have no rigorous proof that randomised algorithms are actually better than deterministic ones. This may be either because they are not really better or because we don’t have the mathematical sophistication to prove it. This is one of the outstanding open problems in computational complexity.

String equality

- String equality problem

Alice has a binary string \(a\) and Bob has a binary string \(b\), both of length \(n\). They want to check whether their strings are the same with minimal communication between them.

The straightforward method is for Alice to transmit her string to Bob who performs the check and informs Alice. This requires a communication of \(\Theta(n)\) bits. There is a better way to do it, which is based on the following reduction.

- Alice and Bob create two polynomials out of their strings: \(f(x)=\sum_{i=0}^{n-1} a_i x^i\) and \(g(x)=\sum_{i=0}^{n-1} b_i x^i\).

- In doing so, they reduce the string equality problem to the problem of checking equivalence of univariate polynomials of degree less than \(n\), because two polynomials are the same if and only if they have the same coefficients.

- Checking equivalence of polynomials

Given two (univariate) polynomials \(f(x)\) and \(g(x)\) of degree at most \(n\), check whether they are the same.

- It helps to think that the degree of these polynomials is large (say in the billions)

- Think also of the more general problems in which the two polynomials:

- may not be in expanded form: e.g. \(f(x)=(x-3)(x^3-2x+1)^3-7\)

- may have multiple variables: e.g. \(f(x_1,x_2)=x_1^7(x_1^3+x_2^4)^2+2x_2\)

- Idea for algorithm

Select a large random integer \(r\) and check whether \(f(r)=g(r)\).

- Immediate question

How large should \(r\) be to achieve probability at most \(\epsilon\) of answering incorrectly?

- clearly there is a tradeoff between the range of \(r\) and the probability of error

- note the asymmetry (one-sided error): if the polynomials are the same, the algorithm is always correct

- if the polynomials are not the same, the algorithm returns the wrong answer exactly when \(r\) is a root of the polynomial \(f(x)-g(x)\)

- a nonzero univariate polynomial of degree at most \(n\) has at most \(n\) roots\(^{1}\). If we select \(r\) uniformly at random from \(\{1,\ldots,100n\}\), the probability of error is at most \(1/100\)

- Running time?

Note that the intermediate results of the computation may become very large. Since the degree is \(n\), \(f(r)\) may need \(\Omega(n\log n)\) bits. This is because the value of the polynomial can be as high as \(\Omega(n^n)\).

- would it not be better to transmit the original string instead of \(f(r)\)?

- the answer is no, because there is a clever way to reduce the size of numbers: do all computations in a finite field \(F_p\), for some prime \(p\geq 100n\)

- Algorithm

Here is an algorithm that implements this idea:

Select a prime \(p\) larger than \(100n\) and a random integer \(r\in \{0,\ldots,p-1\}\). Check whether

\begin{align*} f(r)=g(r) \pmod p. \end{align*}

Using this algorithm, Alice and Bob can solve the string equality problem.

- Alice sends to Bob \((r, f(r) \pmod p)\). Bob checks whether \(f(r)=g(r) \pmod p\) and announces the result

- only \(O(\log n)\) bits are sent (optimal among all algorithms)

- the running time of this algorithm is polynomial in \(n\) (in fact linear, up to logarithmic factors)

- the argument for correctness is similar to the one above; we only replaced the field of reals with \(F_p\)

- first notice that if the polynomials are equal, the algorithm is always correct

- otherwise, \(f(x)-g(x)\) is a nonzero polynomial of degree at most \(n\)

- but a nonzero polynomial of degree at most \(n < p\) has at most \(n\) roots in \(F_p\). Therefore the probability of an incorrect result is at most \(n/p\leq 1/100\)

- why do we need \(p\) to be prime? Because if \(p\) is not prime, a polynomial of degree \(n\) may have more than \(n\) roots. For example, the second-degree polynomial \(x^2-1\) has four roots modulo \(8\), namely \(1, 3, 5, 7\)
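The protocol above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the prime \(p\) is taken as given, strings are lists of bits with `bits[i]` the coefficient of \(x^i\), and the function names `fingerprint`, `alice_message` and `bob_check` are made up for this sketch.

```python
import random

def fingerprint(bits, r, p):
    """Evaluate f(r) = sum(bits[i] * r**i) modulo p using Horner's rule."""
    value = 0
    for coefficient in reversed(bits):
        # All intermediate values stay below p, so numbers never grow large.
        value = (value * r + coefficient) % p
    return value

def alice_message(a, p, rng=random):
    """Alice picks r uniformly from {0, ..., p-1} and sends (r, f(r) mod p)."""
    r = rng.randrange(p)
    return r, fingerprint(a, r, p)

def bob_check(b, message, p):
    """Bob accepts iff his own polynomial agrees with Alice's at the point r."""
    r, value = message
    return fingerprint(b, r, p) == value
```

Horner's rule uses one multiplication and one addition modulo \(p\) per bit, which is where the near-linear running time comes from. The message consists of two numbers below \(p\), i.e. \(O(\log n)\) bits when \(p=O(n)\).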

- Loose ends

- Can we find a prime number efficiently? Yes, by repeatedly selecting a random integer between, say, \(100n\) and \(200n\) until we find a prime number

- we can check whether a number \(q\) is prime in time polynomial in \(\log q\)

- there are many prime numbers: roughly speaking, a random integer \(q\) is prime with probability approximately \(1/\ln q\) (Prime Number Theorem)

- Is probability \(1/100\) sufficient?

- we can increase the parameter \(100n\) to get a better probability; if we replace it with \(1000n\) the probability will drop to \(1/1000\)

- there is a much better way: run the test \(k\) times with independent random values of \(r\), and declare the strings equal only if every test passes. This makes the probability of error at most \(1/100^k\) (why?)

- in retrospect, and with this amplification of probability in mind, it is better to run the original test with a prime number \(p\) between \(2n\) and \(4n\) to achieve one-sided probability of error at most \(1/2\), and repeat as many times as necessary to achieve the desired bound on error

- We have seen that Alice and Bob can verify the equality of two \(n\)-bit strings by communicating \(O(\log n)\) bits. This is the best possible for randomised algorithms (why?). On the other hand, every deterministic algorithm requires \(n\) bits (why?).
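The loose ends above (finding a random prime and amplifying the success probability) can be combined into one sketch. It assumes \(n\geq 1\), uses a prime between \(2n\) and \(4n\) as suggested above, and substitutes simple trial division for a genuine polynomial-time primality test; all function names are illustrative.

```python
import random

def is_prime(q):
    """Trial division: fine for q = O(n), but NOT polynomial in log q."""
    if q < 2:
        return False
    d = 2
    while d * d <= q:
        if q % d == 0:
            return False
        d += 1
    return True

def random_prime(low, high, rng):
    """Sample integers uniformly from [low, high] until one is prime."""
    while True:
        q = rng.randint(low, high)
        if is_prime(q):
            return q

def evaluate(bits, r, p):
    """Horner evaluation of sum(bits[i] * r**i) modulo p."""
    value = 0
    for c in reversed(bits):
        value = (value * r + c) % p
    return value

def probably_equal(a, b, k, rng=None):
    """One-sided test: False is always correct, True errs w.p. at most 2**-k."""
    rng = rng or random.Random()
    n = len(a)
    p = random_prime(2 * n, 4 * n, rng)
    for _ in range(k):
        r = rng.randrange(p)  # fresh, independent evaluation point
        if evaluate(a, r, p) != evaluate(b, r, p):
            return False      # a witness: the strings certainly differ
    return True
```

Each round errs with probability at most \(n/p\leq 1/2\), and the rounds are independent, so \(k\) rounds err with probability at most \(2^{-k}\).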


Recap of useful ideas

- fingerprinting

- verify properties by appropriate random sampling

- useful trick: perform calculations modulo a prime number to keep the numbers small

- probability amplification

- run many independent tests to decrease the probability of failure

- for one-sided error, we usually design algorithms with probability of error \(1/2\). Using probability amplification, we can easily decrease it to any desired probability bound

Further topics

- we can extend string equality to string matching (i.e. given two strings \(a\) and \(b\), check whether \(b\) is a substring of \(a\)), a very useful application. The obvious way is to check whether \(b\) appears at the first, second, and each subsequent position of \(a\). The clever trick is to combine the evaluations of the associated polynomials efficiently (check out the Karp-Rabin algorithm).

- it is not hard to extend the algorithm for checking polynomial identities to polynomials of multiple variables. The only difficulty is to argue about the number of roots of multi-variate polynomials (check out the Schwartz-Zippel lemma).

- applications that use efficient checking of polynomials come from unexpected domains. The perfect matching problem is such an example. The problem asks whether a given graph has a perfect matching, i.e., a set of disjoint edges that cover all vertices. The question is equivalent to whether the determinant of the Tutte matrix of the graph is not the zero polynomial (check out Tutte matrix).

Sources

- Mitzenmacher and Upfal, 2nd edition, Section 1.1

- Lap Chi Lau, L01.pdf (page 6)

- Nick Harvey, Lecture1Notes.pdf (page 3)

- James Lee, lecture1.pdf (page 1)

Lecture 2: Min-cut contraction algorithm

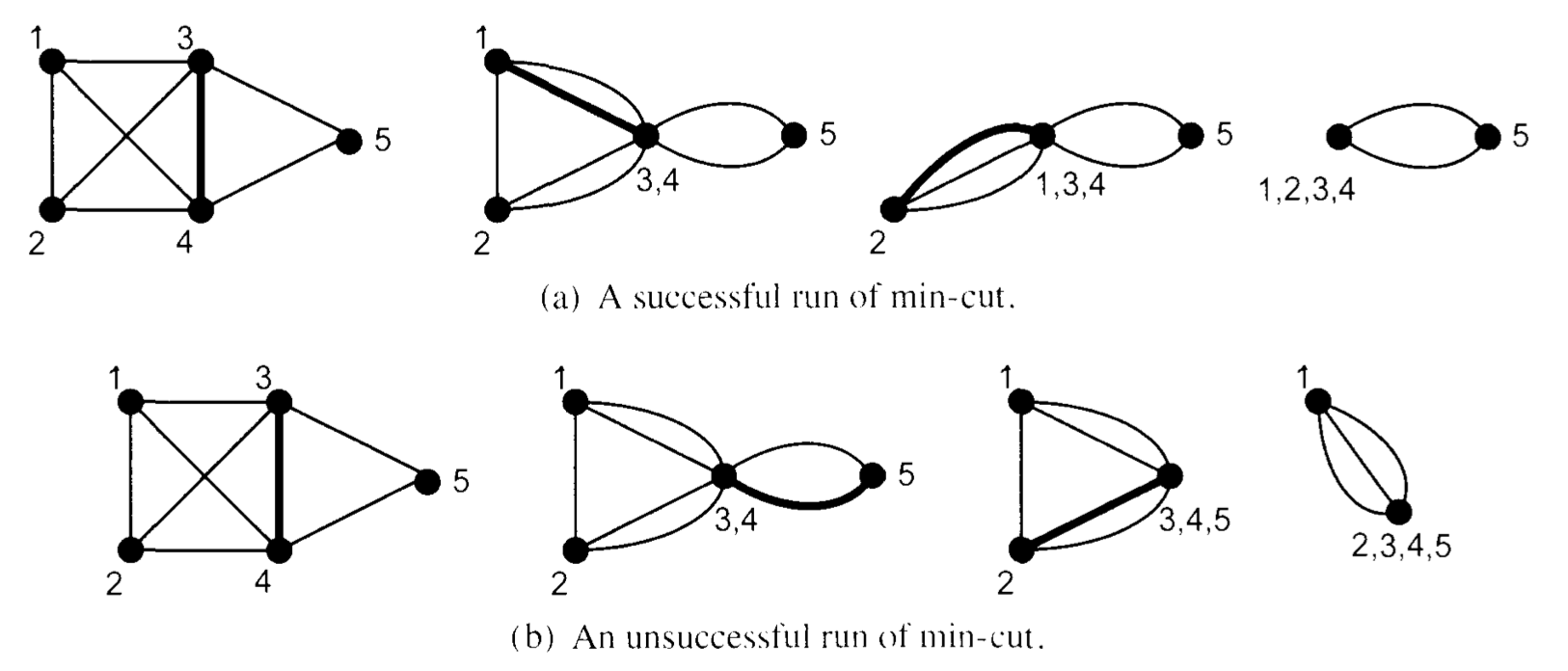

We will see now a strikingly simple randomised algorithm for a non-trivial graph problem.

Min-cut contraction algorithm

- The min-cut problem

Given a graph \(G(V,E)\), find a partition of the nodes into two non-empty parts in a way that minimises the number of edges from one part to the other.

- A cut of a graph \(G(V,E)\) is a non-empty subset of nodes \(S\subsetneq V\). The size of the cut is the number of edges from \(S\) to \(V\setminus S\).

- A mincut is a cut of minimum size

- Other algorithms

We can reduce this problem to the \(s-t\) maxflow-mincut problem to get a polynomial-time deterministic algorithm. Here we discuss a faster algorithm.

- Karger’s idea

Select a random edge of the graph and contract it. Most likely the new graph has the same mincut. Keep contracting random edges until the graph becomes small enough to compute the mincut directly.

- note that the result of a contraction is in general a multigraph with self loops. We remove the loops but we leave all copies of the edges.

- Analysis

Fix a mincut \(S\). What is the probability that it will survive the first edge contraction?

- let \(k\) be the size of mincut \(S\)

- therefore the number \(m\) of edges of the multigraph is at least \(k n/2\) (Why?)\(^{2}\)

- the probability that an edge of \(S\) is selected is \(k/m\), which is at most \(2/n\)

- the probability that \(S\) survives the first contraction is at least \(1-2/n=(n-2)/n\)

What is the probability that the mincut \(S\) will survive all contractions until only two nodes remain?

Applying the same bound to each successive contraction, this probability is at least

\begin{align*} \frac{n-2}{n} \cdot \frac{n-3}{n-1} \cdot \frac{n-4}{n-2}\cdots \frac{2}{4} \cdot \frac{1}{3}=\frac{2}{n(n-1)}. \end{align*}

- thus the probability that the contraction algorithm will be incorrect is at most \(1-\frac{2}{n(n-1)}\leq 1-\frac{2}{n^2}\)

This may seem very close to 1, but if we run the contraction algorithm \(n^2\) times and keep the best result, the probability that it will not return a mincut (in fact, the particular mincut \(S\)) drops to at most\(^{3}\)

\begin{align*}\left(1-\frac{2}{n^2}\right)^{n^2} \leq \left(e^{-\frac{2}{n^2}}\right)^{n^2} = e^{-2} < \frac{1}{2} \end{align*}

Therefore we have:

Theorem: If we repeat the contraction algorithm \(n^2\) times, the probability that it will find a minimum cut is at least \(1/2\).
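The contraction algorithm and its \(n^2\) repetitions can be sketched as follows. This is a deliberately simple version that spends \(O(m)\) time per contraction, not the \(O(n^2)\)-per-run implementation mentioned below. It assumes a connected input multigraph without self-loops, given as a list of node pairs; the names `contract` and `karger_min_cut` are illustrative.

```python
import random

def contract(edges, num_nodes, target, rng):
    """Contract uniformly random edges until `target` super-nodes remain.

    `edges` is a list of (u, v) pairs of a connected, loop-free multigraph.
    Returns the edges of the contracted multigraph.
    """
    edges = list(edges)
    remaining = num_nodes
    while remaining > target:
        u, v = rng.choice(edges)  # uniform over all surviving edge copies
        merged = []
        for x, y in edges:
            # Merge v into u; keep parallel edges, drop self-loops.
            x = u if x == v else x
            y = u if y == v else y
            if x != y:
                merged.append((x, y))
        edges = merged
        remaining -= 1
    return edges

def karger_min_cut(edges, num_nodes, runs=None, rng=None):
    """Size of the best cut found over `runs` independent runs (default n^2)."""
    rng = rng or random.Random()
    runs = num_nodes ** 2 if runs is None else runs
    return min(len(contract(edges, num_nodes, 2, rng)) for _ in range(runs))
```

When two super-nodes remain, the surviving edges are exactly the edges of the cut they define, so the number of edges returned by `contract` is the size of that cut.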

- Running time

The obvious running time is \(O(n^4)\) because we can implement every execution in \(O(n^2)\) time and we do \(n^2\) executions. This is not faster than the obvious maxflow-mincut computation, but with a little bit of effort we can significantly improve the running time of the contraction algorithm.

- this can be improved substantially if we notice that the probability that the cut \(S\) survives decreases faster as the graph becomes smaller

- to take advantage of this, we stop the contractions earlier – say when the graph has size \(r=r(n)\) – and switch to another mincut algorithm

- the probability of success becomes at least \(\frac{r(r-1)}{n(n-1)}\) and we need to repeat it only \(O(n^2/r^2)\) times to achieve probability of success at least \(1/2\)

- on the other hand, we must take into account the execution time of the algorithm we switch to when the graph reaches size \(r\)

- which algorithm should we switch to? But of course the same algorithm (recursion)

- Algorithm

Here is a variant of the above idea due to Karger and Stein:

Recursive-Contract(G)

- if n ≤ 6 use brute-force

- else repeat twice and return the best result

- G’ = Contract(G, \(n/\sqrt{2}\))

- Recursive-Contract(G’)

Contract(G, r)

- repeat until the graph has \(r\) nodes

- select a random edge and contract it

See the original paper “A new approach to the minimum cut problem” for details.

The running time of this algorithm is \(O(n^2 \log^3 n)\).
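A sketch of where this bound comes from, following the standard analysis of Karger and Stein rather than anything derived in these notes. Here \(T(n)\) and \(P(n)\) are notation introduced only for this sketch, denoting the running time and the success probability of one call on \(n\) nodes, and rounding is ignored. Contracting to \(n/\sqrt{2}\) nodes costs \(O(n^2)\) and preserves a fixed mincut with probability about \(\frac{(n/\sqrt{2})^2}{n^2}=\frac{1}{2}\), so

\begin{align*} T(n) &= 2\,T\!\left(\frac{n}{\sqrt{2}}\right)+O(n^2) = O(n^2\log n), \\ P(n) &\geq 1-\left(1-\frac{1}{2}P\!\left(\frac{n}{\sqrt{2}}\right)\right)^2 \quad\Longrightarrow\quad P(n)=\Omega\!\left(\frac{1}{\log n}\right). \end{align*}

The first line holds because the recursion tree has \(O(\log n)\) levels, each of total cost \(O(n^2)\). Repeating the whole procedure \(O(\log^2 n)\) times drives the failure probability down to \(1/\mathrm{poly}(n)\), which gives the total \(O(n^2\log^3 n)\).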

- Number of mincuts

As an extra bonus of the analysis of the contraction algorithm, we get that every graph has at most \(n(n-1)/2\) mincuts. Why? Show also that this is tight by finding a graph that has exactly \(n(n-1)/2\) mincuts.

Sources

- Mitzenmacher and Upfal, 2nd edition, Section 1.5

What is a randomised algorithm?

A randomised algorithm \(A(x,r)\) is a deterministic algorithm with two inputs: the actual input \(x\) and a string \(r\) of random bits. Informally, we say that \(A(x,r)\) computes a function \(f(x)\) when, for a random \(r\), \(A(x,r)=f(x)\) with significant probability.

The class Randomised-Polynomial-time (RP) consists of all languages \(L\) for which there exists a deterministic algorithm \(A(x,r)\) with running time a polynomial \(p(\cdot)\) in the length of \(x\), such that for \(r\) with \(|r|\leq p(|x|)\) and for every \(x\):

- if \(x\in L\), \(A(x,r)\) accepts for at least half of the \(r\)’s

- if \(x\not\in L\), \(A(x,r)\) rejects for all \(r\)’s.

We view this as a randomised algorithm because when we provide the algorithm with a random \(r\), it will accept with probability at least \(1/2\) when \(x\in L\), and reject with probability \(1\) when \(x\not\in L\).

- Note the asymmetry between positive and negative instances. When an RP algorithm accepts, we are certain that \(x\in L\); when it rejects, \(x\) may still be in \(L\), but for any \(x\in L\) this happens with probability at most \(1/2\).

- The class co-RP has the same definition as RP, but with the roles of “accept” and “reject” reversed. For example, the string equality problem is in co-RP, and the string inequality problem is in RP.

- The bound \(1/2\) on the probability is arbitrary; any constant in \((0,1)\) defines the same class.

- The class NP has a very similar definition. The only difference is that when \(x\in L\), the algorithm accepts not for at least half of the \(r\)’s but for at least one \(r\).

- Similarly for the class P, the only difference is that when \(x\in L\), the algorithm accepts for all \(r\).

This shows

Theorem:

\begin{align*} P \subseteq RP \subseteq NP. \end{align*}

For RP algorithms, if we want a higher probability of success, we run the algorithm \(k\) times independently and accept if any run accepts, so that the algorithm succeeds for \(x\in L\) with probability at least \(1-2^{-k}\).

Besides RP, there are other randomised complexity classes. The class ZPP (Zero-error Probabilistic Polynomial time) is the intersection of RP and its complement co-RP. Note that a language in ZPP admits an RP and a co-RP algorithm. We can repeatedly run both of them until we get an answer for which we are certain. The classes RP and ZPP correspond to the following types of algorithms

- Monte Carlo algorithm (RP)

- it runs in polynomial time and has probability of success at least \(1/2\) when \(x\in L\), and it is always correct when \(x\not\in L\).

- Las Vegas algorithm (ZPP)

- it runs in expected polynomial time and it always gives the correct answer.

Arguably, if we leave aside quantum computation, the class that captures feasible computation is the BPP class:

The class Bounded-error Probabilistic-Polynomial-time (BPP) consists of all languages \(L\) for which there exists a deterministic algorithm \(A(x,r)\) with running time a polynomial \(p(\cdot)\) in the length of \(x\), such that for \(r\) with \(|r|\leq p(|x|)\) and for every \(x\):

- if \(x\in L\), \(A(x,r)\) accepts for at least \(3/4\) of the \(r\)’s

- if \(x\not\in L\), \(A(x,r)\) rejects for at least \(3/4\) of the \(r\)’s.

- Again the constant \(3/4\) is arbitrary; any constant in \((1/2, 1)\) defines the same class.

- By repeating the algorithm many times and taking the majority, we can bring the probability of getting the correct answer very close to 1.
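To see how quickly the majority vote helps, one can compute its exact error probability from the binomial distribution. The sketch below assumes each run is independently correct with probability exactly `p_correct` (the definition only guarantees at least \(3/4\)) and an odd number of runs, so that ties cannot occur; `majority_error` is an illustrative name.

```python
from math import comb

def majority_error(k, p_correct=0.75):
    """Probability that the majority of k independent runs is wrong (k odd)."""
    if k % 2 == 0:
        raise ValueError("use an odd number of runs to avoid ties")
    p_wrong = 1 - p_correct
    # The majority is wrong exactly when at least (k + 1) / 2 runs are wrong.
    return sum(comb(k, j) * p_wrong**j * p_correct**(k - j)
               for j in range((k + 1) // 2, k + 1))
```

The error decreases exponentially in \(k\), which is made precise later with Chernoff bounds.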

We don’t know much about the relation between the various classes. Clearly \(RP\subseteq BPP\), because if we run an RP algorithm twice, the probability of success is at least \(3/4\), for every input. One major open problem is whether \(BPP\subseteq NP\).

Sources

- Luca Trevisan’s notes lecture08.pdf

Lecture 3: The secretary problem and the prophet inequality

The secretary problem

We want to hire a secretary by interviewing \(n\) candidates. The problem is that we have to hire a candidate on the spot without seeing future candidates. Assuming that the candidates arrive in random order and that we can only compare the candidates that we have already seen, what is the strategy to maximise the probability to hire the best candidate?

More precisely, suppose that we see one-by-one the elements of a fixed set of \(n\) distinct numbers. Knowing only that the numbers have been permuted randomly, we have to stop at some point and pick the current number. What is the strategy that maximises the probability to pick the minimum number?

We will study the strategies that are based on a threshold \(t\): we simply interview the first \(t\) candidates but we don’t hire them. For the rest of the candidates, we hire the first candidate who is the best so far (if any).

Let \(\pi\) be a permutation denoting the ranks of the candidates. Let \(W_i^t\) denote the event that the best candidate among the first \(i\) candidates appears in the first \(t\) positions. Since the permutation is random, \(\P(W_i^t)=t/i\) for \(i\geq t\).

The probability that we succeed on step \(i\), for \(i>t\), is \(\P(\pi_i=1 \wedge W_{i-1}^t)\). Since the events \(\pi_i=1 \wedge W_{i-1}^t\) are mutually exclusive, we can write the probability of success as

\begin{align*} \sum_{i=t+1}^n \P(\pi_i=1 \wedge W_{i-1}^t)&= \sum_{i=t+1}^n \frac{1}{n} \frac{t}{i-1} \\ &= \frac{t}{n} \sum_{i=t+1}^n \frac{1}{i-1} \\ &= \frac{t}{n} \left(\sum_{k=1}^{n-1} \frac{1}{k} -\sum_{k=1}^{t-1} \frac{1}{k}\right) \\ &= \frac{t}{n} (H_{n-1} - H_{t-1}) \\ &\approx \frac{t}{n} \ln \frac{n}{t}. \end{align*}

The last expression achieves its maximum value \(1/e\) when \(t=n/e\).

It is not very complicated to show that there is an optimal strategy which is a threshold strategy\(^{4}\). Thus

Theorem: The optimal strategy for the secretary problem, as \(n\rightarrow\infty\) is to sample \(\lceil n/e\rceil\) candidates and then hire the first candidate who is best so far (if there is any). The probability that it hires the best candidate approaches \(1/e\) as \(n\rightarrow\infty\).
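For a concrete \(n\), the exact success probability \(\frac{t}{n}\sum_{i=t+1}^n \frac{1}{i-1}\) of each threshold can be evaluated directly and compared with the asymptotic values \(n/e\) and \(1/e\). The following is a small sketch with exact rational arithmetic; it assumes \(n\geq 2\) and \(1\leq t<n\), and the function names are illustrative.

```python
from fractions import Fraction

def success_probability(n, t):
    """Exact value of (t/n) * sum_{i=t+1}^{n} 1/(i-1), for 1 <= t < n."""
    return Fraction(t, n) * sum(Fraction(1, i - 1) for i in range(t + 1, n + 1))

def best_threshold(n):
    """Threshold t in {1, ..., n-1} maximising the success probability."""
    return max(range(1, n), key=lambda t: success_probability(n, t))
```

For example, `best_threshold(100)` can be compared with \(100/e\approx 36.8\), and `float(success_probability(100, best_threshold(100)))` with \(1/e\approx 0.368\).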

The prophet inequality

Let \(X_1,\ldots,X_n\) be nonnegative independent random variables drawn from distributions \(F_1,\ldots,F_n\). We know the distributions but not the actual values. We see the values \(X_1,\ldots,X_n\) one by one, and at some point we select one without knowing the future values. This is our reward. What strategy maximises the expected reward?

It is easy to see that we can find the optimal strategy by backwards induction. If we have reached the last value, we definitely select \(X_n\). For the previous step, we select \(X_{n-1}\) when \(X_{n-1}\geq \E[X_n]\), and so on. The following theorem says that we can get half of the optimal reward with a threshold value.

Theorem: Let \(X_1,\ldots,X_n\) be independent random variables drawn from distributions \(F_1,\ldots,F_n\). There is a threshold \(t\), that depends on the distributions but not the actual values, such that if we select the first value that exceeds \(t\) (if there is any), the expected reward is at least \(\E[\max_i X_i]/2\).

Proof:

We will use the notation \((a)^{+}=\max(0,a)\).

Let \(q_t\) denote the probability that no value is selected, i.e., \(q_t=\P(\max_i X_i \leq t)\). Therefore with probability \(1-q_t\) the reward is at least \(t\). If only one value \(X_i\) exceeds the threshold \(t\), then the reward is this value \(X_i > t\). If more than one value exceeds \(t\), then the reward is the first of them. To simplify, when this happens we will count the reward as only \(t\). To make the calculation precise, let’s first define \(q_t^i=\P(\max_{j\neq i} X_j \leq t)\) and observe that \(q_t^i\geq q_t\) for all \(i\).

The expected reward \(\E[R_t]\) can be bounded by

\begin{align*} \E[R_t] &\geq (1-q_t) t + \sum_{i=1}^n q_t^i \E[(X_i-t)^+] \\ &\geq (1-q_t) t + \sum_{i=1}^n q_t \E[(X_i-t)^+] \\ &= (1-q_t) t + q_t \sum_{i=1}^n \E[(X_i-t)^+]. \end{align*}

On the other hand we can find an upper bound of the optimum reward as follows:

\begin{align*} \E[\max_i X_i] & =\E[t+\max_i(X_i-t)] \\ & \leq t+\E[\max_i (X_i-t)^+] \\ & \leq t+\E\left[\sum_{i=1}^n (X_i-t)^+\right] \\ & =t+\sum_{i=1}^n \E[(X_i-t)^+]. \end{align*}

By selecting \(t\) such that\(^{5}\) \(q_t=1/2\), the lower bound on the expected reward becomes \(\E[R_t]\geq \frac{1}{2}\left(t+\sum_{i=1}^n \E[(X_i-t)^+]\right)\geq \frac{1}{2}\E[\max_i X_i]\), so the threshold strategy achieves at least half the optimal value.

The above theorem is tight, as the following example shows: Fix some \(\epsilon>0\) and consider \(X_1=\epsilon\) (with probability 1), \(X_2=1\) with probability \(\epsilon\), and \(X_2=0\) with probability \(1-\epsilon\). Then no strategy (threshold or not) can achieve an expected reward of more than \(\epsilon\), while the expected maximum is \(\E[\max_i X_i]=\epsilon\cdot 1+(1-\epsilon)\cdot\epsilon=2\epsilon-\epsilon^2\), and the ratio tends to \(1/2\) as \(\epsilon\) tends to 0.
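The numbers in this example can be checked by brute-force enumeration. The sketch below assumes small discrete distributions, each given as a list of `(value, probability)` pairs, and a reward of 0 when no value exceeds the threshold; the representation and the function names are choices made for this illustration.

```python
from itertools import product

def expected_max(dists):
    """E[max_i X_i] for independent discrete X_i given as (value, prob) lists."""
    total = 0.0
    for outcome in product(*dists):
        prob = 1.0
        for _, p in outcome:
            prob *= p
        total += prob * max(v for v, _ in outcome)
    return total

def threshold_reward(dists, t):
    """Expected reward when we take the first value exceeding t (0 if none)."""
    total = 0.0
    for outcome in product(*dists):
        prob = 1.0
        for _, p in outcome:
            prob *= p
        reward = next((v for v, _ in outcome if v > t), 0.0)
        total += prob * reward
    return total
```

With \(\epsilon=0.1\), i.e. `dists = [[(0.1, 1.0)], [(1.0, 0.1), (0.0, 0.9)]]`, both natural thresholds (one below \(\epsilon\), which takes \(X_1\), and one above it, which waits for \(X_2\)) can be compared against `expected_max(dists)`.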

Sources

- Luca Trevisan’s notes on the secretary problem lecture17.pdf

- Tim Roughgarden’s notes on the prophet inequality l6.pdf

Lecture 4: Linearity of expectations

Linearity of expectations

Theorem: For every two random variables \(X\) and \(Y\):

\begin{align*} \E[X+Y]=\E[X]+\E[Y]. \end{align*}

In general, for a finite set of random variables \(X_1,\ldots, X_n\):

\begin{align*} \E\left[\sum_{i=1}^{n} X_i\right]=\sum_{i=1}^n \E[X_i]. \end{align*}

Note that linearity of expectations does not require independence of the random variables!

Remark: Most uses of probability theory can be seen as computational tools for exact and approximate calculations. For example, one can do all the math in this course using counting instead of probabilistic arguments. However, the probabilistic arguments give an intuitive and algorithmically efficient way to reach the same conclusions. During this course, we will learn a few “computational tricks” to calculate exactly or to bound random variables. On the surface, they seem simple and intuitive, but when used appropriately they can be turned into powerful tools. One such trick that we have already used is “independence”. And here we learn the “linearity of expectations” trick.

Max Cut

- The Max Cut problem

Given a graph \(G(V,E)\), find a cut of maximum size, i.e., find a set of vertices \(C\subset V\) that maximises

\begin{align*} \left|\{[u,v]\,:\, u\in C, v\not\in C\}\right|. \end{align*}

This is a known NP-hard problem. It is also an APX-hard problem, meaning that unless P=NP, there is no polynomial-time approximation algorithm with approximation ratio arbitrarily close to 1.

With respect to approximability, an optimisation problem may

- have a polynomial time algorithm

- have a PTAS (polynomial-time approximation scheme): for every \(\epsilon>0\), there is a polynomial-time algorithm that finds a solution within a factor \(1-\epsilon\) of the optimum (for a maximisation problem). For example, a PTAS can have running time \(O(n^{1/\epsilon})\)

- have a FPTAS (fully polynomial-time approximation scheme): a PTAS whose running time is also polynomial in \(1/\epsilon\), for example \(O(n/\epsilon^2)\)

- be APX-hard, i.e. it has no PTAS unless P=NP. This does not mean that an APX-hard problem cannot be approximated at all, but that it cannot be approximated to any desired degree. For example, we will now see that Max Cut can be approximated within a factor of \(1/2\); on the other hand, Max Cut is APX-hard because it is known that, unless P=NP, there is no polynomial-time algorithm with approximation ratio better than \(16/17\), i.e. one that always finds solutions within a factor of \(1/17\) of the optimal solution.

- Approximate algorithm for Max Cut

There are many simple polynomial-time algorithms with approximation ratio \(1/2\). Here we will discuss a randomised algorithm:

Select a random cut, i.e., every node is put in \(C\) with probability \(1/2\).

This algorithm is so simple that it produces a cut without even looking at the graph!

- Analysis

Theorem: For every graph with \(m\) edges, the above algorithm returns a cut with expected number of edges equal to \(m/2\).

Proof: Let \(C\) be the cut produced by the algorithm. We will show that the expected number of edges crossing \(C\) is \(m/2\), where \(m\) is the number of edges of the graph. Since the optimum cut cannot have more than \(m\) edges, the approximation ratio is at least \(1/2\).

Fix an edge \([u,v]\). Let \(X_{u,v}\) be an indicator random variable that takes value 1 if \([u,v]\) is in cut \(C\) and 0 otherwise.

The probability that the edge is in the cut is

\begin{align*} \P((u\in C \wedge v\not\in C) \vee (u\not\in C \wedge v\in C))=\frac{1}{2}\cdot\frac{1}{2}+\frac{1}{2}\cdot\frac{1}{2}=\frac{1}{2}. \end{align*}

Therefore \(\E[X_{u,v}]=\frac{1}{2}\cdot 1+\frac{1}{2}\cdot 0=\frac{1}{2}\).

Let \(X=\sum_{[u,v]\in E} X_{u,v}\) be the size of cut \(C\). The expected number of edges \(\E[X]\) in cut \(C\) is

\begin{align*} \E\left[\sum_{[u,v]\in E} X_{u,v}\right] &= \sum_{[u,v]\in E} \E[X_{u,v}] \\ &=\sum_{[u,v]\in E} \frac{1}{2} \\ &=\frac{m}{2} \end{align*}

Notice the use of the linearity of expectations.

The above analysis shows that the expected cut is of size \(m/2\), but it provides no information about the probability distribution of the size of the cut. An interesting question is how many times in expectation we need to repeat the algorithm to get a cut of size at least \(m/2\).

Here is a simple way to compute such a bound. Let \(p\) be the probability that we obtain a cut of size at least \(m/2\). Then we have

\begin{align*} \frac{m}{2} = \E[X] & = \sum_{i < m/2} i \cdot \P(X=i) + \sum_{i \geq m/2} i \cdot \P(X=i) \\ & \leq \left(\frac{m}{2}-1\right)\P\left(X \leq \frac{m}{2}-1\right) + m \P\left(X \geq \frac{m}{2}\right)\\ & = \left(\frac{m}{2}-1\right)\left(1-p\right) + mp\\ & = \left(\frac{m}{2}-1\right)+\left(\frac{m}{2}+1\right)p \end{align*}

We conclude that \(p \geq \frac{1}{m/2+1}\), and hence that the expected number of trials before finding a cut of size at least \(m/2\) is at most \(m/2+1\).
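The random-cut algorithm together with the repetition just analysed can be sketched as follows. The graph representation (a list of nodes and a list of node pairs) and the function names are assumptions made for this illustration.

```python
import random

def random_cut(nodes, rng):
    """Put every node in C independently with probability 1/2."""
    return {v for v in nodes if rng.random() < 0.5}

def cut_size(edges, cut):
    """Number of edges with exactly one endpoint in the cut."""
    return sum((u in cut) != (v in cut) for u, v in edges)

def large_cut(nodes, edges, rng=None):
    """Repeat the random cut until it contains at least half of the edges."""
    rng = rng or random.Random()
    while True:
        cut = random_cut(nodes, rng)
        if 2 * cut_size(edges, cut) >= len(edges):
            return cut
```

By the bound above, the loop in `large_cut` performs at most \(m/2+1\) iterations in expectation.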

- The probabilistic method

The analysis shows that in every graph there exists a cut with at least half the edges of the graph.

The statement is a typical result of the probabilistic method: show the existence of an object of a special type by showing either that

- a random object is of the special type with non-zero probability

- the expected number of objects of the special type is positive

MAX-3-SAT

- The MAXSAT problem

Given a Boolean CNF formula, find a truth assignment that maximises the number of satisfied clauses.

This is a generalisation of the SAT problem and it is NP-hard. It is also APX-hard, i.e., there is no PTAS for this problem unless P=NP.

There are interesting special versions of the problem: MAX-2-SAT, MAX-3-SAT, and in general MAX-k-SAT in which the input is a k-CNF formula. All these special cases are NP-hard and APX-hard.

- Randomised algorithm for MAX-3-SAT

The following is a simple algorithm for the MAXSAT problem.

Select a random assignment, i.e., set every variable independently to true with probability \(1/2\).

Remark: Again, this is so simple that one can hardly consider it an algorithm; it decides the output without looking at the clauses. Not all randomised algorithms are so simple, but in general they are simpler than their deterministic counterparts.

- Analysis

We will analyse the performance of the algorithm for the special case of MAX-3-SAT. A similar analysis applies to the general MAXSAT problem, and we will revisit it later when we discuss how to “derandomise” this algorithm.

Theorem: For every 3-CNF formula with \(m\) clauses, the expected number of clauses satisfied by the above algorithm is \(\frac{7}{8}m\).

Proof: Let \(\varphi=C_1\wedge\cdots\wedge C_m\) be a formula with clauses \(C_1,\ldots,C_m\). Let \(X_i\), \(i=1,\ldots,m\), be an indicator random variable of whether a random assignment satisfies clause \(C_i\) or not. The probability that \(X_i\) is \(1\) is equal to \(7/8\), because out of the \(8\) possible (and equiprobable) assignments to the three distinct variables of \(C_i\) only one does not satisfy the clause, i.e. \(\P(X_i=1)=7/8\).

From this we get \(\E[X_i]=7/8\cdot 1 + 1/8\cdot 0=7/8\) and by linearity of expectations:

\begin{align*} \E\left[\sum_{i=1}^m X_i\right] &= \sum_{i=1}^m \E[X_i] \\ &=\sum_{i=1}^m \frac{7}{8} \\ &=\frac{7}{8}m \end{align*}

Note the similarity between this proof and the proof for approximating Max Cut. Interpreting this result with the probabilistic method, we see that for every 3-CNF formula there is a truth assignment that satisfies at least \(7/8\) of its clauses.
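For small formulas the theorem can be checked by averaging over all \(2^n\) assignments. The sketch below assumes a clause is a tuple of nonzero integers, where `k` stands for \(x_k\) and `-k` for \(\overline{x}_k\), and that each clause contains three distinct variables; this encoding and the function names are choices made for the illustration.

```python
from fractions import Fraction
from itertools import product

def satisfied(clauses, assignment):
    """Count clauses with at least one true literal.

    `assignment` maps a variable index k to True or False.
    """
    return sum(any(assignment[abs(lit)] == (lit > 0) for lit in clause)
               for clause in clauses)

def expected_satisfied(clauses, num_vars):
    """Exact expectation over a uniformly random assignment (brute force)."""
    total = 0
    for values in product([False, True], repeat=num_vars):
        assignment = dict(enumerate(values, start=1))
        total += satisfied(clauses, assignment)
    return Fraction(total, 2 ** num_vars)
```

The enumeration takes exponential time and only serves as a sanity check; the point of the theorem is that the value \(\frac{7}{8}m\) is known without any enumeration.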

Sources

- Mitzenmacher and Upfal, 2nd edition, Section 6.2

Lecture 5: Derandomisation

Derandomisation (method of conditional expectations)

Derandomisation is the process of taking a randomised algorithm and transforming it into an equivalent deterministic one without substantially increasing its complexity. Is there a generic way to do this? We don’t know, and in fact there are randomised algorithms that we do not believe can be directly transformed into deterministic ones (for example, the algorithm for verifying identities of multivariate polynomials). But we do have some techniques that work for many randomised algorithms. The simplest such technique is the method of conditional expectations. Other, more sophisticated methods of derandomisation are based on reducing the amount of randomness required and then trying all possible choices.

Derandomisation using conditional expectations

To illustrate the idea of the method of conditional expectations, suppose that we have a randomised algorithm that randomly selects values for random variables \(X_1,\ldots,X_n\) with the aim of maximising \(\E[f(X_1,\ldots,X_n)]\). The method of conditional expectations fixes one-by-one the values \(X_1,\ldots,X_n\) to \(X_1^{*},\ldots,X_n^{*}\) so that \(f(X_1^{*},\ldots,X_n^{*}) \geq \E[f(X_1,\ldots,X_n)]\). To do this, we compute

\begin{align*} X_n^{*}&=\argmax_x \E[f(X_1,\ldots,X_n)\, | \,X_n=x] \\ X_{n-1}^{*}&=\argmax_x \E[f(X_1,\ldots,X_n)\, | \, X_n=X_n^{*}, X_{n-1}=x] \\ X_{n-2}^{*}&=\argmax_x \E[f(X_1,\ldots,X_n)\, | \, X_n=X_n^{*}, X_{n-1}=X_{n-1}^{*}, X_{n-2}=x] \\ \vdots \\ X_1^{*}&=\argmax_x \E[f(X_1,\ldots,X_n)\, | \, X_n=X_n^{*}, \ldots, X_2=X_2^{*},X_1=x] \end{align*}

to obtain successively values \(X_n^{*},\ldots,X_1^{*}\) for which \(f\) takes a value at least as big as its expectation. If we can compute the above deterministically and efficiently, we obtain a deterministic algorithm that is as good as the randomised algorithm.

In many cases, the random variables \(X_i\) are indicator variables, which makes it easy to compute the argmax expression by computing the conditional expectation for \(X_i=0\) and \(X_i=1\) and selecting the maximum.

Derandomisation of the MAXSAT algorithm

Recall the MAXSAT algorithm which simply selects a random truth assignment. We now show how to derandomise this algorithm. When we analysed the approximation ratio for MAXSAT, we did it only for MAX-3-SAT because it was simpler. But for the discussion here, it is easier to consider general CNF formulas. In general, the inductive approach for derandomising a problem with the method of conditional expectations is facilitated by an appropriate generalisation of the problem at hand.

The crucial observation is:

Lemma: For every CNF formula with clauses of sizes \(c_1,\ldots,c_m\), the expected number of clauses satisfied by a random truth assignment is \(\sum_{i=1}^m (1-2^{-c_i})\).

Proof: The probability that the \(i\) -th clause is satisfied is \(1-2^{-c_i}\), because out of the \(2^{c_i}\) truth assignments to the variables of the clause6, only one does not satisfy the clause. If \(Z_i\) is an indicator random variable that captures whether clause \(i\) is satisfied, then \(\E[Z_i]=1-2^{-c_i}\) (we used a similar reasoning when we analysed the MAX-3-SAT algorithm). We want to compute \(\E[\sum_{i=1}^m Z_i]\). With the linearity of expectations this is \(\sum_{i=1}^m \E[Z_i]=\sum_{i=1}^m (1-2^{-c_i})\).

Given this, we can design an algorithm that satisfies at least as many clauses as the expectation in the lemma. It is best illustrated by an example.

Let \(\varphi(x_1,x_2,x_3)=(x_1 \vee \overline{x}_2)\wedge (\overline{x}_1 \vee \overline{x}_3)\wedge (x_1 \vee x_2 \vee x_3)\). A random truth assignment satisfies \(1-2^{-2}+1-2^{-2}+1-2^{-3}=19/8\) clauses, in expectation.

If we fix the value of \(x_3\) to

- true

- the resulting formula is \((x_1 \vee \overline{x}_2)\wedge (\overline{x}_1)\wedge \text{true}\). A random assignment satisfies \(1-2^{-2}+1-2^{-1}+1=9/4\) clauses.

- false

- the resulting formula is \((x_1 \vee \overline{x}_2)\wedge \text{true} \wedge (x_1 \vee x_2)\). A random assignment satisfies \(1-2^{-2}+1+1-2^{-2}=5/2\) clauses.

We must have \(\frac{1}{2}\frac{9}{4}+\frac{1}{2}\frac{5}{2}=\frac{19}{8}\), which implies that the maximum of the values \(9/4\) and \(5/2\) (the two conditional expectations) must be at least \(19/8\). Indeed, \(5/2\geq 19/8\). This suggests that we set \(x_3\) to false and repeat with the resulting formula \((x_1 \vee \overline{x}_2)\wedge \text{true} \wedge (x_1 \vee x_2)\).

We can generalise this approach to every CNF formula and we can turn it into the following algorithm:

max-sat(\(\varphi(x_1 ,\ldots, x_n)\))

- Let \(c_1^1, \ldots, c_k^1\) be the sizes of all clauses containing literal \(x_n\)

- Let \(c_1^0, \ldots, c_l^0\) be the sizes of all clauses containing literal \(\overline{x}_n\)

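- If \(\sum_{i=1}^k 2^{-c_i^1}\geq \sum_{i=1}^l 2^{-c_i^0}\) then set \(x_n=\) true, else set \(x_n=\) false

- Repeat for the formula \(\varphi'(x_1, \ldots, x_{n-1})\) that results when we fix \(x_n\)

Note that we simplified the comparison of the expectations for the two cases \(x_n=\text{true}\) and \(x_n=\text{false}\) to \(\sum_{i=1}^k 2^{-c_i^1}\geq \sum_{i=1}^l 2^{-c_i^0}\). Setting \(x_n\) to true raises the contribution of each clause containing \(x_n\) from \(1-2^{-c_i^1}\) to \(1\), a gain of \(2^{-c_i^1}\), and lowers the contribution of each clause containing \(\overline{x}_n\) from \(1-2^{-c_i^0}\) to \(1-2^{-(c_i^0-1)}\), a loss of \(2^{-c_i^0}\); setting \(x_n\) to false has the opposite effect.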

Theorem: For every 3CNF formula, the above polynomial-time deterministic algorithm returns a truth assignment that satisfies at least \(7/8\) of the clauses.

It is known that unless P=NP, this is the best approximation ratio achievable by polytime algorithms.
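The max-sat procedure above can be sketched in Python as follows. This is a minimal illustration rather than a tuned implementation: the clause encoding (a list of non-zero integers, with `v` standing for \(x_v\) and `-v` for \(\overline{x}_v\)) and the function names are assumptions made for this example, and it assumes that no clause repeats a variable. For clarity it recomputes both conditional expectations in full instead of using the simplified comparison.

```python
from fractions import Fraction

def expected_satisfied(clauses):
    """Expected number of satisfied clauses under a uniform random assignment.

    Each clause is either True (already satisfied) or a list of the literals
    that are still unfixed; an empty list can no longer be satisfied.
    """
    return sum(Fraction(1) if c is True else 1 - Fraction(1, 2 ** len(c))
               for c in clauses)

def fix_variable(clauses, var, value):
    """Return the simplified formula after setting variable `var` to `value`."""
    true_lit = var if value else -var
    result = []
    for c in clauses:
        if c is True or true_lit in c:
            result.append(True)                       # clause is satisfied
        else:
            result.append([lit for lit in c if lit != -true_lit])
    return result

def derandomised_max_sat(clauses, n):
    """Fix x_n, ..., x_1 in turn, always keeping the larger conditional expectation."""
    assignment = {}
    for var in range(n, 0, -1):
        if_true = fix_variable(clauses, var, True)
        if_false = fix_variable(clauses, var, False)
        value = expected_satisfied(if_true) >= expected_satisfied(if_false)
        assignment[var] = value
        clauses = if_true if value else if_false
    satisfied = sum(1 for c in clauses if c is True)
    return assignment, satisfied

# The formula from the worked example: (x1 v ~x2) ^ (~x1 v ~x3) ^ (x1 v x2 v x3)
phi = [[1, -2], [-1, -3], [1, 2, 3]]
print(expected_satisfied(phi))          # 19/8, as computed in the example
print(derandomised_max_sat(phi, 3))
```

Exact rational arithmetic (`Fraction`) is used so that ties between the two conditional expectations are detected reliably.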

Derandomisation of the Max Cut algorithm

Recall the randomised algorithm for Max Cut which partitions the vertices of the graph randomly. To derandomise it, we will fix one-by-one these random choices. To do this, let \(X_i\) be an indicator random variable of whether vertex \(i\) is in the cut, and let \(c(X_1, \ldots, X_n)\) be a random variable that gives the size of the cut. The derandomisation is based on the computation of \(\E[c(X_1, \ldots, X_n) \, |\, X_i=X_i^{*},\ldots, X_n=X_n^{*}]\), which is the expected size of the cut after we fix the position of vertices \(i,\ldots,n\). An edge contributes to this expectation:

- 1, if both vertices have been fixed and are in different parts

- 0, if both vertices have been fixed and are in the same part

- \(1/2\), if at least one of the vertices has not been fixed,

from which we get the following claim.

Claim:

\begin{align*} \E[c(X_1, \ldots, X_n) | X_i=X_i^{*},\ldots, X_n=X_n^{*}] &= \sum_{[u,v]\in E} \begin{cases} 1 & \text{$u,v\geq i$ and $X_u^{*}\neq X_v^{*}$} \\ 0 & \text{$u,v\geq i$ and $X_u^{*} = X_v^{*}$} \\ \frac{1}{2} & \text{otherwise}. \end{cases} \end{align*}The algorithm then is:

max-cut(G)

- For \(i=n,\ldots,1\)

- if \(\E[c(X_1, \ldots, X_n) | X_i=1, X_{i+1}=X_{i+1}^{*},\ldots, X_n=X_n^{*}] \geq\) \(\E[c(X_1,\ldots,X_n) | X_i=0, X_{i+1}=X_{i+1}^{*},\ldots, X_n=X_n^{*}]\), fix \(X_i^{*}=1\) and put vertex \(i\) into the cut,

- otherwise, fix \(X_i^{*}=0\) and do not put vertex \(i\) into the cut.

A closer look at this algorithm shows that it is essentially the greedy algorithm: it processes vertices \(n,\ldots,1\) and for each one of them, it puts it in the part that maximises the cut of the processed vertices.

Theorem: For every graph \(G\), the above polynomial-time deterministic algorithm returns a cut of size at least half the total number of edges.
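The greedy reading of max-cut can be sketched as follows. The graph representation (vertices \(1,\ldots,n\) and a list of edges given as pairs, with no self-loops) and the function name are assumptions for this illustration. The sketch uses the fact that only edges from vertex \(i\) to already fixed vertices distinguish the two conditional expectations; every other edge contributes the same amount to both.

```python
def derandomised_max_cut(n, edges):
    """Fix vertices n, ..., 1 one by one using conditional expectations.

    Returns (side, cut) where side[i] is X_i^* and cut is the number of
    edges whose endpoints received different values.
    """
    side = {}
    for i in range(n, 0, -1):
        fixed_one = fixed_zero = 0   # fixed neighbours of i with X^* = 1 / X^* = 0
        for u, v in edges:
            if i not in (u, v):
                continue
            other = v if u == i else u
            if other in side:
                if side[other] == 1:
                    fixed_one += 1
                else:
                    fixed_zero += 1
        # X_i = 1 cuts the edges to neighbours with X^* = 0, and vice versa.
        side[i] = 1 if fixed_zero >= fixed_one else 0
    cut = sum(1 for u, v in edges if side[u] != side[v])
    return side, cut

triangle = [(1, 2), (2, 3), (1, 3)]
print(derandomised_max_cut(3, triangle))
```

Each vertex scans the edge list once, so this version runs in time \(O(nm)\); an adjacency-list representation would bring it down to \(O(n+m)\).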

Sources

- Mitzenmacher and Upfal, 2nd edition, Section 6.3

Pairwise independence and derandomization

Recall that random variables \(X_1,\ldots,X_n\) are independent if \[\P(X_1=a_1 \cap \cdots \cap X_n=a_n) = \P(X_1=a_1) \cdots \P(X_n=a_n)\] for all \(a_1,\ldots,a_n\).

We say that \(X_1,\ldots,X_n\) are pairwise independent if

\begin{align*} \P(X_i=a_i \cap X_j=a_j) = \P(X_i=a_i) \cdot \P(X_j=a_j), \end{align*}for all \(i \neq j\) and all values \(a_i, a_j\).

Bits for free!

A random bit is uniform if it assumes the values 0 and 1 with equal probability. Suppose \(X_1, \ldots, X_n\) are independent uniform random bits. Given a non-empty set \(S \subseteq \{1,\ldots,n\}\), define \(Y_S = \bigoplus_{i \in S} X_i\) (where \(\oplus\) denotes exclusive or). Clearly \(\P(Y_S=1) = 1/2\) 7. Similarly, given two different non-empty sets \(S, T \subseteq \{1,\ldots,n\}\), we have for every \(i,j\in\{0,1\}\):

\begin{align*} \P(Y_S=i \wedge Y_T=j)=1/4. \end{align*}Therefore

Claim: The \(2^n-1\) random variables \(Y_S=\bigoplus_{i \in S} X_i\), where \(S\) ranges over the non-empty subsets of \(\{1,\ldots,n\}\), are pairwise independent.

Note though that the \(Y_S\)’s are not fully independent since, e.g., \(Y_{\{1\}}\) and \(Y_{\{2\}}\) determine \(Y_{\{1,2\}}\).
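For small \(n\) the claim can be checked by brute force. The following sketch (function names are assumptions for this illustration, and indices run from 0 rather than 1) enumerates all \(2^n\) outcomes of \(X_1,\ldots,X_n\) and verifies that every pair \((Y_S, Y_T)\) takes each of its four values on exactly a quarter of the outcomes. It also confirms the remark about full independence failing.

```python
from itertools import combinations, product

def xor_bits(x, subset):
    """Y_S for the outcome x: the XOR of the bits of x indexed by subset."""
    y = 0
    for i in subset:
        y ^= x[i]
    return y

def check_pairwise_independence(n):
    """True iff all Y_S, over non-empty S in {0,...,n-1}, are pairwise independent."""
    subsets = [s for r in range(1, n + 1) for s in combinations(range(n), r)]
    outcomes = list(product([0, 1], repeat=n))
    for S, T in combinations(subsets, 2):
        for a, b in product([0, 1], repeat=2):
            count = sum(1 for x in outcomes
                        if xor_bits(x, S) == a and xor_bits(x, T) == b)
            if 4 * count != len(outcomes):     # probability must be exactly 1/4
                return False
    return True

def all_ones_count(n):
    """Number of outcomes with Y_{0} = Y_{1} = Y_{0,1} = 1 (needs n >= 2)."""
    outcomes = product([0, 1], repeat=n)
    return sum(1 for x in outcomes
               if xor_bits(x, (0,)) == xor_bits(x, (1,)) == xor_bits(x, (0, 1)) == 1)

print(check_pairwise_independence(3))
print(all_ones_count(3))   # would be 2**3 / 8 = 1 under full independence
```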

Application: Finding max cuts

Let \(G=(V,E)\) be an undirected graph with \(n\) vertices and \(m\) edges. Recall the argument that \(G\) has a cut of size at least \(m/2\). Suppose that we choose \(C\subseteq V\) uniformly at random. Let \(X\) be the number of edges with one endpoint in \(C\) and the other in \(V \setminus C\). Then \(\E[X] = m/2\) by linearity of expectations. We conclude that there must be some cut of size at least \(m/2\).

The above existence result is not constructive—it does not tell us how to find the cut. We have seen how to derandomise this algorithm using conditional expectations. Here we see another way to derandomise the algorithm using pairwise independence.

Let \(b = \lceil\log (n+1)\rceil\) and let \(X_1, \ldots, X_b\) be independent random bits. As explained above we can obtain \(n\) pairwise independent random bits \(Y_1, \ldots, Y_n\) from the \(X_i\). Now writing \(V=\{1,\ldots,n\}\) for the set of vertices of \(G\) we take \(A=\{ i : Y_i=1\}\) as our random cut. An edge \([i,j]\) crosses the cut iff \(Y_i \neq Y_j\), and this happens with probability \(1/2\) by pairwise independence. Thus, writing again \(X\) for the number of edges crossing the cut, we have \(\E[X] \geq m/2\). We conclude that there exists a choice of \(Y_1, \ldots, Y_n\) yielding a cut of size \(\geq m/2\). In turn this implies that there is a choice of \(X_1, \ldots, X_b\) yielding a cut of size \(\geq m/2\). But now we have a polynomial-time deterministic procedure for finding a large cut: try all \(2^b=O(n)\) values of \(X_1, \ldots, X_b\) until one generates a cut of size at least \(m/2\).
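The exhaustive search over seeds can be sketched as below. One detail is an assumption of this illustration and is not fixed by the text: vertex \(i\in\{1,\ldots,n\}\) is assigned the non-empty subset of seed bits given by the binary representation of \(i\), so that \(Y_i\) is the parity of the bitwise AND of the seed and \(i\). Distinct vertices get distinct non-empty subsets because \(n \leq 2^b-1\).

```python
def max_cut_pairwise(n, edges):
    """Try all 2^b seeds and return the first cut of size at least m/2.

    Vertices are 1..n and edges is a list of pairs (u, v).
    """
    b = n.bit_length()                     # equals ceil(log2(n + 1)) for n >= 1
    for seed in range(2 ** b):
        # Y_i = XOR of the seed bits selected by the binary representation of i
        side = {i: bin(seed & i).count("1") % 2 for i in range(1, n + 1)}
        cut = sum(1 for u, v in edges if side[u] != side[v])
        if 2 * cut >= len(edges):
            return side, cut
    raise AssertionError("unreachable: the average cut over all seeds is m/2")

five_cycle = [(1, 2), (2, 3), (3, 4), (4, 5), (5, 1)]
print(max_cut_pairwise(5, five_cycle))
```

The loop runs at most \(2^b \leq 2n\) times and each iteration costs \(O(n+m)\), which matches the polynomial bound claimed above.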

Sources

- Mitzenmacher and Upfal, 2nd edition, Section 6.3

Lecture 6: Further applications of linearity of expectations

Coupon collector’s problem

The geometric distribution

Random processes in which we repeat an experiment with probability of success \(p\) until we succeed are captured by the geometric distribution.

A random variable \(X\) is geometric with parameter \(p\) if it takes values \(1,2,\ldots\) with

\begin{align*} \P(X=n)=(1-p)^{n-1}p. \end{align*}The following properties follow from the definition or directly from the intuitive memoryless property of independent repeated experiments.

Lemma: For a geometric random variable \(X\) with parameter \(p\)

\begin{align*} \P(X=n+k \, |\, X>k) &= \P(X=n) \\ \E[X] &= \frac{1}{p} \end{align*}

Coupon collector’s problem - expectation

In the coupon collector’s problem, there are \(n\) distinct coupons. Each day we draw a coupon independently and uniformly at random with the goal of collecting all \(n\) different types of coupons. The question that we are interested in is: how many days do we need, in expectation, to collect all coupons?

Let \(X_i\) be the number of days between the time we obtain \(i-1\) distinct coupons and the time we obtain \(i\) distinct coupons. We want to compute \(\E[\sum_{i=1}^n X_i]\). Note that if we have \(i-1\) coupons, the probability of obtaining a new coupon is

\begin{align*} p_i&=\frac{n-i+1}{n}. \end{align*}We repeat the experiment until we succeed, at which point we have one more coupon and the probability drops to \(p_{i+1}\). That is, the random variable \(X_i\) is geometric with parameter \(p_i\). By linearity of expectations, we can now compute the expected number of days to collect all coupons:

\begin{align*} \E[\sum_{i=1}^n X_i] &= \sum_{i=1}^n \E[X_i] \\ &= \sum_{i=1}^n 1/p_i \\ &= \sum_{i=1}^n \frac{n}{n-i+1} \\ &= n H_n, \end{align*}where \(H_n=1+\frac{1}{2}+\cdots+\frac{1}{n}\) is the \(n\) -th Harmonic number, approximately equal to \(\ln n\).
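As a sanity check on the formula, the following sketch computes \(\sum_{i=1}^n 1/p_i\) exactly and compares it with a simulation of the process. The function names, the choice \(n=50\), the number of trials, and the seed are all arbitrary choices for this illustration.

```python
import random
from fractions import Fraction

def expected_days(n):
    """Exact value of sum_{i=1}^n 1/p_i = n * H_n, with p_i = (n - i + 1)/n."""
    return sum(Fraction(n, n - i + 1) for i in range(1, n + 1))

def simulate_days(n, rng):
    """Draw uniform coupons until all n types have been seen; return the number of days."""
    seen = set()
    days = 0
    while len(seen) < n:
        seen.add(rng.randrange(n))
        days += 1
    return days

rng = random.Random(0)
n, trials = 50, 2000
average = sum(simulate_days(n, rng) for _ in range(trials)) / trials
print(float(expected_days(n)), average)
```

The empirical average should be close to \(nH_n\), though any single run can deviate noticeably since the variance is of order \(n^2\).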

Sources

- Mitzenmacher and Upfal, 2nd edition, Section 2.4

Randomised quicksort

Quicksort is a simple and practical algorithm for sorting large sequences. The algorithm works as follows: it selects as pivot an element \(v\) of the sequence and then partitions the sequence into two parts: part \(L\) that contains the elements that are smaller than the pivot element and part \(R\) that contains the elements that are larger than the pivot element. It then sorts recursively \(L\) and \(R\) and puts everything together: sorted \(L\), \(v\), sorted \(R\).

Randomised Quicksort selects the pivot elements independently and uniformly at random. We want to compute the expected number of comparisons of this algorithm.

Theorem: For every input, the expected number of comparisons made by Randomised Quicksort is \(2n\ln n+O(n)\).

Proof: Let \(v_1, \ldots, v_n\) be the elements of the sequence after we sort them. The important step in the proof is to define indicator random variables \(X_{i,j}\) of whether \(v_i\) and \(v_j\) are compared at any point during the execution of the algorithm. We want to estimate \(\E[\sum_{i=1}^{n-1}\sum_{j=i+1}^n X_{i,j}]\).

The heart of the proof is to compute \(\E[X_{i,j}]\) by focusing on the elements \(S_{i,j}=\{v_i, \ldots, v_j\}\) and observing that

- the algorithm compares \(v_i\) with \(v_j\) if and only if the first element from \(S_{i,j}\) to be selected as pivot is either \(v_i\) or \(v_j\).

Given that the pivot elements are selected independently and uniformly at random, the probability for this to happen is \(2/(j-i+1)\). From this and the linearity of expectations, we get

\begin{align*} \E\left[ \sum_{i=1}^{n-1}\sum_{j=i+1}^n X_{i,j} \right] &= \sum_{i=1}^{n-1}\sum_{j=i+1}^n \frac{2}{j-i+1} \\ &= \sum_{i=1}^{n-1}\sum_{k=2}^{n-i+1} \frac{2}{k} \\ &= \sum_{k=2}^n \sum_{i=1}^{n-k+1} \frac{2}{k} \\ &= \sum_{k=2}^n (n-k+1) \frac{2}{k} \\ &= (n+1)\sum_{k=2}^n \frac{2}{k} - 2(n-1)\\ &= 2(n+1)\sum_{k=1}^n \frac{1}{k} - 4n \\ &= 2(n+1)H_n - 4n. \end{align*}Since \(H_n=\ln n+O(1)\), we get that the expectation is \(2n\ln n + O(n)\).
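The chain of equalities can be checked numerically for small \(n\). The sketch below (function names are assumptions for this illustration) evaluates the double sum \(\sum_{i<j} 2/(j-i+1)\) and the closed form \(2(n+1)H_n-4n\) in exact rational arithmetic.

```python
from fractions import Fraction

def expected_comparisons_sum(n):
    """Double sum of 2/(j - i + 1) over all pairs 1 <= i < j <= n."""
    return sum(Fraction(2, j - i + 1)
               for i in range(1, n)
               for j in range(i + 1, n + 1))

def expected_comparisons_closed(n):
    """Closed form 2(n + 1)H_n - 4n."""
    harmonic = sum(Fraction(1, k) for k in range(1, n + 1))
    return 2 * (n + 1) * harmonic - 4 * n

for n in (2, 3, 10):
    print(n, expected_comparisons_sum(n), expected_comparisons_closed(n))
```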

Sources

- Mitzenmacher and Upfal, 2nd edition, Section 2.5

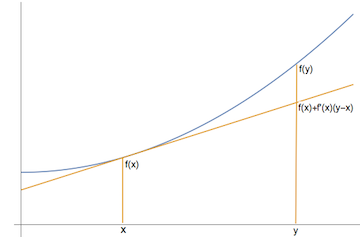

Jensen’s inequality

Another application of the linearity of expectations is a proof of Jensen’s inequality, a very general lemma and an important tool in probability theory.

Lemma: For every convex function \(f\) and every random variable \(X\) with finite expectation:

\begin{align*} \E[f(X)] &\geq f(\E[X]). \end{align*}The theory of convex functions of one or more variables plays an important part in many fields and in particular in algorithms. A function is called convex if for every \(x\), \(y\), and \(\lambda\in[0,1]\): \(f(\lambda x+(1-\lambda) y)\leq \lambda f(x)+(1-\lambda) f(y)\).

Jensen’s inequality holds for every convex function \(f\), but for simplicity we will prove it only for differentiable \(f\). For differentiable functions, the definition of convexity is equivalent to: for every \(x\) and \(y\), \(f(y)\geq f(x)+f'(x)(y-x)\).

Figure 2: A convex function: \(f(y)\geq f(x)+f'(x)(y-x)\)

With this, Jensen’s inequality is a trivial application of the linearity of expectations:

Proof: Let \(\mu=\E[X]\). We then have \(f(X) \geq f(\mu)+f'(\mu)(X-\mu)\). This implies that

\begin{align*} \E[f(X)] & \geq f(\mu)+f'(\mu)(\E[X]-\mu) \\ & = f(\mu)+f'(\mu)(\mu-\mu) \\ & = f(\mu) \\ & = f(\E[X]). \end{align*}An immediate application of Jensen’s inequality is that for every random variable \(X\): \(\E[X^2]\geq (\E[X])^2\).
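As a small concrete instance of \(\E[X^2]\geq(\E[X])^2\), take \(X\) to be the outcome of a fair six-sided die. The die is an example chosen here for illustration and the function name is hypothetical.

```python
from fractions import Fraction

def die_moments():
    """Return (E[X], E[X^2]) for a fair six-sided die."""
    p = Fraction(1, 6)
    mean = sum(p * x for x in range(1, 7))
    second_moment = sum(p * x * x for x in range(1, 7))
    return mean, second_moment

mean, second_moment = die_moments()
print(mean, second_moment, second_moment - mean ** 2)
```

The gap \(\E[X^2]-(\E[X])^2\) printed last is non-negative, as Jensen’s inequality with \(f(x)=x^2\) requires.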

Sources

- Mitzenmacher and Upfal, 2nd edition, Section 2.1

Lecture 7: Markov’s and Chebyshev’s inequalities

Markov’s and Chebyshev’s inequalities

We have seen that in many cases we can easily compute exactly or approximately the expectation of random variables. For example, we have computed the expected value of the number of comparisons of Randomised Quicksort to be \(2n\ln n+O(n)\). But this is not very informative about the behaviour of the algorithm. For example, it may be that the running time of the algorithm is either approximately \(n\) or approximately \(n^2\), but never close to the expected running time. It would be much more informative if we knew the distribution of the number of comparisons of the algorithm. Concentration bounds, also known as tail bounds, go a long way towards providing this kind of information: they tell us that some random variable takes values close to its expectation with high probability. We will see a few concentration bounds in this course, such as Markov’s and Chebyshev’s inequalities and Chernoff’s bound. All these provide inequalities for probabilities.

Union bound

Before we turn our attention to concentration bounds, we will consider a simple bound on probabilities, the union bound. In most applications, it is not strong enough to give any useful result, but there are surprisingly many cases in which the bound is useful, and sometimes it is the only available tool.

Lemma [Union bound]: For any countable set of events \(E_1,E_2,\ldots\),

\begin{align*} \P(\cup_{i\geq 1} E_i)\leq \sum_{i\geq 1} \P(E_i). \end{align*}If the events \(E_i\) are mutually exclusive, the union bound holds with equality8.

Example [Ramsey numbers - lower bound]: The Ramsey number \(R(k,m)\) is the smallest integer \(n\) such that for every graph \(G\) of \(n\) vertices, either \(G\) has a clique of size \(k\) or the complement graph \(\overline{G}\) has a clique of size \(m\). For example \(R(3,3)=6\). Ramsey’s theorem, which can be proved by induction, states that \(R(k,m)\) is well defined. Of particular interest are the numbers \(R(k,k)\), and the following theorem based on the union bound is the best lower bound that we have on these numbers.

Theorem: If \(\binom{n}{k}<2^{\binom{k}{2}-1}\) then \(R(k,k)>n\). Therefore \(R(k,k)=\Omega(k 2^{k/2})\).

Proof: Consider the complete graph of \(n\) vertices and colour its edges independently and uniformly at random with two colours. If with positive probability there is no monochromatic clique of size \(k\), then \(R(k,k)>n\).

A fixed subset of \(k\) vertices is monochromatic with probability \(2^{1-\binom{k}{2}}\). There are \(\binom{n}{k}\) such subsets and, by the union bound, the probability that there exists a monochromatic clique of size \(k\) is at most \(\binom{n}{k} 2^{1-\binom{k}{2}}\). If this is less than 1, there exists a colouring that does not create a monochromatic clique of size \(k\). Thus, if \(\binom{n}{k} 2^{1-\binom{k}{2}}<1\) then \(R(k,k)>n\). This proves the first part of the theorem. The second part is a simple consequence when we solve for \(n\).
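To get a feel for the first part of the theorem, the following sketch finds, for a given \(k\), the largest \(n\) satisfying \(\binom{n}{k}<2^{\binom{k}{2}-1}\), which certifies \(R(k,k)>n\). The function name is an assumption for this illustration.

```python
from math import comb

def ramsey_lower_bound(k):
    """Largest n with C(n, k) < 2^(C(k, 2) - 1); the theorem then gives R(k, k) > n."""
    threshold = 2 ** (comb(k, 2) - 1)
    n = k - 1                      # C(k - 1, k) = 0, so the condition holds here
    while comb(n + 1, k) < threshold:
        n += 1
    return n

for k in range(3, 11):
    print(k, ramsey_lower_bound(k))
```

For small \(k\) the bound is weak (it certifies only \(R(3,3)>3\), whereas \(R(3,3)=6\)); its strength is the exponential growth in \(k\).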

Markov’s inequality

Markov’s inequality is the weakest concentration bound.

Theorem [Markov's inequality]: For every non-negative random variable \(X\) and every \(a>0\),

\begin{align*} \P(X\geq a)\leq \frac{\E[X]}{a}. \end{align*}Proof: The proof exploits an idea that we have seen a few times: if \(Y\) is a 0-1 indicator variable, then \(\E[Y]=\P(Y=1)\). Indeed, let \(Y\) be the indicator variable of the event \(X\geq a\). Observe that \(Y\leq X/a\) because

- if \(Y=1\) then \(X\geq a\), so \(X/a\geq 1=Y\)

- if \(Y=0\) then \(Y\leq X/a\), because \(X\geq 0\).

Therefore \(\P(X\geq a)=\P(Y=1)=\E[Y]\leq \E[X/a] = \E[X]/a\), which proves the theorem.

Although Markov’s inequality does not provide much information about the distribution of the random variable \(X\), it has some advantages:

- it requires that we only know the expected value \(\E[X]\)

- it applies to every non-negative random variable \(X\)

- it is a useful tool to obtain stronger inequalities when we know more about the random variable \(X\)

Example: Markov’s inequality is useful in turning bounds on expected running time into probability bounds. For example, the expected number of comparisons of Randomised Quicksort is \(2n\ln n+O(n)\leq 3n\ln n\), for large \(n\). Therefore, the probability that the number of comparisons is more than \(6n\ln n\) is at most \(1/2\); more generally, the probability that the number of comparisons is more than \(3kn\ln n\) is at most \(1/k\), for every \(k\geq 1\).

Example: Let’s try to use Markov’s inequality to bound the probability of success of the randomised Max Cut algorithm. We have seen that the size \(X\) of the cut returned by the algorithm has expectation \(m/2\). What is the probability that the returned cut has size at least \(m/4\)? By applying Markov’s inequality directly, we only get that this probability is at most \((m/2)/(m/4)=2\), a useless bound!

But here is a trick: let’s consider the number of edges that are not in the cut. This is a random variable \(Y=m-X\) whose expectation is also \(m/2\). We want to bound the probability \(\P(X\geq m/4)=\P(Y\leq 3m/4)=1-\P(Y>3m/4)\). By Markov’s inequality \(\P(Y>3m/4)\leq 2/3\), from which we get that the probability that the randomised Max Cut algorithm returns a cut of size \(X\geq m/4\) is at least \(1-2/3=1/3\). By a straightforward generalisation, we get that the probability that the randomised Max Cut algorithm returns a cut of size greater than \((1-\epsilon) m/2\) is at least \(\epsilon/(1+\epsilon)\). In particular, by taking \(\epsilon=1/m\) and using the fact that \(m\) is an integer, we get that the probability that the algorithm returns a cut of size at least \(m/2\) (i.e., greater than \((m-1)/2\)) is at least \(1/(m+1)\).

The technique of applying Markov’s inequality to a function of the random variable we are interested in, instead of the random variable itself, is very powerful and we will use it repeatedly.

Variance and moments

The \(k\)-th moment of a random variable \(X\) is \(\E[X^k]\).

The variance of a random variable \(X\) is

\begin{align*} \var[X]=\E[(X-\E[X])^2]=\E[X^2]-\E[X]^2. \end{align*}Its square root is called standard deviation: \(\sigma[X]=\sqrt{\var[X]}\).

The second equality holds because

\begin{align*} \E[(X-\E[X])^2]=\E[X^2-2X\E[X]+\E[X]^2]=\E[X^2]-2\E[X]\E[X]+\E[X]^2=\E[X^2]-\E[X]^2. \end{align*}The covariance of two random variables \(X\) and \(Y\) is

\begin{align*} \cov[X,Y]=\E[(X-\E[X])(Y-\E[Y])]. \end{align*}We say that \(X\) and \(Y\) are positively (negatively) correlated when their covariance is positive (negative).

Lemma: For any random variables \(X\) and \(Y\):

\begin{align*} \var[X+Y]=\var[X]+\var[Y]+2\cov[X,Y]. \end{align*}If \(X\) and \(Y\) are independent then \(\cov[X,Y]=0\) and \(\var[X+Y]=\var[X]+\var[Y]\).

More generally, for pairwise independent random variables \(X_1,\ldots,X_n\):

\begin{align*} \var\left[\sum_{i=1}^n X_i\right]=\sum_{i=1}^n \var[X_i]. \end{align*}

Chebyshev’s inequality

Chebyshev’s inequality is a tail bound that involves the first two moments.

Theorem: For every random variable \(X\) and every \(a>0\),

\begin{align*} \P(|X-\E[X]|\geq a) \leq \frac{\var[X]}{a^2}. \end{align*}Proof: The proof is an application of Markov’s inequality to the (non-negative) random variable \(Y=(X-\E[X])^2\). Indeed, from the definition of \(\var[X]\) we have that \(\E[Y]=\var[X]\), and by applying Markov’s inequality we get

\begin{align*} \P((X-\E[X])^2\geq a^2)\leq \frac{\E[Y]}{a^2} = \frac{\var[X]}{a^2}, \end{align*}which proves the theorem.

Example [Binomial random variables]: We have seen that indicator random variables are very useful. An indicator random variable represents the outcome of a Bernoulli trial, which is a random experiment with two outcomes, success or failure.

A binomial random variable \(X\) with parameters \(n\) and \(p\) is the sum of \(n\) independent Bernoulli (indicator) random variables, each with probability \(p\); we write \(X\sim B(n,p)\). It follows immediately that for \(X\sim B(n,p)\),

\begin{align*} \P(X=i)=\binom{n}{i}p^i(1-p)^{n-i}. \end{align*}The expectation and variance of a binomial random variable \(X\) with parameters \(n\) and \(p\) are \(np\) and \(np(1-p)\), respectively.

We often need to estimate the probability \(\P(X\geq k)=\sum_{i=k}^n \P(X=i)=\sum_{i=k}^n \binom{n}{i}p^i(1-p)^{n-i}\). Instead of this complicated sum, we want a simple expression that approximates it. Let’s see what we get when we apply Markov’s and Chebyshev’s inequalities to bound \(\P(X\geq 3n/4)\) when \(p=1/2\) (equivalent to the probability of getting at least \(3n/4\) heads when we throw a fair coin \(n\) times).

- Markov’s inequality

- \begin{align*} \P(X\geq 3n/4)\leq (n/2)/(3n/4) = 2/3 \end{align*}

- Chebyshev’s inequality

- \begin{align*} \P(X\geq 3n/4)\leq \P(|X-n/2| \geq n/4) \leq (n/4)/(n/4)^2 = 4/n. \end{align*}

Markov’s and Chebyshev’s inequalities provide some bounds on this probability, but they are not very accurate. We will see later that Chernoff’s bound gives a much better estimate.

Coupon collector’s problem - probability

In many applications, Chebyshev’s inequality is sufficient to get decent results.

Consider the coupon collector’s problem. We have seen that the time for collecting all coupons is the sum of \(n\) geometric variables \(X_i\), \(i=1,\ldots, n\), with parameters \((n-i+1)/n\) and \(\E[\sum_{i=1}^n X_i]=n H_n\).

The variance of a geometric variable with parameter \(p\) is \((1-p)/p^2\). Given that the \(X_i\)’s are independent, we have

\begin{align*} \var\left[\sum_{i=1}^n X_i\right] &=\sum_{i=1}^n \frac{n(i-1)}{(n-i+1)^2} \\ &\leq \sum_{i=1}^n \left(\frac{n}{n-i+1}\right)^2 \\ &= n^2 \sum_{i=1}^n \frac{1}{i^2} \\ &\leq \frac{\pi^2 n^2}{6}. \end{align*}With this, we can apply Chebyshev’s inequality to get

\begin{align*} \P(|X-n H_n|\geq nH_n)\leq \frac{\pi^2 n^2/6}{(n H_n)^2}=O\left(\frac{1}{\log^2 n}\right), \end{align*}where \(X=\sum_{i=1}^n X_i\); that is, the probability of exceeding the expectation by a factor of 2 is small. Although Chebyshev’s inequality provides a decent estimate in this case, we know much stronger concentration bounds for the coupon collector’s problem.

Sources

- Mitzenmacher and Upfal, 2nd edition, Section 3.1

- Nick Harvey, Lecture2Notes.pdf (page 2)

- Lap Chi Lau, L02.pdf (page 4)

Lecture 8: Chernoff bounds

Chernoff bounds

One of the most useful concentration bounds is Chernoff’s bound for binomial random variables and more generally for sums of independent \(0\)-\(1\) random variables.

Theorem: Let \(X_1,\ldots,X_n\) be 0-1 independent random variables such that \(\P(X_i=1)=p_i\). Let \(X=\sum_{i=1}^n X_i\) be their sum and \(\mu=\E[X]\) its expected value. Then for \(\delta>0\),

\begin{align*} \P(X\geq (1+\delta)\mu) &\leq \left(\frac{e^{\delta}}{(1+\delta)^{1+\delta}}\right)^{\mu}, \end{align*}and for \(0<\delta<1\),

\begin{align*} \P(X\leq (1-\delta)\mu) &\leq \left(\frac{e^{-\delta}}{(1-\delta)^{1-\delta}}\right)^{\mu}. \end{align*}Proof: We will only prove the first inequality, as the proof of the second inequality is very similar. The proof is based on applying Markov’s inequality to the random variable \(e^{t X}\), for some appropriate parameter \(t>0\).

\begin{align*} \P(X\geq (1+\delta)\mu) &= \P(e^{t X}\geq e^{t(1+\delta)\mu}) \\ &\leq \frac{\E[e^{tX}]}{e^{t(1+\delta)\mu}} \\ &= \frac{\E[e^{t\sum_{i=1}^n X_i}]}{e^{t(1+\delta)\mu}} \\ &= \frac{\prod_{i=1}^n \E[e^{t X_i}]}{e^{t(1+\delta)\mu}} \\ &= \frac{\prod_{i=1}^n (p_i e^t+ (1-p_i))}{e^{t(1+\delta)\mu}}. \end{align*}The above inequality comes from Markov’s inequality, and the second-to-last equality follows from the fact that the \(X_i\)’s are independent. These are the two main ingredients of the proof; everything else in the proof consists of standard algebraic manipulations and elementary calculus.

Using the fact that for every \(x\), \(e^x\geq 1+x\), we compute

\begin{align*} p_i e^t + (1-p_i)&=1+p_i(e^t-1)\leq e^{p_i(e^t-1)}, \\ \prod_{i=1}^n (p_i e^t+ (1-p_i)) & \leq \prod_{i=1}^n e^{p_i(e^t-1)} = e^{\sum_{i=1}^n p_i(e^t-1)} = e^{\mu (e^t-1)} \end{align*}Therefore, for every \(t>0\),

\begin{align*} \P(X\geq (1+\delta)\mu) &\leq \frac{e^{\mu (e^t-1)}}{e^{t(1+\delta)\mu}}=\left( \frac{e^{e^t-1}}{e^{t(1+\delta)}} \right)^{\mu}. \end{align*}This is minimised when \(t=\ln(1+\delta)\), and substituting this value gives the first inequality.
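To spell out the last step (a routine check, added here for completeness): the exponent \(g(t)=e^t-1-t(1+\delta)\) has derivative \(g'(t)=e^t-(1+\delta)\), which vanishes at \(t=\ln(1+\delta)\); this value is positive because \(\delta>0\), as the application of Markov’s inequality requires. At this value we have \(e^t-1=\delta\) and \(e^{t(1+\delta)}=(1+\delta)^{1+\delta}\), so

\begin{align*} \left( \frac{e^{e^t-1}}{e^{t(1+\delta)}} \right)^{\mu} = \left(\frac{e^{\delta}}{(1+\delta)^{1+\delta}}\right)^{\mu}. \end{align*}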

The next corollary gives slightly weaker bounds that are simpler to apply. They can be derived directly from the theorem using the following inequalities for \(0<\delta<1\), which can be established by elementary calculus techniques:

\begin{align*} \delta-(1+\delta)\ln(1+\delta) &\leq -\delta^2/3, \\ -\delta-(1-\delta)\ln(1-\delta) &\leq -\delta^2/2. \end{align*}Corollary: Let \(X_1,\ldots,X_n\) be 0-1 independent random variables such that \(\P(X_i=1)=p_i\). Let \(X=\sum_{i=1}^n X_i\) and \(\mu=\E[X]\). Then for \(0<\delta<1\),

\begin{align*} \P(X\geq (1+\delta)\mu) &\leq e^{-\mu \delta^2/3}, \\ \P(X\leq (1-\delta)\mu) &\leq e^{-\mu \delta^2/2}. \end{align*}

Examples

Coin flips

Example [Coin flips]: Let’s analyse the number of heads of \(n\) independent fair coin flips. Let \(X_i\) be the indicator random variable for the \(i\)-th coin flip and let \(X=\sum_{i=1}^{n} X_i\) be the total number of heads. Applying the Chernoff bound we get

\begin{align*} \P\left(X-\frac{n}{2}\geq \frac{1}{2} \sqrt{6n\ln n}\right) \leq \exp\left(-\frac{1}{3} \frac{n}{2} \frac{6\ln n}{n}\right) =\frac{1}{n}. \end{align*}There is a similar bound for \(\P(\frac{n}{2}-X\geq \frac{1}{2} \sqrt{6n\ln n})\). This shows that the number of heads is tightly concentrated around the mean. The probability away from the mean drops exponentially. For example,

\begin{align*} \P\left(X\geq 3n/4\right)\leq \exp\left(-\frac{1}{3} \frac{n}{2} \frac{1}{4}\right) =e^{-n/24}. \end{align*}Compare this with the bounds that we got using Markov’s and Chebyshev’s inequalities:

- Markov’s inequality

- \begin{align*} \P(X\geq 3n/4)\leq (n/2)/(3n/4) = 2/3 \end{align*}

- Chebyshev’s inequality

- \begin{align*} \P(X\geq 3n/4)\leq \P(|X-n/2| \geq n/4) \leq (n/4)/(n/4)^2 = 4/n. \end{align*}
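The three bounds can be compared with the exact tail probability for a concrete \(n\). The sketch below assumes \(n\) is divisible by 4 so that \(3n/4\) is an integer; the function names are assumptions for this illustration.

```python
from math import comb, exp

def exact_tail(n, k):
    """P(X >= k) for X ~ B(n, 1/2), computed from the binomial coefficients."""
    return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n

def tail_bounds(n):
    """Exact value of P(X >= 3n/4) and the three upper bounds discussed above."""
    return {
        "exact": exact_tail(n, 3 * n // 4),
        "markov": 2 / 3,
        "chebyshev": 4 / n,
        "chernoff": exp(-n / 24),
    }

for n in (100, 400):
    print(n, tail_bounds(n))
```

For small \(n\) the Chernoff bound \(e^{-n/24}\) can be larger than the Chebyshev bound \(4/n\); its advantage is that it decays exponentially, so it wins by a wide margin once \(n\) is moderately large.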

Random walk on a line

In many applications, we consider sums of random variables that take values \(\{-1,1\}\) instead of \(\{0,1\}\). We can translate the above Chernoff bounds to this case, but with a very similar direct proof we get slightly better bounds:

Theorem: Let \(X_1,\ldots,X_n\) be independent random variables with \(\P(X_i=1)=\P(X_i=-1)=1/2\), and let \(X=\sum_{i=1}^n X_i\). Then for \(a>0\),

\begin{align*} \P(X\geq a)\leq e^{-a^2/(2n)}. \end{align*}Example [Random walk]: Consider a particle that takes an unbiased random walk on a line: it starts at \(Z_0=0\) and at each time step \(t\) it moves from its current position \(Z_{t-1}\) either left, to \(Z_t=Z_{t-1}-1\), or right, to \(Z_t=Z_{t-1}+1\), with equal probability.

We will use Chernoff bounds to show that after \(n\) steps its position is with high probability within distance \(O(\sqrt{n\ln n})\) of the origin. Indeed \(X_i=Z_i-Z_{i-1}\) are independent random variables with \(\P(X_i=1)=\P(X_i=-1)=1/2\), and \(Z_n=\sum_{i=1}^n X_i\). Therefore

\begin{align*} \P(|Z_n|\geq \sqrt{a n\ln n})\leq 2 e^{-a n\ln n/2n}=2n^{-a/2}. \end{align*}For \(a=2\), the probability of being more than \(\sqrt{2 n\ln n}\) steps away from the origin is at most \(2/n\).
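The bound can be compared with the exact distribution of \(Z_n\), using the fact that \(Z_n=2H-n\) where \(H\sim B(n,1/2)\) counts the steps to the right. The function name and the choice \(n=100\) are assumptions for this illustration.

```python
from math import comb, log, sqrt

def walk_tail(n, a=2.0):
    """Return (exact, bound) for P(|Z_n| >= sqrt(a * n * ln n)).

    exact is computed from Z_n = 2H - n with H ~ B(n, 1/2);
    bound is the Chernoff estimate 2 * n^(-a/2).
    """
    distance = sqrt(a * n * log(n))
    favourable = sum(comb(n, h) for h in range(n + 1)
                     if abs(2 * h - n) >= distance)
    return favourable / 2 ** n, 2 * n ** (-a / 2)

print(walk_tail(100))
```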

Some useful bounds that we often use:

- \(1+x \leq e^x\)

- Proof: By taking derivatives, the minimum of \(e^x-x-1\) is achieved when \(x=0\) and it is \(e^0-0-1=0\).

- \(\frac{e^x+e^{-x}}{2} \leq e^{x^2/2}\)

Proof: Consider the Taylor expansions of \(e^x\) and \(e^{-x}\):

\begin{align*} (e^x+e^{-x})/2 &= \left(\sum_{k=0}^{\infty} x^k/k! + \sum_{k=0}^{\infty} (-x)^k / k! \right)/2 \\ &= \sum_{k=0}^{\infty} x^{2k}/(2k)! \\ &\leq \sum_{k=0}^{\infty} (x^2/2)^{k}/k! \\ &= e^{x^2/2}. \end{align*}The inequality follows from term-wise comparison: \((2k)! \geq 2^k k!\).

- \(\left(\frac{n}{k} \right)^k \leq \binom{n}{k} \leq \left(\frac{e n}{k} \right)^k\)

Proof: For the lower bound,

\begin{align*} \binom{n}{k} = \frac{n(n-1)\cdots (n-k+1)}{k (k-1)\cdots 1} = \frac{n}{k}\cdots \frac{n-k+1}{1} \geq \frac{n}{k}\cdots\frac{n}{k}=\left(\frac{n}{k} \right)^k \end{align*}For the upper bound,

\begin{align*} \binom{n}{k} = \frac{n(n-1)\cdots (n-k+1)}{k (k-1)\cdots 1} \leq \frac{n^k}{k!} \leq \frac{n^{k}}{k^k/e^k}. \end{align*}To see the last inequality, take the Taylor expansion of \(e^k\) and observe that its \(k\)-th term is \(k^k/k!\), which shows \(e^k \geq k^k/k!\).

Sources

- Mitzenmacher and Upfal, 2nd edition, Sections 4.1-4.4

- Lap Chi Lau, L03.pdf (page 1)

Lecture 9: Applications of Chernoff bounds

Set Balancing

Example [Set balancing]:

Let \(A\) be an \(n \times m\) matrix with entries in \(\{0,1\}\). Columns represent individuals and rows represent features, and \(A_{i,j}\) indicates whether individual \(j\) has feature \(i\). The set balancing problem asks to partition the set of individuals into two classes such that each feature is as balanced as possible between the classes. For example, one might want to test a new drug and a placebo respectively on two groups that are as similar as possible.

We represent the partition by an \(m\)-dimensional vector \(b\) with entries in \(\{-1,1\}\), where the value of \(b_i\) indicates the class to which individual \(i\) belongs. Our goal is to minimise the discrepancy \(||A\cdot b||_{\infty}\),9 which is the maximum over all features of the difference between the respective numbers of people possessing the feature in the two groups.

Here is a simple randomised algorithm for selecting the vector \(b\):

Independently choose each entry \(b_j\) to be \(1\) or \(-1\), each with probability \(1/2\).

Note that this procedure ignores the entries of the feature matrix \(A\).

Theorem: For a random vector \(b\) with entries chosen independently and equiprobably from \(\{-1,1\}\), we have \(|| A\cdot b ||_{\infty} \geq \sqrt{4m \ln n}\) with probability at most \(2/n\).

Proof: We first focus on a specific row \(a_i\) of the matrix \(A\). We have two cases depending on the number of non-zero entries \(k\) of \(a_i\):

- \(k\leq \sqrt{4m \ln n}\). Then clearly \(|a_i\cdot b|\leq \sqrt{4m \ln n}\).

- \(k> \sqrt{4m \ln n}\). In this case, \(a_i\cdot b\) is a sum of \(k\leq m\) independent random variables taking values in \(\{-1,1\}\) with equal probability, so we can apply the Chernoff bound

\begin{align*} \P(|a_i \cdot b|\geq \sqrt{4 m\ln n})\leq 2 e^{-4 m\ln n/(2k)} \leq 2 e^{-4 m\ln n/(2m)}=2n^{-2}. \end{align*}Since there are \(n\) rows, the theorem follows from the union bound.
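The randomised algorithm and the discrepancy it achieves can be sketched as follows. The feature matrix here is random test data generated only for this illustration, and the function names, dimensions, and seed are arbitrary choices.

```python
import random
from math import log, sqrt

def random_partition(m, rng):
    """Choose each entry of b independently and uniformly from {-1, 1}."""
    return [rng.choice((-1, 1)) for _ in range(m)]

def discrepancy(A, b):
    """The infinity norm of A*b: the largest imbalance over all features (rows)."""
    return max(abs(sum(a_ij * b_j for a_ij, b_j in zip(row, b))) for row in A)

rng = random.Random(1)
n, m = 50, 100                         # n features (rows), m individuals (columns)
A = [[rng.randint(0, 1) for _ in range(m)] for _ in range(n)]
b = random_partition(m, rng)
print(discrepancy(A, b), sqrt(4 * m * log(n)))
```

By the theorem, the first printed value exceeds the second with probability at most \(2/n\); in practice the discrepancy is usually far below the threshold.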

Balls and bins - maximum load

We throw \(n\) balls into \(n\) bins and we are interested in the maximum load.

First let’s consider a fixed bin, say bin \(1\). Its expected load is 1. Since the balls are thrown independently, we can use the Chernoff bounds. Here we have the sum of \(n\) independent 0-1 random variables, each equal to 1 with probability \(1/n\). In other words, the number of balls in bin \(1\) is \(Y_1\sim B(n,1/n)\). The Chernoff bound gives (with \(\mu=\E[Y_1]=1\))

\begin{align*} \P(Y_1 \geq 1+\delta)\leq \frac{e^{\delta}}{(1+\delta)^{1+\delta}}. \end{align*}If we select \(\delta=\Theta(\ln n/\ln\ln n)\) with a suitable constant, the right-hand side is at most \(1/n^2\); specifically, this holds for \(1+\delta=3\ln n/\ln\ln n\) and \(n\) sufficiently large.

By the union bound, the probability that some bin has load at least \(1+\delta\) is at most \(n(1/n^2)=1/n\).

Theorem: When we throw \(n\) balls into \(n\) bins uniformly and independently, the probability that the maximum load is more than \(3\ln n/\ln\ln n\) is at most \(1/n\), for \(n\) sufficiently large.
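The choice \(1+\delta=3\ln n/\ln\ln n\) can be checked numerically, and the maximum load can be simulated. The following Python sketch is not part of the original notes; the function names and the sample values of \(n\) are assumptions made for illustration, and the numeric check covers only the listed values of \(n\), not all large \(n\).

```python
import math
import random
from collections import Counter


def chernoff_upper_tail(mu, delta):
    """Chernoff bound (e^delta / (1+delta)^(1+delta))^mu, computed via logs."""
    return math.exp(mu * (delta - (1 + delta) * math.log(1 + delta)))


def load_threshold(n):
    """The value 3 ln n / ln ln n used in the theorem (needs n >= 3)."""
    return 3 * math.log(n) / math.log(math.log(n))


def max_load(n, rng):
    """Throw n balls into n bins uniformly at random; return the maximum load."""
    counts = Counter(rng.randrange(n) for _ in range(n))
    return max(counts.values())


rng = random.Random(1)
for n in (100, 10_000):
    bound = chernoff_upper_tail(1, load_threshold(n) - 1)
    print(n, "bound <= 1/n^2:", bound <= 1 / n**2,
          "| simulated max load:", max_load(n, rng),
          "| threshold:", round(load_threshold(n), 2))
```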

To bound the expected maximum load, we provide an upper bound for the following two cases:

- with probability at least \(1-1/n\), the maximum load is at most \(3\ln n/\ln\ln n\)

- in the remaining cases, which occur with probability at most \(1/n\), the maximum load is trivially at most \(n\)

Therefore the expected maximum load is at most \((1-1/n)\cdot 3\ln n/\ln\ln n+1/n \cdot n\leq 1+3\ln n/\ln\ln n\).

This is an upper bound on the expected maximum load and it is tight up to a constant factor. One can derive a matching lower bound using the second moment method, but this is beyond today’s lecture. The following theorem is offered without proof.

Theorem: When we throw \(n\) balls into \(n\) bins uniformly and independently, the expected maximum load is \(\Theta(\log n/\log\log n)\).

In fact, we know of very precise bounds, that we will also not prove here. The expected maximum load is \(\ln n/\ln\ln n (1+o(1))\).

Sources

- Mitzenmacher and Upfal, 2nd edition, sections 5.1, 5.2

Randomness and non-uniformity

We consider here an application of Chernoff bounds to computational complexity. Recall the definition of the complexity class BPP.

The class Bounded-error Probabilistic-Polynomial-time (BPP) consists of all languages \(L\) for which there exists a deterministic algorithm \(A(x,r)\) with running time a polynomial \(p(\cdot)\) in the length of \(x\), such that for every \(x\), with \(r\) ranging over the random strings with \(|r|\leq p(|x|)\):

- \(A(x,r)\) is correct about \(x\in L\) for at least \(3/4\) of the \(r\)’s.

Fix a problem that admits a randomised BPP algorithm \(A(x,r)\) with running time \(p(n)\). We will consider only inputs of length \(n\). Imagine a very large table with rows the \(2^n\) possible inputs \(x\) of size \(n\), columns the \(2^{p(n)}\) random strings \(r\), and 0-1 entries indicating whether the algorithm is correct for the given input and random string. The fact that this is a BPP algorithm is equivalent to saying that for every row of the table, the fraction of \(1\)’s is at least \(3/4\). Note that this immediately implies that there exists a column whose fraction of \(1\)’s is at least \(3/4\).

Now if we run the algorithm three times and take the majority of answers, the probability of success increases to at least \(27/32\). This means that there exist 3 columns in the table such that for a fraction of at least \(27/32\) of the rows the majority in the three columns is 1. By running the algorithm many times, say \(k\) times, we can make the probability of success on every fixed input more than \(1-2^{-n}\). By the union bound over the \(2^n\) rows, a random choice of \(k\) columns then fails on some row with probability less than 1, which implies that there are \(k\) columns such that for every row the majority is 1.

If we knew these \(k\) columns, we could use them to get the correct answer for every input. Reinterpreting this, we can say that we can derandomise the algorithm by running the algorithm with these particular \(k\) random strings and taking the majority.

We now show that \(k\) does not have to be very large. What is the probability of failure when we run the algorithm \(k\) times? Since we take the majority, it is at most \(\P(X\leq k/2)\), where \(X\) is a binomial variable \(X\sim B(k,3/4)\). To apply a Chernoff bound, we compute \(\E[X]=\mu=3k/4\) and write \(k/2=(1-\delta)\mu\) with \(\delta=1/3\).

\begin{align*} \P(X\leq k/2) &= \P(X\leq (1-\delta)\mu) \\ & \leq e^{-\delta^2\mu/2} \\ & = e^{-k/24}. \end{align*}We want this to be less than \(2^{-n}\), which holds when \(k>24 n\ln 2\); hence \(k=O(n)\) repetitions suffice. So, we have shown that

Theorem: If a language \(L\) admits a BPP algorithm \(A\) with running time \(O(p(n))\), there exists a BPP algorithm \(A'\) for \(L\) with running time \(O(n p(n))\) and strings \(r_n^{*}\), \(n=1,2,\ldots\), such that \(A'(x,r_n^{*})\) is correct for all \(x\) of size \(n\).
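The amplification argument can be illustrated with exact arithmetic. The following Python sketch is not part of the original notes; the function names are assumptions, and the success probability is taken to be exactly \(3/4\), the worst case allowed by the definition of BPP.

```python
import math
from fractions import Fraction


def majority_failure(k, p=Fraction(3, 4)):
    """Exact P(X <= k/2) for X ~ B(k, p): the majority of k runs is not correct."""
    return sum(math.comb(k, j) * p**j * (1 - p) ** (k - j)
               for j in range(k // 2 + 1))


def chernoff_failure_bound(k):
    """The Chernoff bound e^{-k/24} derived above (delta = 1/3, mu = 3k/4)."""
    return math.exp(-k / 24)


def min_repetitions(n):
    """Smallest integer k with e^{-k/24} < 2^{-n}, i.e. k > 24 n ln 2."""
    return math.floor(24 * n * math.log(2)) + 1


print(1 - majority_failure(3))  # success probability of a majority of 3 runs
for n in (5, 10, 20):
    k = min_repetitions(n)
    print(n, k, float(majority_failure(k)), chernoff_failure_bound(k), 2.0**-n)
```

The exact failure probability is typically much smaller than the Chernoff bound, so the number of repetitions returned by `min_repetitions` is sufficient but not necessary.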

When we fix the random string to be \(r_n^{*}\), algorithm \(A'\) becomes a deterministic algorithm, but it works only for inputs \(x\) of length \(n\). This is an example of non-uniform computational complexity: for each input size we have a different algorithm. Non-uniform computation is not in general equivalent to uniform computation. In particular, computing the strings \(r_n^{*}\) may be a substantially more difficult problem.

Similar considerations apply to RP, as well as to other computational models. In particular, the same reasoning shows that we don’t need to consider randomised circuits. Circuits are a typical non-uniform computational model: we need a different circuit for every input size. By reasoning similar to that for BPP, we get

Theorem: If a language (problem) has randomised circuits of size \(O(p(n))\) for inputs of size \(n\), then it has deterministic circuits of size \(O(n p(n))\).

Given that a language with running time \(O(p(n))\) has circuits of size \(O(p(n)^2)\), we get

Corollary: BPP has polynomial-size circuits.

Sources

- Luca Trevisan’s notes lecture08.pdf

Lecture 10: Martingales and stopping times

Martingales

A martingale is a sequence of random variables \(X_0,X_1,\ldots,\) of bounded expectation such that for every \(i\geq 0\),

\begin{align*} \E[X_{i+1} \, | \, X_0,\ldots, X_i]=X_i. \end{align*}More generally, a sequence of random variables \(Z_0,Z_1,\ldots\) is a martingale with respect to the sequence \(X_0,X_1,\ldots\) if for all \(n\geq 0\) the following conditions hold:

- \(Z_n\) is a function of \(X_0,\ldots,X_n\),

- \(\E[|Z_n|]<\infty\),

- \(\E[Z_{n+1} \,|\, X_0,\ldots, X_n]=Z_n\).

If we replace the last condition by \(\E[Z_{n+1} \,|\, X_0,\ldots, X_n] \leq Z_n\), we get a supermartingale. The reverse inequality, \(\E[Z_{n+1} \,|\, X_0,\ldots, X_n] \geq Z_n\), gives a submartingale.

Gambler’s fortune

Example [Gambler's fortune]: A gambler plays a sequence of fair games. Let \(X_i\) be the amount that the gambler wins on the \(i\)-th game; this is positive or negative, each with probability \(1/2\). Let \(Z_i\) denote the gambler’s total winnings immediately after the \(i\)-th game. Since the games are fair, \(\E[X_i]=0\) and \(\E[Z_{i+1} \,|\, X_0,\ldots,X_i]=Z_i+\E[X_{i+1}]=Z_i\), which shows that every finite sequence \(Z_0,Z_1,\ldots,Z_n\) is a martingale. This is a generalisation of the simple random walk on a line, because each bet can be arbitrary; in particular, the gambler can use the past outcomes and any algorithm to calculate the amount of the next bet.

In the case of unfair games in which the gambler is expected to lose money in each game we have a supermartingale.
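For fair games, the claim that the expected fortune stays at zero whatever the betting rule can be checked exactly on small cases. The following Python sketch is not part of the original notes: it assumes each game is a fair coin flip that pays plus or minus the stake, and `doubling_bet` is a hypothetical strategy chosen only for illustration.

```python
from fractions import Fraction
from itertools import product


def doubling_bet(history):
    """Hypothetical strategy: stake 1, doubled for each loss in the current losing streak."""
    stake = 1
    for outcome in reversed(history):
        if outcome == 1:
            break
        stake *= 2
    return stake


def expected_winnings(n, bet):
    """Exact E[Z_n] over all 2^n equally likely outcome sequences of n fair games."""
    total = Fraction(0)
    for coins in product((1, -1), repeat=n):
        z = 0
        for i in range(n):
            # The stake may depend on the past outcomes only.
            z += bet(coins[:i]) * coins[i]
        total += z
    return total / 2**n


print(expected_winnings(6, doubling_bet))   # doubling strategy
print(expected_winnings(6, lambda h: 1))    # simple random walk
```

Both calls enumerate all outcome sequences, so the result is an exact expectation rather than a Monte Carlo estimate.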

Balls and bins

Example [Balls and bins]: Suppose that we throw \(m\) balls into \(n\) bins independently and uniformly at random. This is one of the most-studied random experiments and we usually ask questions about the expected maximum load or the expected number of empty bins.

Here we consider the expected number of empty bins. Let \(X_i\) be the random variable representing the bin into which the \(i\)-th ball falls. Let \(Y\) be a random variable representing the number of empty bins at the end. Then the sequence of random variables

\begin{align*} Z_i=\E[Y \,|\, X_1,\ldots,X_i] \end{align*}is a martingale. Clearly \(Z_i\) is a function of the \(X_1,\ldots,X_i\)’s and has bounded expectation. Furthermore

\begin{align*} \E[Z_{i+1} \,|\, X_1,\ldots,X_i] &=\E[\E[Y \,|\, X_1,\ldots,X_i,X_{i+1}] \,|\, X_1,\ldots,X_i] \\ &= \E[Y \,|\, X_1,\ldots,X_i] \\ &= Z_i. \end{align*}We can view \(Z_i\) as an estimate of \(Y\) after having observed the outcomes \(X_1,\ldots,X_i\). At the beginning \(Z_0\) is a crude estimate, simply the expectation of \(Y\). As we add more balls to the bins, \(Z_i\)’s give improved estimates of \(Y\), and at the end we get the exact value \(Z_m=Y\).
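For this particular experiment the estimates \(Z_i\) have a simple closed form, which makes the martingale property easy to verify. The following Python sketch is not part of the original notes. It relies on the observation, by linearity of expectation, that if \(e\) bins are empty after \(i\) balls then \(Z_i = e\,(1-1/n)^{m-i}\), since each of these bins stays empty with probability \((1-1/n)^{m-i}\); the function names are assumptions.

```python
from fractions import Fraction


def doob_estimate(n, m, i, empty):
    """Z_i = E[Y | X_1..X_i] when `empty` bins are empty after i of the m balls."""
    return empty * Fraction(n - 1, n) ** (m - i)


def next_step_average(n, m, i, empty):
    """E[Z_{i+1} | X_1..X_i]: average of Z_{i+1} over where ball i+1 lands."""
    p_hit_empty = Fraction(empty, n)  # the next ball fills an empty bin
    return (p_hit_empty * doob_estimate(n, m, i + 1, empty - 1)
            + (1 - p_hit_empty) * doob_estimate(n, m, i + 1, empty))


n, m = 5, 8
print("Z_0 =", doob_estimate(n, m, 0, n))  # the unconditional expectation of Y
for empty in range(n + 1):
    assert next_step_average(n, m, 3, empty) == doob_estimate(n, m, 3, empty)
```

At \(i=m\) the exponent is zero, so the estimate equals the actual number of empty bins, matching \(Z_m=Y\).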

Doob martingales

Example [Doob martingales]: The balls and bins example is a typical Doob martingale. In general, Doob martingales are processes in which we obtain a sequence of improved estimates of the value of a random variable as information about it is revealed progressively. More precisely, suppose that \(Y\) is a random variable that is a function of random variables \(X_0,X_1,\ldots\). As we observe the sequence of random variables \(X_0,\ldots,X_n\), we improve our estimates of \(Y\). The sequence of the mean estimates

\begin{align*} Z_t=\E[Y\,|\, X_0,\ldots,X_t] \end{align*}forms a martingale with respect to the sequence \(X_0,\ldots,X_n\) (provided that the \(Z_t\)’s are bounded). Indeed, when we argued that the balls and bins process is a martingale, we used no property of the experiment; therefore the following holds in general:

\begin{align*} \E[Z_{t+1} \,|\, X_0,\ldots,X_t] &=\E[\E[Y\,|\, X_0,\ldots,X_t,X_{t+1}] \,|\, X_0,\ldots,X_t] \\ &=\E[Y\,|\, X_0,\ldots,X_t] \\ &=Z_t. \end{align*}

Stopping times

A nonnegative, integer-valued random variable \(T\) is a stopping time for the sequence \(Z_0,Z_1,\ldots\), if for every \(n\), the event \(T=n\) depends only on \(Z_0,Z_1,\ldots,Z_n\).

For example, in gambler’s fortune (equivalent to the random walk on the line), the first time that the gambler wins an amount \(k\) is a stopping time, but the first time that the gambler attains the maximum winnings during the first \(n\) games is not a stopping time.

Stopping times and martingales

Lemma: Let \(Z_0,\ldots,Z_t\) be a martingale with respect to \(X_0,\ldots,X_t\). Then

\begin{align*} \E[Z_t]=\E[Z_0]. \end{align*}Proof: From the definition of martingales, \(\E[Z_{i+1}\,|\,X_0,\ldots,X_i]=Z_i\). The lemma follows by taking expectations on both sides to get \(\E[Z_{i+1}]=\E[Z_i]\) and applying induction.

The question is whether the lemma applies not only at fixed times \(t\) but also at general stopping times. The answer is negative in general, as the following example shows: Consider again the gambler’s fortune and the first time that the gambler wins an amount \(k>0\). This is a stopping time, but clearly the expectation at the end is \(k\), which is greater than the expectation at the beginning, which is 0. However, under certain conditions the lemma can be generalised to stopping times.

Theorem [Optional stopping theorem]: Let \(Z_0,Z_1,\ldots\) be a martingale with respect to \(X_1,X_2,\ldots\). If \(T\) is a stopping time for \(X_1,X_2,\ldots\) then

\begin{align*} \E[Z_T]=\E[Z_0], \end{align*}if one of the following conditions holds:

- there is a constant \(c\) such that \(|Z_i|\leq c\), for every \(i\)

- \(T\) is bounded

- \(\E[T]<\infty\) and there is a constant \(c\) such that \(\E[|Z_{i+1}-Z_i| \,|\, X_1,\ldots,X_i]\leq c\).

A similar conclusion holds for supermartingales, i.e., \(\E[Z_T]\leq \E[Z_0]\), and for submartingales, i.e., \(\E[Z_T]\geq \E[Z_0]\).

For example, the gambler’s stopping rule of stopping when she is ahead by an amount \(k\), i.e., stop when \(Z_t=k\), does not satisfy any of the conditions: the \(Z_i\)’s can be arbitrarily negative, the time \(T\) can be unbounded, and in fact the expected value of \(T\) is infinite (see the example below and consider the case \(b\rightarrow\infty\)).
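The role of the condition that \(T\) is bounded can be seen by truncating the gambler's rule: stop when ahead by \(k\) or after \(n\) games, whichever comes first. The following Python sketch is not part of the original notes; it assumes unit bets on fair coin flips, and the function names are chosen for illustration. It computes the stopped expectation exactly by enumerating all \(2^n\) outcome sequences.

```python
from fractions import Fraction
from itertools import product


def stopped_walk(steps, k):
    """Follow the walk until it first reaches k or the steps run out.

    Returns (final position, whether k was reached)."""
    z = 0
    for s in steps:
        z += s
        if z == k:
            return z, True
    return z, False


def stopped_expectation(k, n):
    """Exact E[Z_{min(T, n)}], where T is the first time the walk reaches k."""
    total = sum(stopped_walk(steps, k)[0] for steps in product((1, -1), repeat=n))
    return Fraction(total, 2**n)


def hit_probability(k, n):
    """Exact P(T <= n): the walk reaches k within the first n steps."""
    hits = sum(stopped_walk(steps, k)[1] for steps in product((1, -1), repeat=n))
    return Fraction(hits, 2**n)


for n in (1, 3, 7, 11):
    print(n, stopped_expectation(1, n), hit_probability(1, n))
```

For every fixed horizon \(n\) the stopped expectation is 0, as the theorem guarantees for bounded stopping times, even though the probability of having reached \(k\) grows with \(n\); the rare long losing runs compensate exactly.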

Example: Consider the random walk on the line. For \(a,b>0\), what is the probability that the random walk will reach \(a\) before it reaches \(-b\)? This is equivalent to asking for the probability that a gambler wins if she intends to quit the first time she is either ahead by an amount \(a\) or behind by an amount \(b\).

Let \(X_i\), \(i=1,2,\ldots\) be the amount won in the \(i\)-th game, \(X_i\in\{-1,1\}\). Then \(Z_i=\sum_{j=1}^{i} X_j\) is a martingale with respect to \(X_1,X_2,\ldots\). The first time \(T\) at which \(Z_T\in\{-b,a\}\) is a stopping time for \(X_1,X_2,\ldots\). We can apply the Optional stopping theorem, because \(|Z_{i+1}-Z_i|=1\) and \(\E[T]<\infty\) (why?). We then get

\begin{align*} \E[Z_T]=\E[Z_0]=0. \end{align*}On the other hand, if \(q\) is the probability of reaching \(a\) before reaching \(-b\), then \(\E[Z_T]=q a + (1-q) (-b)\). Setting this to 0, we get

\begin{align*} q=\frac{b}{a+b}. \end{align*}Example: Consider again the random walk on the line of the previous example. We want to estimate the expected number of steps of the random walk to reach \(a\) or \(-b\). We define the random variable \(Y_t=Z_t^2-t\) and observe that it is a martingale with respect to \(X_1,X_2,\ldots\):