Publications

On Pathologies in KL-Regularized Reinforcement Learning from Expert Demonstrations

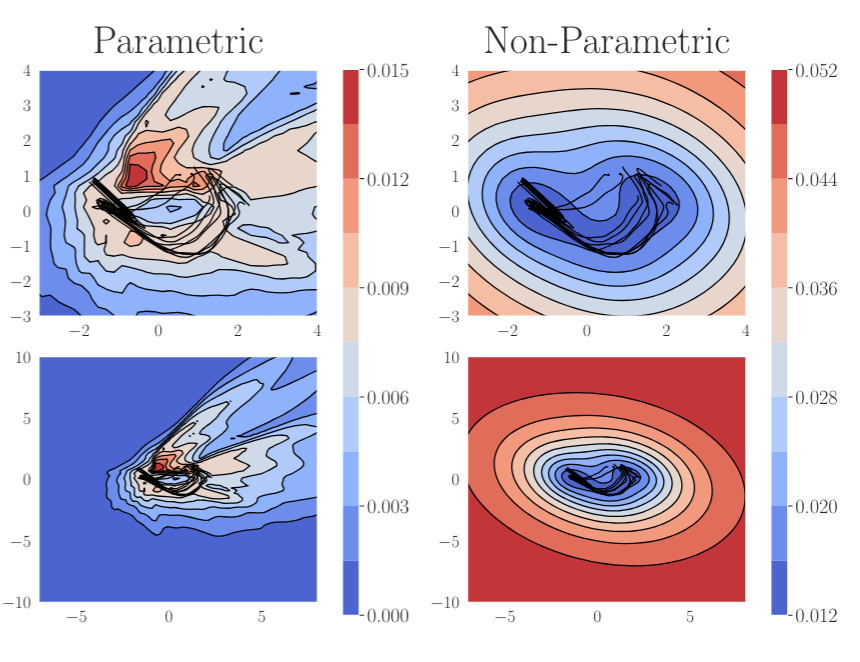

KL-regularized reinforcement learning from expert demonstrations has proved successful in improving the sample efficiency of deep reinforcement learning algorithms, allowing them to be applied to challenging physical real-world tasks. However, we show that KL-regularized reinforcement learning with behavioral policies derived from expert demonstrations suffers from hitherto unrecognized pathological behavior that can lead to slow, unstable, and suboptimal online training. We show empirically that the pathology occurs for commonly chosen behavioral policy classes and demonstrate its impact on sample efficiency and online policy performance. Finally, we show that the pathology can be remedied by specifying non-parametric behavioral policies and that doing so allows KL-regularized RL to significantly outperform state-of-the-art approaches on a variety of challenging locomotion and dexterous hand manipulation tasks.

Tim G. J. Rudner, Cong Lu, Michael A. Osborne, Yarin Gal, Yee Whye Teh

NeurIPS, 2021

ICLR Workshop on Robust and Reliable Machine Learning in the Real World, 2021

[OpenReview] [Website] [BibTex]

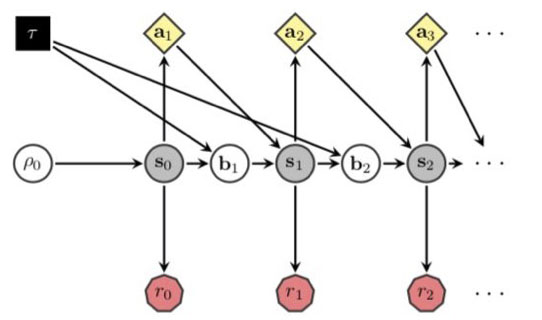

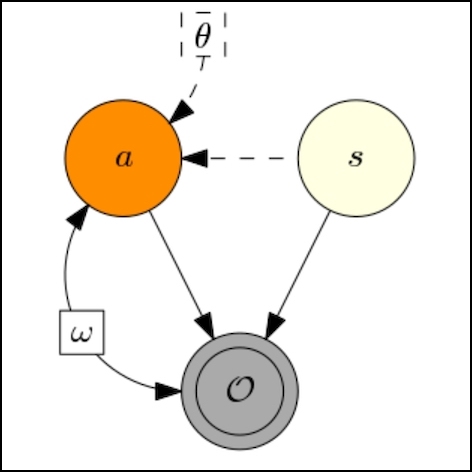

Outcome-Driven Reinforcement Learning via Variational Inference

While reinforcement learning algorithms provide automated acquisition of optimal policies, practical application of such methods requires a number of design decisions, such as manually designing reward functions that not only define the task, but also provide sufficient shaping to accomplish it. In this paper, we view reinforcement learning as inferring policies that achieve desired outcomes, rather than as a problem of maximizing rewards. To solve this inference problem, we establish a novel variational inference formulation that allows us to derive a well-shaped reward function which can be learned directly from environment interactions. From the corresponding variational objective, we also derive a new probabilistic Bellman backup operator and use it to develop an off-policy algorithm to solve goal-directed tasks. We empirically demonstrate that this method eliminates the need to hand-craft reward functions for a suite of diverse manipulation and locomotion tasks and leads to... [full abstract]

Tim G. J. Rudner, Vitchyr H. Pong, Rowan McAllister, Yarin Gal, Sergey Levine

NeurIPS, 2021

NeurIPS Workshop on Deep Reinforcement Learning, 2020

[arXiv] [OpenReview] [BibTex]

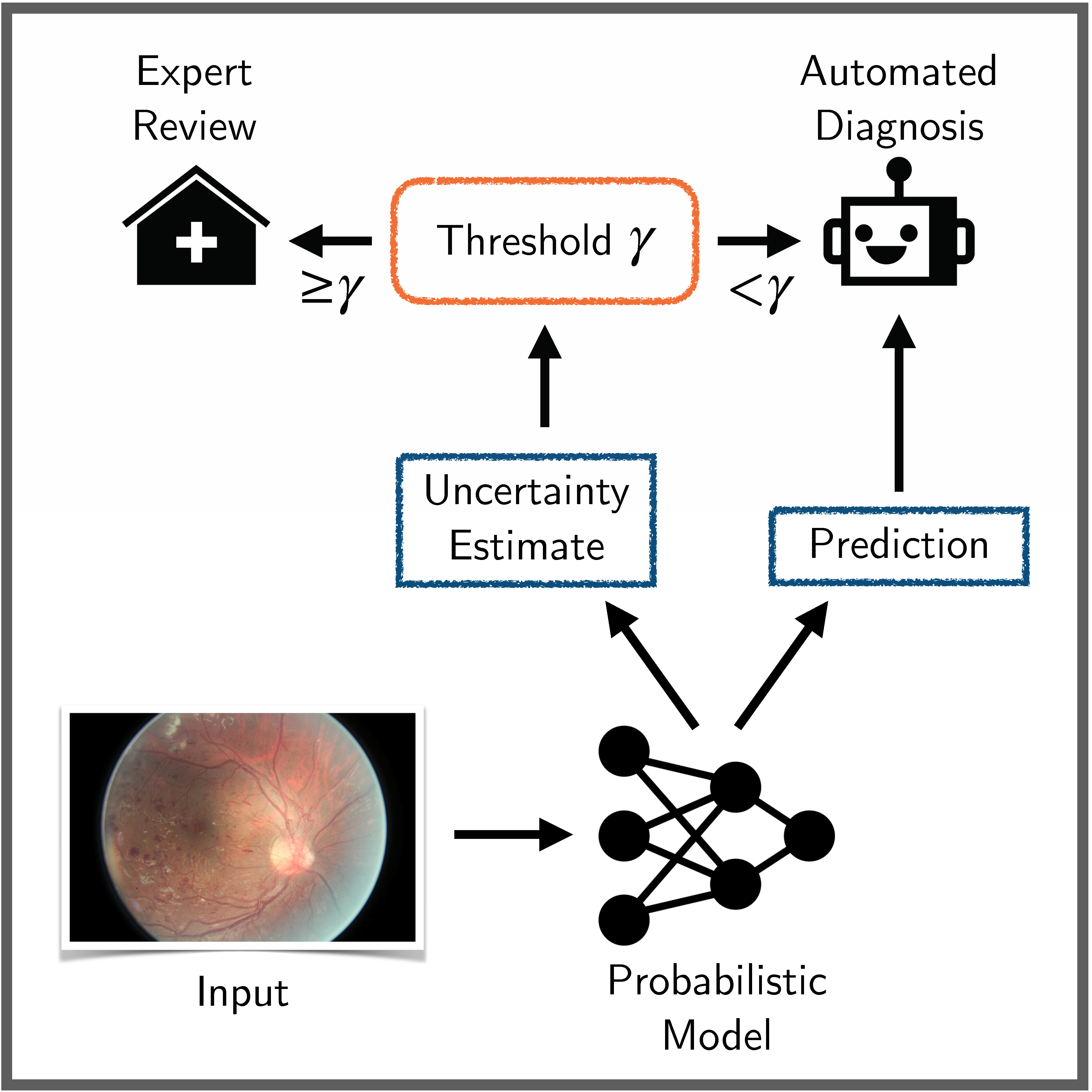

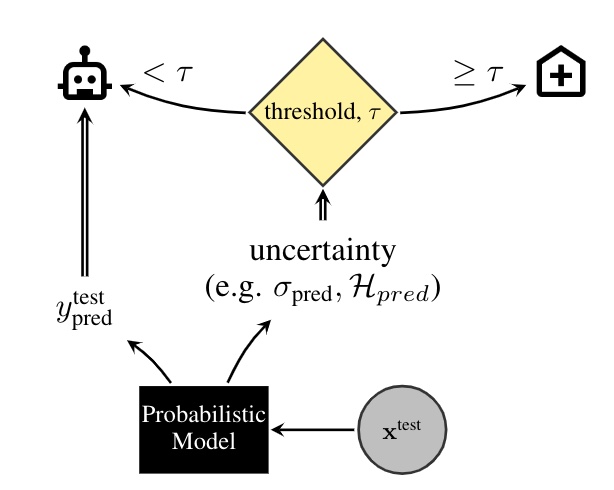

Benchmarking Bayesian Deep Learning on Diabetic Retinopathy Detection Tasks

Bayesian deep learning seeks to equip deep neural networks with the ability to precisely quantify their predictive uncertainty, and has promised to make deep learning more reliable for safety-critical real-world applications. Yet, existing Bayesian deep learning methods fall short of this promise; new methods continue to be evaluated on unrealistic test beds that do not reflect the complexities of downstream real-world tasks that would benefit most from reliable uncertainty quantification. We propose a set of real-world tasks that accurately reflect such complexities and are designed to assess the reliability of predictive models in safety-critical scenarios. Specifically, we curate two publicly available datasets of high-resolution human retina images exhibiting varying degrees of diabetic retinopathy, a medical condition that can lead to blindness, and use them to design a suite of automated diagnosis tasks that require reliable predictive uncertainty quantification. We use th... [full abstract]

Neil Band, Tim G. J. Rudner, Qixuan Feng, Angelos Filos, Zachary Nado, Michael W. Dusenberry, Ghassen Jerfel, Dustin Tran, Yarin Gal

NeurIPS Datasets and Benchmarks Track, 2021

Spotlight Talk, NeurIPS Workshop on Distribution Shifts, 2021

Symposium on Machine Learning for Health (ML4H) Extended Abstract Track, 2021

NeurIPS Workshop on Bayesian Deep Learning, 2021

[OpenReview] [Code] [BibTex]

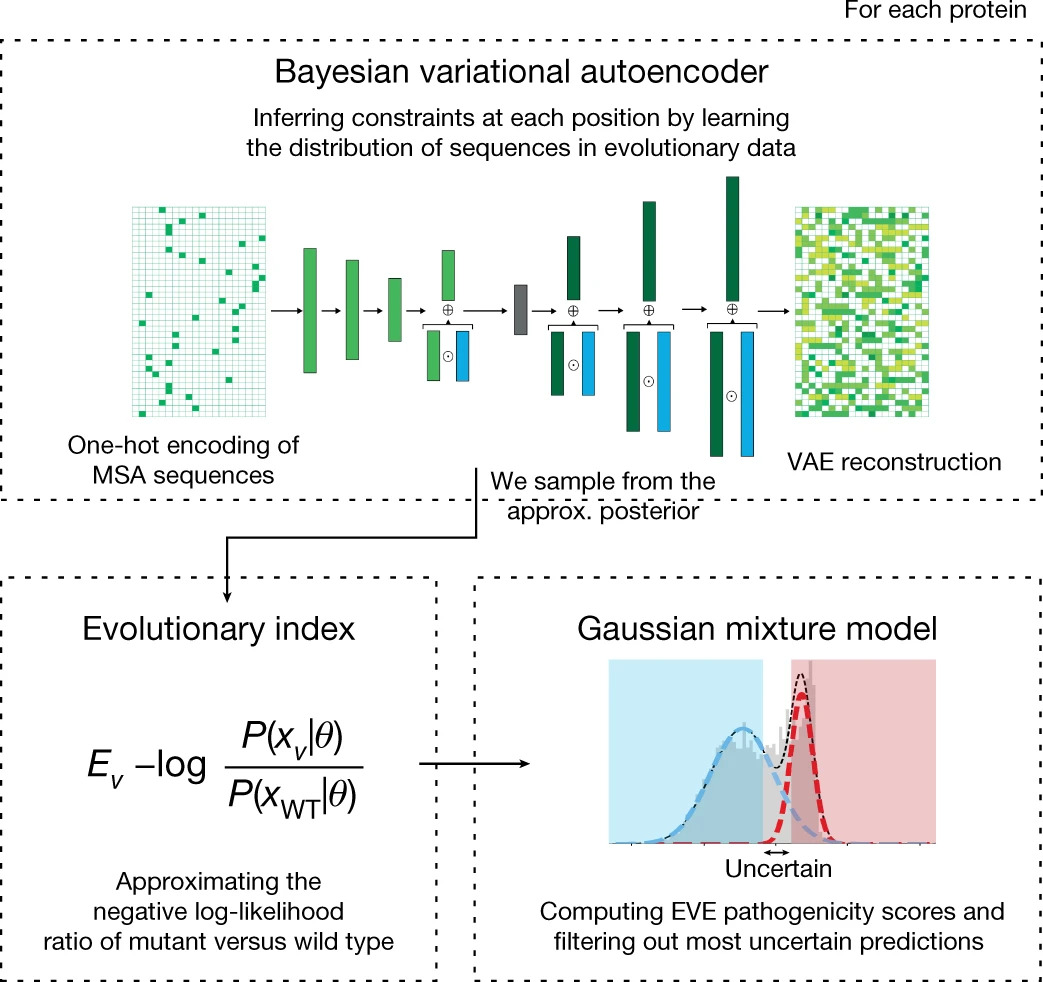

Disease variant prediction with deep generative models of evolutionary data

Quantifying the pathogenicity of protein variants in human disease-related genes would have a marked effect on clinical decisions, yet the overwhelming majority (over 98%) of these variants still have unknown consequences. In principle, computational methods could support the large-scale interpretation of genetic variants. However, state-of-the-art methods have relied on training machine learning models on known disease labels. As these labels are sparse, biased and of variable quality, the resulting models have been considered insufficiently reliable. Here we propose an approach that leverages deep generative models to predict variant pathogenicity without relying on labels. By modelling the distribution of sequence variation across organisms, we implicitly capture constraints on the protein sequences that maintain fitness. Our model EVE (evolutionary model of variant effect) not only outperforms computational approaches that rely on labelled data but also performs on par with,... [full abstract]

Jonathan Frazer, Pascal Notin, Mafalda Dias, Aidan Gomez, Joseph K. Min, Kelly Brock, Yarin Gal, Debora Marks

[Nature]

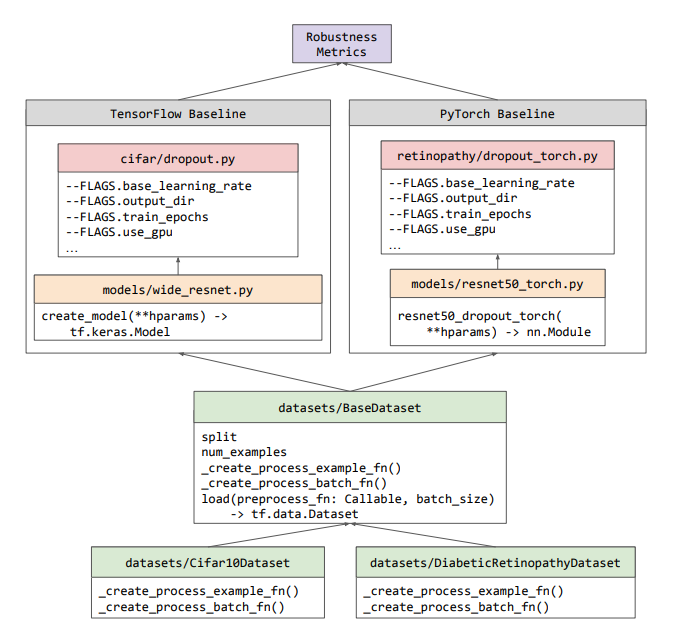

Uncertainty Baselines: Benchmarks for Uncertainty & Robustness in Deep Learning

High-quality estimates of uncertainty and robustness are crucial for numerous real-world applications, especially for deep learning which underlies many deployed ML systems. The ability to compare techniques for improving these estimates is therefore very important for research and practice alike. Yet, competitive comparisons of methods are often lacking due to a range of reasons, including: compute availability for extensive tuning, incorporation of sufficiently many baselines, and concrete documentation for reproducibility. In this paper we introduce Uncertainty Baselines: high-quality implementations of standard and state-of-the-art deep learning methods on a variety of tasks. As of this writing, the collection spans 19 methods across 9 tasks, each with at least 5 metrics. Each baseline is a self-contained experiment pipeline with easily reusable and extendable components. Our goal is to provide immediate starting points for experimentation with new methods or applications. A... [full abstract]

Zachary Nado, Neil Band, Mark Collier, Josip Djolonga, Michael W. Dusenberry, Sebastian Farquhar, Angelos Filos, Marton Havasi, Rodolphe Jenatton, Ghassen Jerfel, Jeremiah Liu, Zelda Mariet, Jeremy Nixon, Shreyas Padhy, Jie Ren, Tim G. J. Rudner, Yeming Wen, Florian Wenzel, Kevin Murphy, D. Sculley, Balaji Lakshminarayanan, Jasper Snoek, Yarin Gal, Dustin Tran

NeurIPS Workshop on Bayesian Deep Learning, 2021

[arXiv] [Code] [Blog Post (Google AI)] [BibTex]

Using Non-Linear Causal Models to Study Aerosol-Cloud Interactions in the Southeast Pacific

Aerosol-cloud interactions include a myriad of effects that all begin when aerosol enters a cloud and acts as cloud condensation nuclei (CCN). An increase in CCN results in a decrease in the mean cloud droplet size ($r_e$). The smaller droplet size leads to brighter, more expansive, and longer lasting clouds that reflect more incoming sunlight, thus cooling the Earth. Globally, aerosol-cloud interactions cool the Earth; however, the strength of the effect is heterogeneous over different meteorological regimes. Understanding how aerosol-cloud interactions evolve as a function of the local environment can help us better understand sources of error in our Earth system models, which currently fail to reproduce the observed relationships. In this work we use recent non-linear, causal machine learning methods to study the heterogeneous effects of aerosols on cloud droplet radius.

Andrew Jesson, Peter Manshausen, Alyson Douglas, Duncan Watson-Parris, Yarin Gal, Philip Stier

Workshops on Tackling Climate Change with Machine Learning, and Causal Inference & Machine Learning: Why now?, NeurIPS 2021

Understanding the effectiveness of government interventions against the resurgence of COVID-19 in Europe

Governments are attempting to control the COVID-19 pandemic with nonpharmaceutical interventions (NPIs). However, the effectiveness of different NPIs at reducing transmission is poorly understood. We gathered chronological data on the implementation of NPIs for several European, and other, countries between January and the end of May 2020. We estimate the effectiveness of NPIs, ranging from limiting gathering sizes, business closures, and closure of educational institutions to stay-at-home orders. To do so, we used a Bayesian hierarchical model that links NPI implementation dates to national case and death counts and supported the results with extensive empirical validation. Closing all educational institutions, limiting gatherings to 10 people or less, and closing face-to-face businesses each reduced transmission considerably. The additional effect of stay-at-home orders was comparatively small.

Mrinank Sharma, Sören Mindermann, Charlie Rogers-Smith, Gavin Leech, Benedict Snodin, Janvi Ahuja, Jonas B. Sandbrink, Joshua Teperowsky Monrad, George Altman, Gurpreet Dhaliwal, Lukas Finnveden, Alexander John Norman, Sebastian B. Oehm, Julia Fabienne Sandkühler, Laurence Aitchison, Tomas Gavenciak, Thomas Mellan, Jan Kulveit, Leonid Chindelevitch, Seth Flaxman, Yarin Gal, Swapnil Mishra, Samir Bhatt, Jan Brauner

Nature Communications (2021) 12: 5820

[Paper]

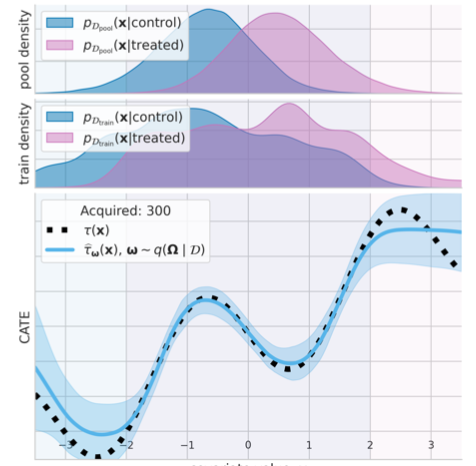

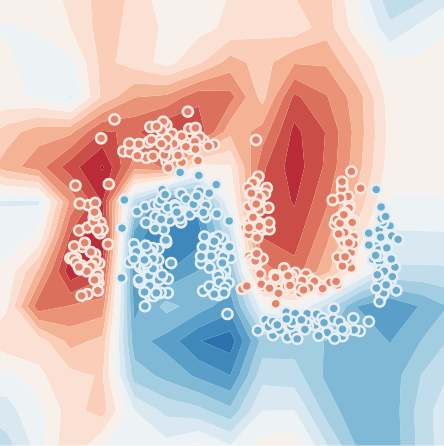

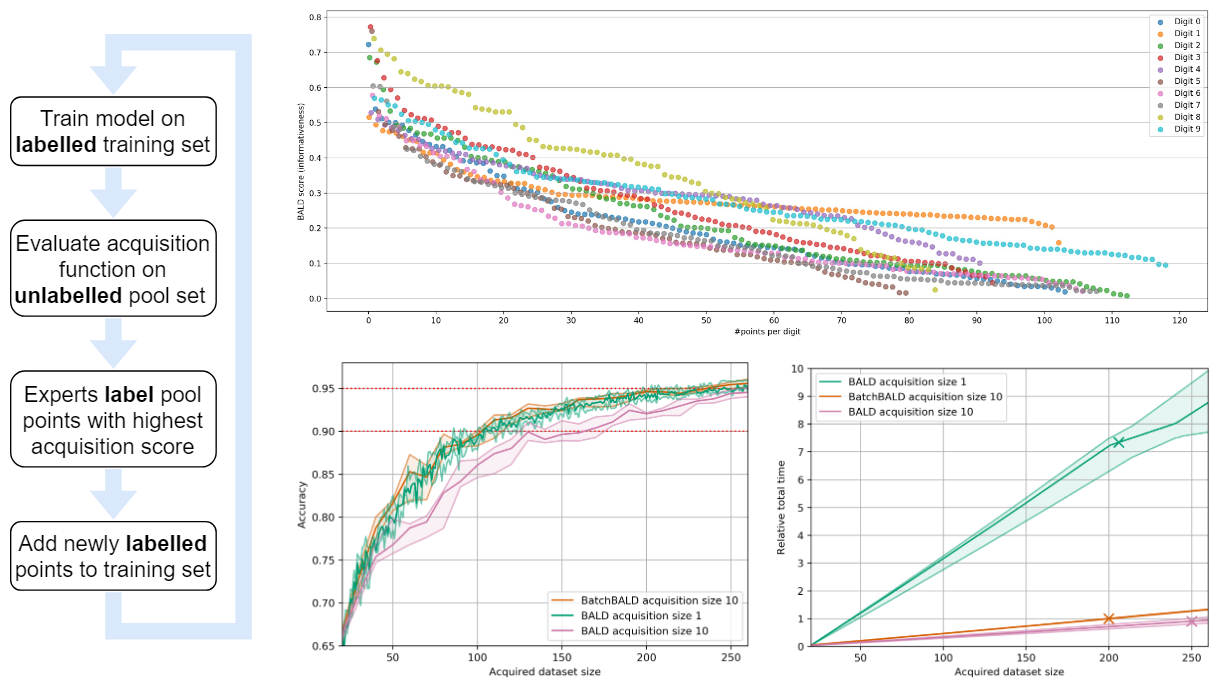

Causal-BALD: Deep Bayesian Active Learning of Outcomes to Infer Treatment-Effects

Estimating personalized treatment effects from high-dimensional observational data is essential in situations where experimental designs are infeasible, unethical or expensive. Existing approaches rely on fitting deep models on outcomes observed for treated and control populations, but when measuring the outcome for an individual is costly (e.g. biopsy) a sample efficient strategy for acquiring outcomes is required. Deep Bayesian active learning provides a framework for efficient data acquisition by selecting points with high uncertainty. However, naive application of existing methods selects training data that is biased toward regions where the treatment effect cannot be identified because there is non-overlapping support between the treated and control populations. To maximize sample efficiency for learning personalized treatment effects, we introduce new acquisition functions grounded in information theory that bias data acquisition towards regions where overlap is satisfied,... [full abstract]

Andrew Jesson, Panagiotis Tigas, Joost van Amersfoort, Andreas Kirsch, Uri Shalit, Yarin Gal

NeurIPS, 2021

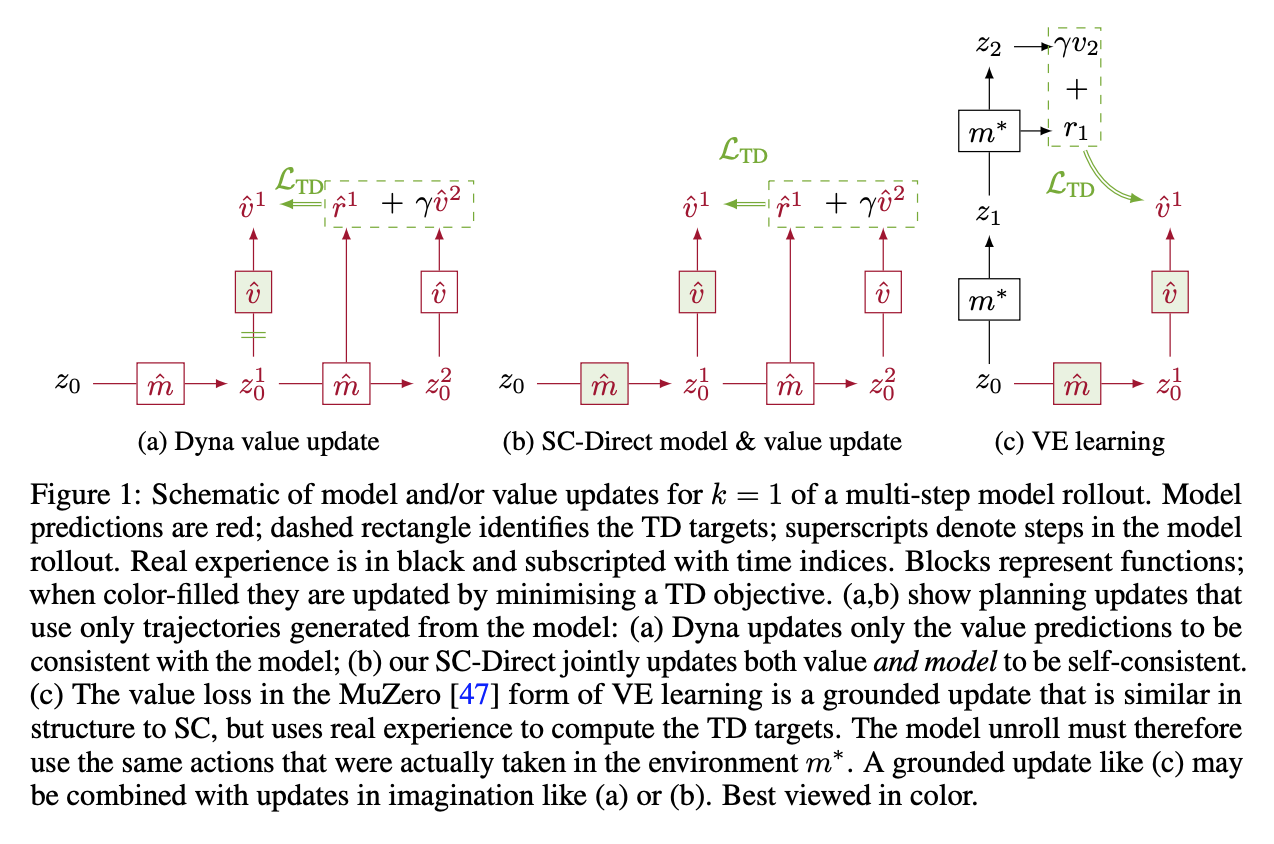

Self-Consistent Models and Values

Learned models of the environment provide reinforcement learning (RL) agents with flexible ways of making predictions about the environment. In particular, models enable planning, i.e. using more computation to improve value functions or policies, without requiring additional environment interactions. In this work, we investigate a way of augmenting model-based RL, by additionally encouraging a learned model and value function to be jointly self-consistent. Our approach differs from classic planning methods such as Dyna, which only update values to be consistent with the model. We propose multiple self-consistency updates, evaluate these in both tabular and function approximation settings, and find that, with appropriate choices, self-consistency helps both policy evaluation and control.

Gregory Farquhar, Kate Baumli, Zita Marinho, Angelos Filos, Matteo Hessel, Hado van Hasselt, David Silver

NeurIPS, 2021

[Paper]

Shifts: A Dataset of Real Distributional Shift Across Multiple Large-Scale Tasks

There has been significant research done on developing methods for improving robustness to distributional shift and uncertainty estimation. In contrast, only limited work has examined developing standard datasets and benchmarks for assessing these approaches. Additionally, most work on uncertainty estimation and robustness has developed new techniques based on small-scale regression or image classification tasks. However, many tasks of practical interest have different modalities, such as tabular data, audio, text, or sensor data, which offer significant challenges involving regression and discrete or continuous structured prediction. Thus, given the current state of the field, a standardized large-scale dataset of tasks across a range of modalities affected by distributional shifts is necessary. This will enable researchers to meaningfully evaluate the plethora of recently developed uncertainty quantification methods, as well as assessment criteria and state-of-the-art baselin... [full abstract]

Andrey Malinin, Neil Band, Alexander Ganshin, German Chesnokov, Yarin Gal, Mark J. F. Gales, Alexey Noskov, Andrey Ploskonosov, Liudmila Prokhorenkova, Ivan Provilkov, Vatsal Raina, Vyas Raina, Denis Roginskiy, Mariya Shmatova, Panagiotis Tigas, Boris Yangel

NeurIPS Datasets and Benchmarks Track, 2021

[arXiv] [BibTex] [Code]

[Competition Website] [Blog Post (OATML)] [Blog Post (Yandex Research)]

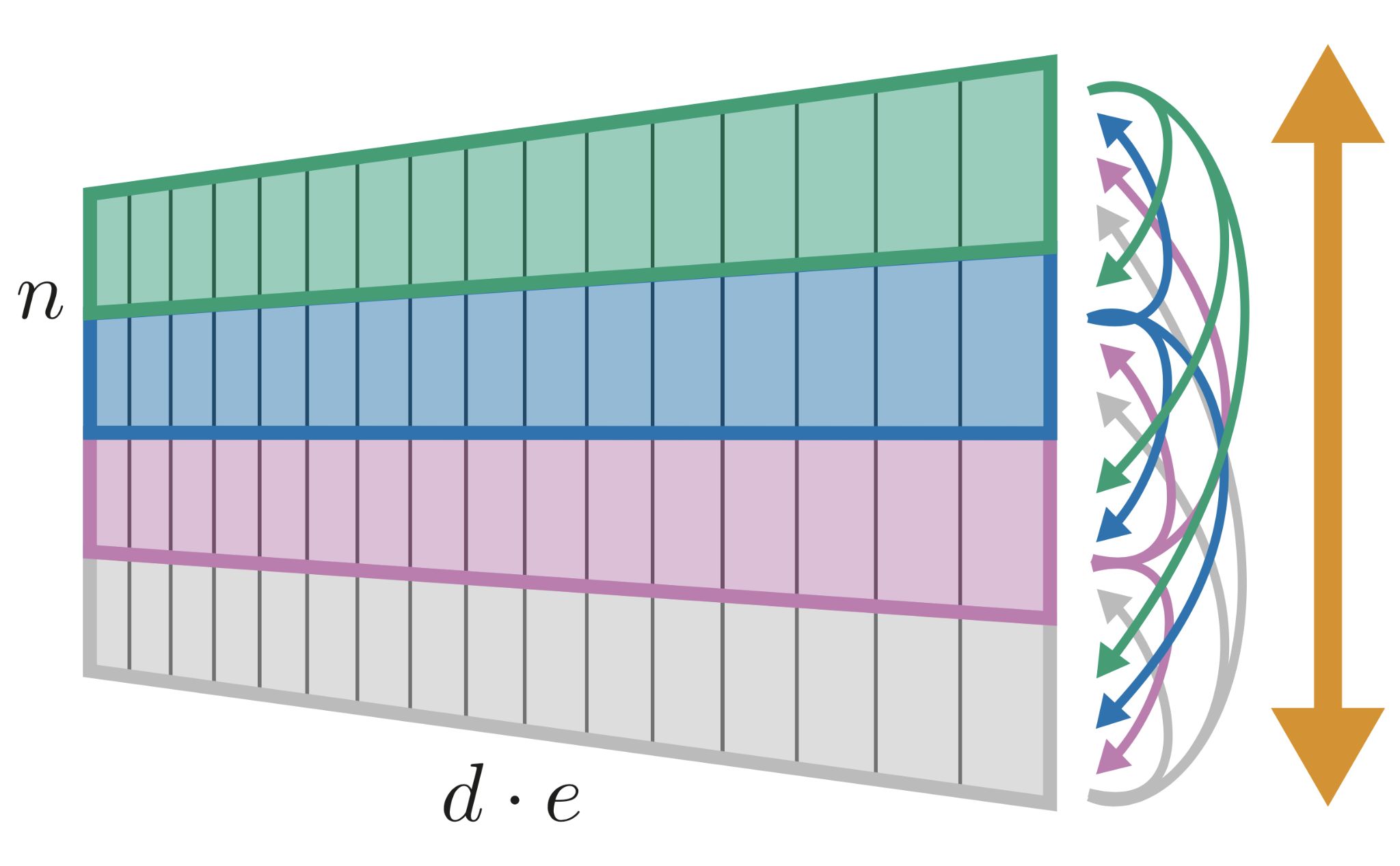

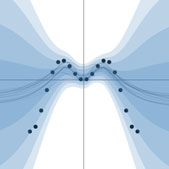

Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning

We challenge a common assumption underlying most supervised deep learning: that a model makes a prediction depending only on its parameters and the features of a single input. To this end, we introduce a general-purpose deep learning architecture that takes as input the entire dataset instead of processing one datapoint at a time. Our approach uses self-attention to reason about relationships between datapoints explicitly, which can be seen as realizing non-parametric models using parametric attention mechanisms. However, unlike conventional non-parametric models, we let the model learn end-to-end from the data how to make use of other datapoints for prediction. Empirically, our models solve cross-datapoint lookup and complex reasoning tasks unsolvable by traditional deep learning models. We show highly competitive results on tabular data, early results on CIFAR-10, and give insight into how the model makes use of the interactions between points.

Jannik Kossen, Neil Band, Clare Lyle, Aidan Gomez, Yarin Gal, Tom Rainforth

NeurIPS, 2021

[arXiv] [Code]

Changing composition of SARS-CoV-2 lineages and rise of Delta variant in England

Background: Since its emergence in Autumn 2020, the SARS-CoV-2 Variant of Concern (VOC) B.1.1.7 (WHO label Alpha) rapidly became the dominant lineage across much of Europe. Simultaneously, several other VOCs were identified globally. Unlike B.1.1.7, some of these VOCs possess mutations thought to confer partial immune escape. Understanding when and how these additional VOCs pose a threat in settings where B.1.1.7 is currently dominant is vital.

Methods: We examine trends in the prevalence of non-B.1.1.7 lineages in London and other English regions using passive-case detection PCR data, cross-sectional community infection surveys, genomic surveillance, and wastewater monitoring. The study period spans from 31st January 2021 to 15th May 2021.

Findings: Across data sources, the percentage of non-B.1.1.7 variants has been increasing since late March 2021. This increase was initially driven by a variety of lineages with immune escape. From mid-April, B.1.617.2 (WHO label... [full abstract]

Swapnil Mishra, Sören Mindermann, Mrinank Sharma, Charles Whittaker, Thomas A. Mellan, Thomas Wilton, Dimitra Klapsa, Ryan Mate, Martin Fritzsche, Maria Zambon, Janvi Ahuja, Adam Howes, Xenia Miscouridou, Guy P. Nason, Oliver Ratmann, Elizaveta Semenova, Gavin Leech, Julia Fabienne Sandkühler, Charlie Rogers-Smith, Michaela Vollmer, H. Juliette T. Unwin, Yarin Gal, Meera Chand, Axel Gandy, Javier Martin, Erik Volz, Neil M. Ferguson, Samir Bhatt, Jan Brauner, Seth Flaxman

EClinicalMedicine (2021), 39:101064

[Paper]

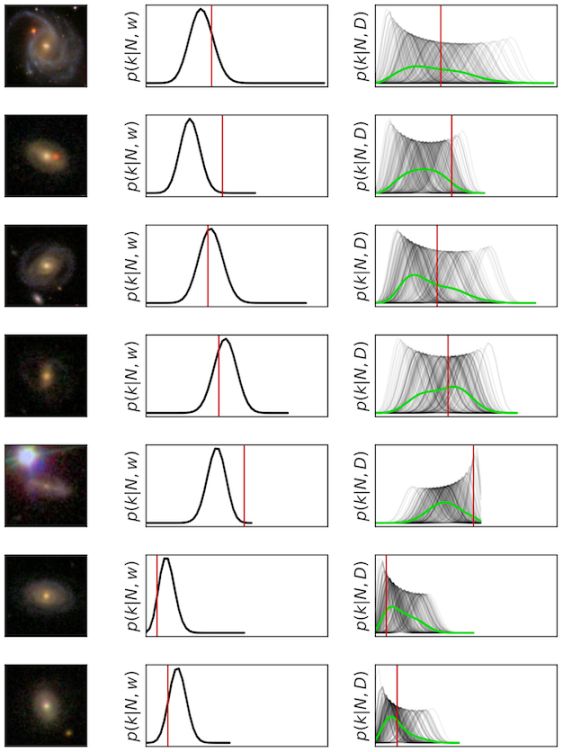

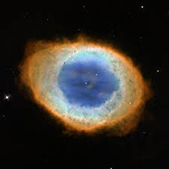

Galaxy Zoo DECaLS: Detailed visual morphology measurements from volunteers and Deep Learning for 314,000 galaxies

We present Galaxy Zoo DECaLS: detailed visual morphological classifications for Dark Energy Camera Legacy Survey images of galaxies within the SDSS DR8 footprint. Deeper DECaLS images (r = 23.6 versus r = 22.2 from SDSS) reveal spiral arms, weak bars, and tidal features not previously visible in SDSS imaging. To best exploit the greater depth of DECaLS images, volunteers select from a new set of answers designed to improve our sensitivity to mergers and bars. Galaxy Zoo volunteers provide 7.5 million individual classifications over 314 000 galaxies. 140 000 galaxies receive at least 30 classifications, sufficient to accurately measure detailed morphology like bars, and the remainder receive approximately 5. All classifications are used to train an ensemble of Bayesian convolutional neural networks (a state-of-the-art deep learning method) to predict posteriors for the detailed morphology of all 314 000 galaxies. We use active learning to focus our volunteer effort on the galaxies... [full abstract]

Mike Walmsley, Chris Lintott, Tobias Géron, Sandor Kruk, Coleman Krawczyk, Kyle W Willett, Steven Bamford, Lee S Kelvin, Lucy Fortson, Yarin Gal, William Keel, Karen L Masters, Vihang Mehta, Brooke D Simmons, Rebecca Smethurst, Lewis Smith, Elisabeth M Baeten, Christine Macmillan

Monthly Notices of the Royal Astronomical Society

[Paper]

Provable Guarantees on the Robustness of Decision Rules to Causal Interventions

Robustness of decision rules to shifts in the data-generating process is crucial to the successful deployment of decision-making systems. Such shifts can be viewed as interventions on a causal graph, which capture (possibly hypothetical) changes in the data-generating process, whether due to natural reasons or by the action of an adversary. We consider causal Bayesian networks and formally define the interventional robustness problem, a novel model-based notion of robustness for decision functions that measures worst-case performance with respect to a set of interventions that denote changes to parameters and/or causal influences. By relying on a tractable representation of Bayesian networks as arithmetic circuits, we provide efficient algorithms for computing guaranteed upper and lower bounds on the interventional robustness probabilities. Experimental results demonstrate that the methods yield useful and interpretable bounds for a range of practical networks, paving the way to... [full abstract]

Benjie Wang, Clare Lyle, Marta Kwiatkowska

IJCAI, 2021

[Paper]

Is the cure really worse than the disease? The health impacts of lockdowns during COVID-19

During the pandemic, there has been ongoing and contentious debate around the impact of restrictive government measures to contain SARS-CoV-2 outbreaks, often termed ‘lockdowns’. We define a ‘lockdown’ as a highly restrictive set of non-pharmaceutical interventions against COVID-19, including either stay-at-home orders or interventions with an equivalent effect on movement in the population through restriction of movement. While necessarily broad, this definition encompasses the strict interventions embraced by many nations during the pandemic, particularly those that have prevented individuals from venturing outside of their homes for most reasons.

The claims often include the idea that the benefits of lockdowns on infection control may be outweighed by the negative impacts on the economy, social structure, education and mental health. A much stronger claim that has still persistently appeared in the media as well as peer-reviewed research concerns only health effects: ... [full abstract]

Gideon Meyerowitz-Katz, Samir Bhatt, Oliver Ratmann, Jan Brauner, Seth Flaxman, Swapnil Mishra, Mrinank Sharma, Sören Mindermann, Valerie Bradley, Michaela Vollmer, Lea Merone, Gavin Yamey

BMJ Global Health, 2021, 6:e006653

[Paper]

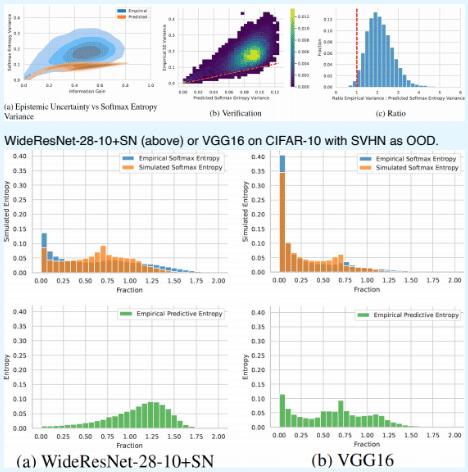

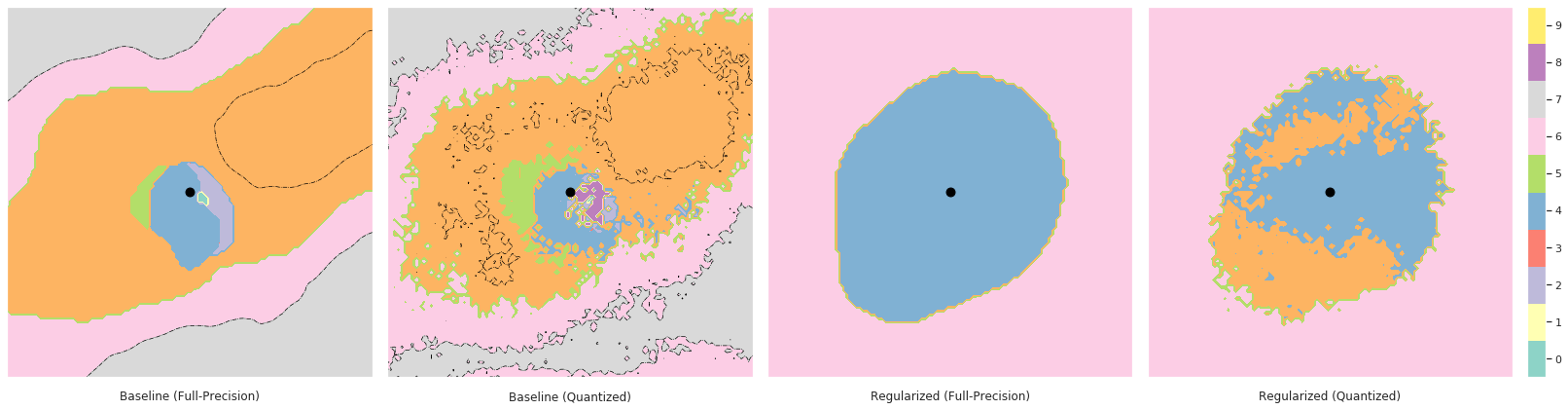

Deterministic Neural Networks with Inductive Biases Capture Epistemic and Aleatoric Uncertainty

While Deep Ensembles are the state-of-the-art for uncertainty prediction, standard softmax neural nets suffer from feature collapse and cannot disentangle aleatoric and epistemic uncertainty. We show that a single softmax neural net with minimal changes can beat epistemic uncertainty predictions of Deep Ensembles and other complex single-forward-pass uncertainty approaches (DUQ and SNGP) while also disentangling uncertainties. Our Deep Deterministic Uncertainty (DDU) is based on three insights: i) predictive entropy confounds aleatoric and epistemic uncertainty, and softmax entropy is inconsistent for OoD points; ii) with appropriate inductive biases, i.e. residual connections and spectral normalization, feature-space density reliably captures epistemic uncertainty; and, iii) density estimation and classification objectives might have different optima. Thus, DDU disentangles aleatoric uncertainty using softmax entropy and epistemic uncertainty using a separate featur... [full abstract]

Jishnu Mukhoti, Andreas Kirsch, Joost van Amersfoort, Philip H.S. Torr, Yarin Gal

Uncertainty & Robustness in Deep Learning Workshop, ICML, 2021

[Paper] [BibTex] [Poster]

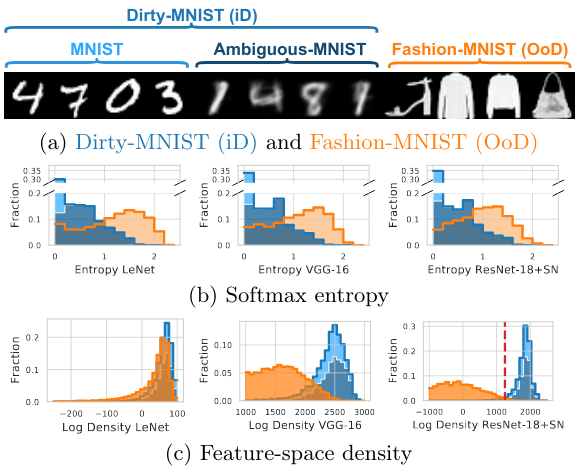

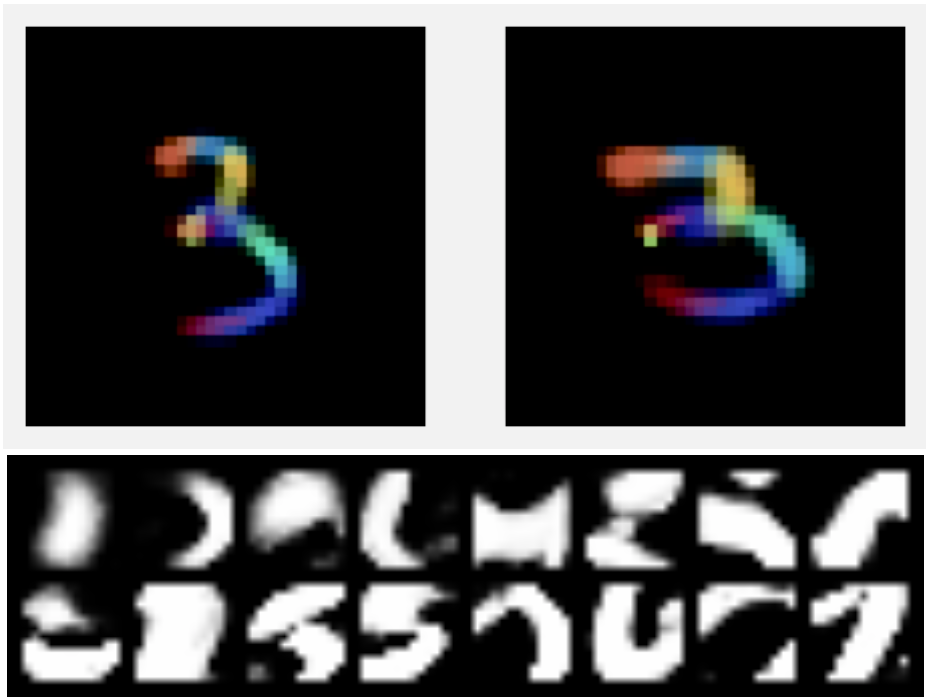

On Pitfalls in OoD Detection: Entropy Considered Harmful

Entropy of a predictive distribution averaged over an ensemble or several posterior weight samples is often used as a metric for Out-of-Distribution (OoD) detection. However, we show that predictive entropy is inappropriate for this task because it mistakes ambiguous in-distribution samples as OoD. This issue remains hidden on curated datasets commonly used for benchmarking. We introduce a new dataset, Dirty-MNIST, with a long tail of ambiguous samples, which exemplifies this problem. Additionally, we look at the entropy of single, deterministic, softmax models and show that it is unreliable exactly for OoD samples. In summary, we caution against using predictive or softmax entropy for OoD detection in practice and introduce several methods to evaluate the quantitative difference between several uncertainty metrics.

Andreas Kirsch, Jishnu Mukhoti, Joost van Amersfoort, Philip H.S. Torr, Yarin Gal

Uncertainty & Robustness in Deep Learning Workshop, ICML, 2021

[Paper] [BibTex] [Poster]

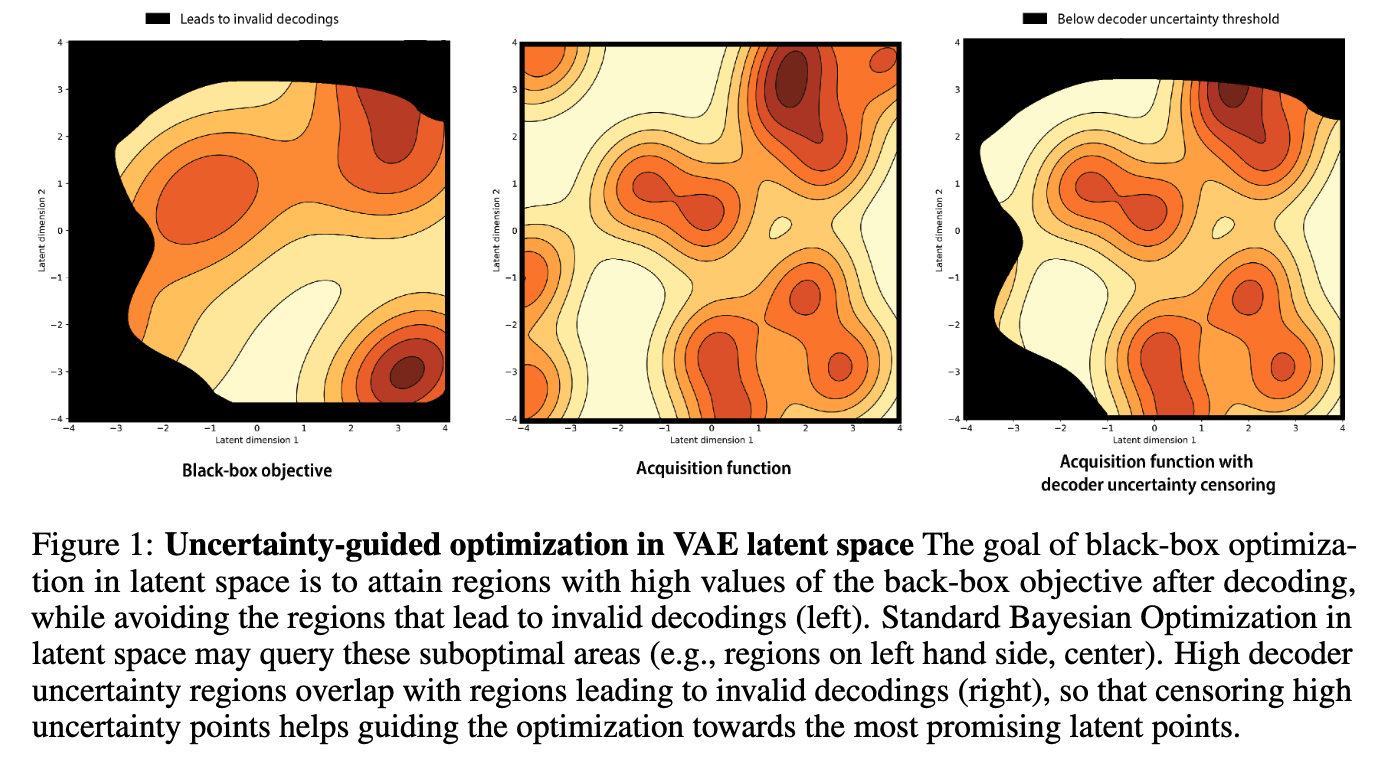

Improving black-box optimization in VAE latent space using decoder uncertainty

Optimization in the latent space of variational autoencoders is a promising approach to generate high-dimensional discrete objects that maximize an expensive black-box property (e.g., drug-likeness in molecular generation, function approximation with arithmetic expressions). However, existing methods lack robustness as they may decide to explore areas of the latent space for which no data was available during training and where the decoder can be unreliable, leading to the generation of unrealistic or invalid objects. We propose to leverage the epistemic uncertainty of the decoder to guide the optimization process. This is not trivial though, as a naive estimation of uncertainty in the high-dimensional and structured settings we consider would result in high estimator variance. To solve this problem, we introduce an importance sampling-based estimator that provides more robust estimates of epistemic uncertainty. Our uncertainty-guided optimization approach does not require modif... [full abstract]

Pascal Notin, José Miguel Hernández-Lobato, Yarin Gal

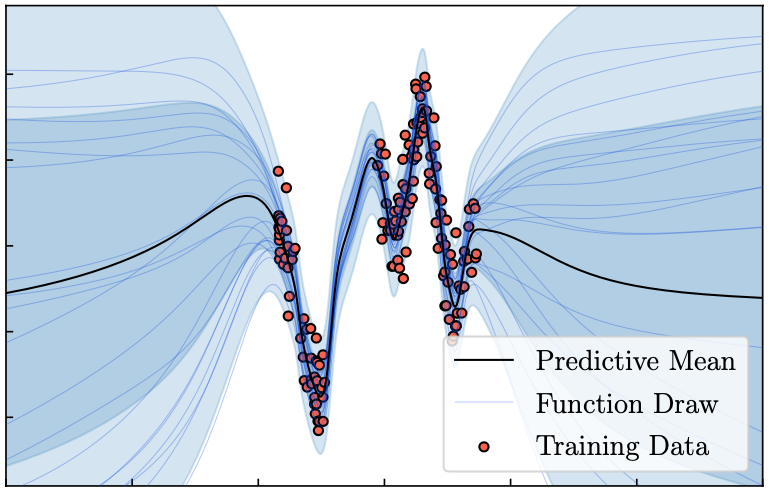

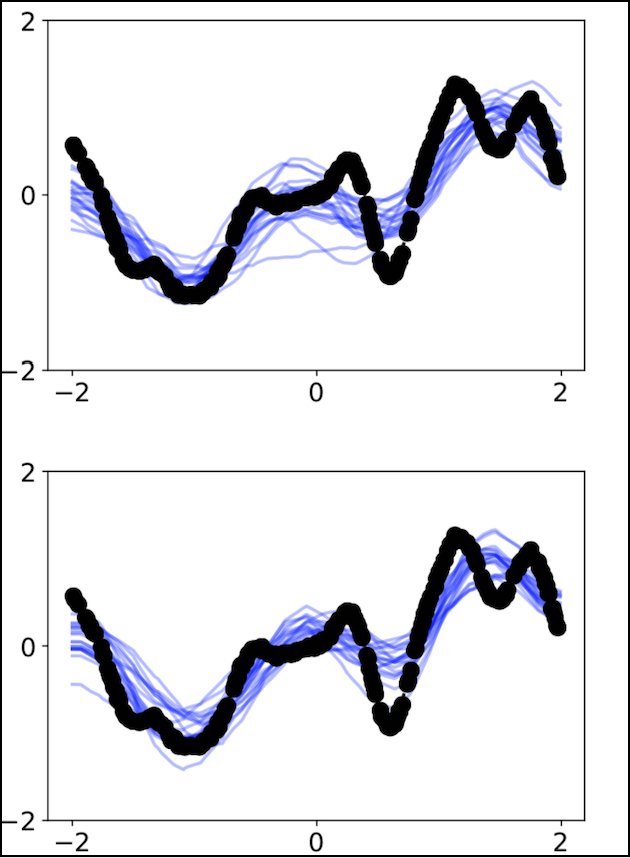

Tractable Function-Space Variational Inference in Bayesian Neural Networks

The most common approach to inference in Bayesian neural networks is to approximate the posterior distribution over the network parameters. However, explicit inference over the network parameters can make it difficult to incorporate meaningful prior information about the prediction task into the inference process. In this paper, we consider an alternative approach. Taking advantage of the fact that Bayesian neural networks define distributions over functions induced by distributions over parameters, we propose a scalable and tractable method for function-space variational inference. We evaluate the proposed method empirically and show that it leads to competitive predictive accuracy and reliable predictive uncertainty estimation on a range of prediction problems and performs well on safety-critical downstream tasks where reliable uncertainty estimation is essential.

Tim G. J. Rudner, Zonghao Chen, Yee Whye Teh, Yarin Gal

Symposium on Advances in Approximate Bayesian Inference, 2021

ICML Workshop on Uncertainty & Robustness in Deep Learning, 2021

[Preprint] [BibTex]

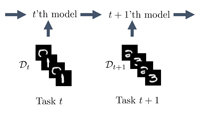

Continual Learning via Function-Space Variational Inference

Continual learning is the process of developing new abilities while retaining existing ones. Sequential Bayesian inference over predictive functions is a natural framework for doing this, but applying it to deep neural networks is challenging in practice. To address the drawbacks of existing approaches, we formulate continual learning as sequential function-space variational inference. From this formulation, we derive a tractable variational objective that explicitly encourages a neural network to fit data from new tasks while also matching posterior distributions over functions inferred from previous tasks. Crucially, the objective is expressed purely in terms of predictive functions. This way, the parameters of the neural network can vary widely during training, allowing easier adaptation to new tasks than is possible with techniques that directly regularize parameters. We demonstrate that, across a range of task sequences, neural networks trained via sequential function-space... [full abstract]

Tim G. J. Rudner, Freddie Bickford Smith, Qixuan Feng, Yee Whye Teh, Yarin Gal

ICML Workshop on Theory and Foundations of Continual Learning, 2021

ICML Workshop on Subset Selection in Machine Learning, From Theory to Applications, 2021

[Preprint] [BibTex]

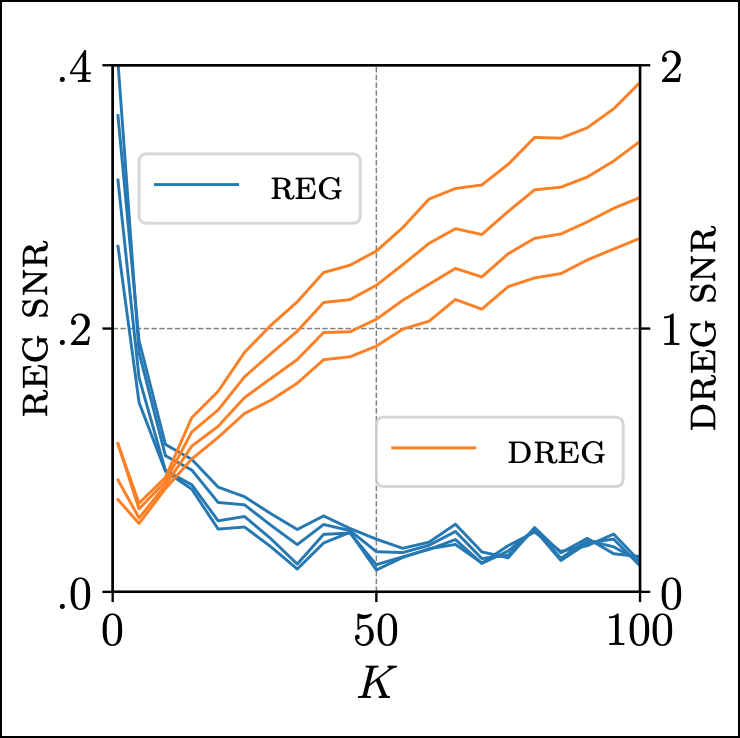

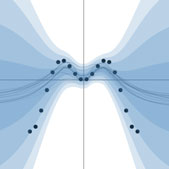

On Signal-to-Noise Ratio Issues in Variational Inference for Deep Gaussian Processes

We show that the gradient estimates used in training Deep Gaussian Processes (DGPs) with importance-weighted variational inference are susceptible to signal-to-noise ratio (SNR) issues. Specifically, we show both theoretically and empirically that the SNR of the gradient estimates for the latent variable’s variational parameters decreases as the number of importance samples increases. As a result, these gradient estimates degrade to pure noise if the number of importance samples is too large. To address this pathology, we show how doubly-reparameterized gradient estimators, originally proposed for training variational autoencoders, can be adapted to the DGP setting and that the resultant estimators completely remedy the SNR issue, thereby providing more reliable training. Finally, we demonstrate that our fix can lead to improvements in the predictive performance of the model’s predictive posterior.

Tim G. J. Rudner, Oscar Key, Yarin Gal, Tom Rainforth

ICML, 2021

[arXiv] [Code] [BibTex]

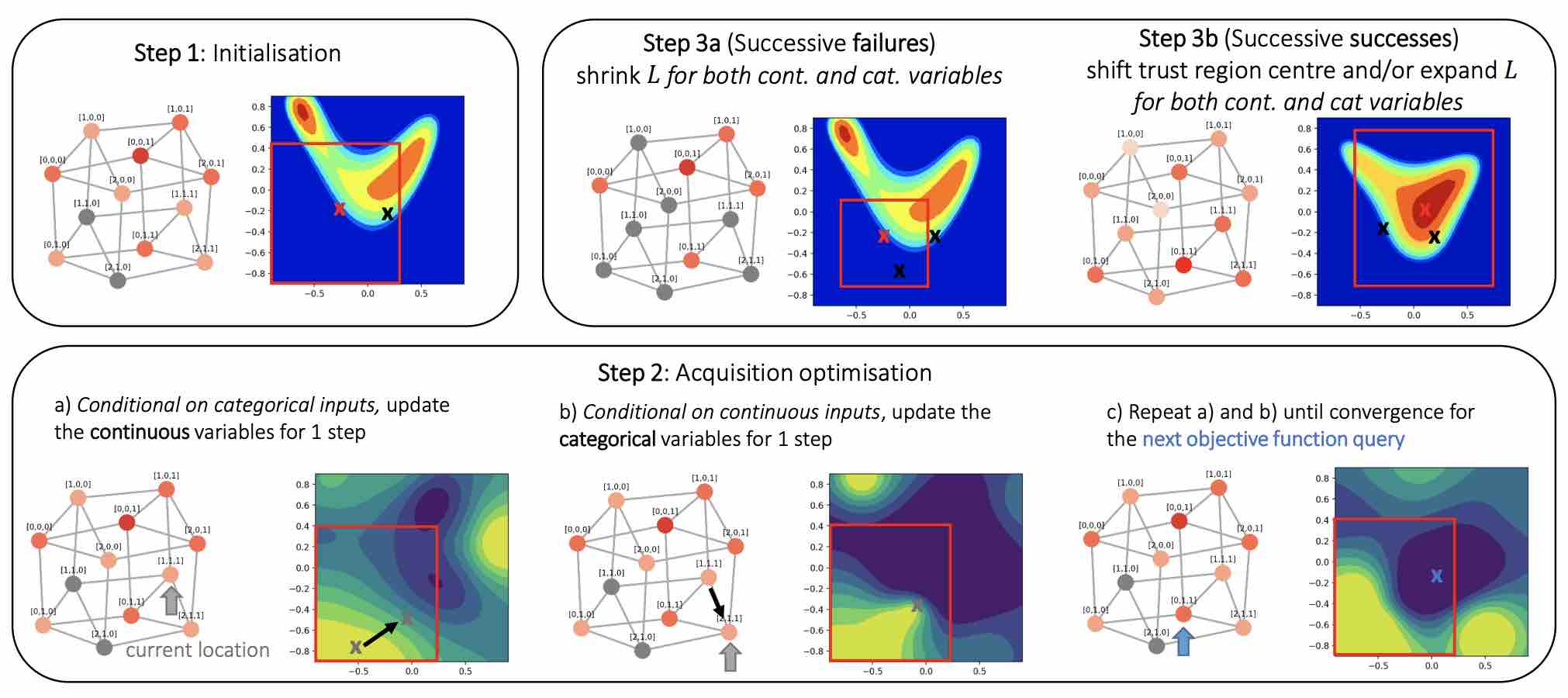

Think Global and Act Local: Bayesian Optimisation over High-Dimensional Categorical and Mixed Search Spaces

High-dimensional black-box optimisation remains an important yet notoriously challenging problem. Despite the success of Bayesian optimisation methods on continuous domains, domains that are categorical, or that mix continuous and categorical variables, remain challenging. We propose a novel solution – we combine local optimisation with a tailored kernel design, effectively handling high-dimensional categorical and mixed search spaces, whilst retaining sample efficiency. We further derive a convergence guarantee for the proposed approach. Finally, we demonstrate empirically that our method outperforms the current baselines on a variety of synthetic and real-world tasks in terms of performance, computational costs, or both.

Xingchen Wan, Vu Nguyen, Huong Ha, Binxin (Robin) Ru, Cong Lu, Michael A. Osborne

ICML, 2021

[Paper]

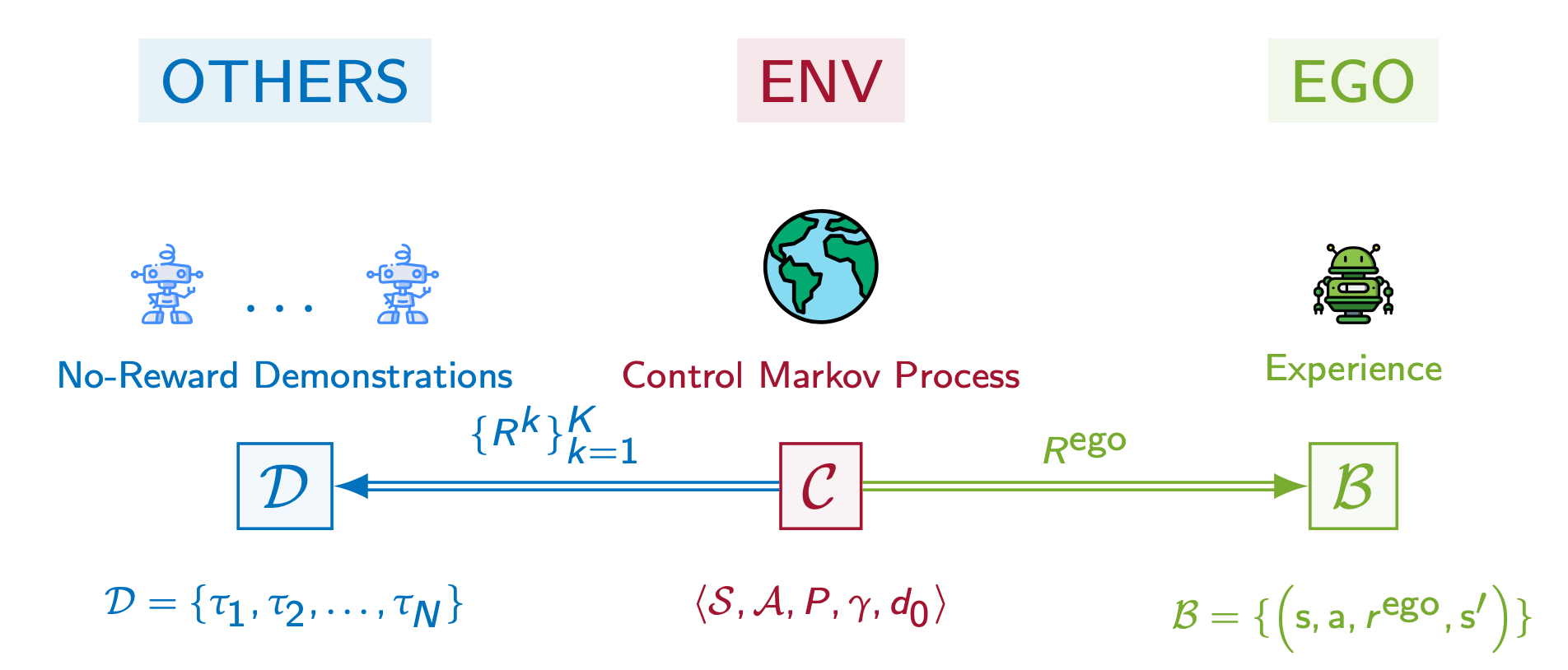

PsiPhi-Learning: Reinforcement Learning with Demonstrations using Successor Features and Inverse Temporal Difference Learning

We study reinforcement learning (RL) with no-reward demonstrations, a setting in which an RL agent has access to additional data from the interaction of other agents with the same environment. However, it has no access to the rewards or goals of these agents, and their objectives and levels of expertise may vary widely. These assumptions are common in multi-agent settings, such as autonomous driving. To effectively use this data, we turn to the framework of successor features. This allows us to disentangle shared features and dynamics of the environment from agent-specific rewards and policies. We propose a multi-task inverse reinforcement learning (IRL) algorithm, called inverse temporal difference learning (ITD), that learns shared state features, alongside per-agent successor features and preference vectors, purely from demonstrations without reward labels. We further show how to seamlessly integrate ITD with learning from online environment interactions, arriving at... [full abstract]

Angelos Filos, Clare Lyle, Yarin Gal, Sergey Levine, Natasha Jaques, Gregory Farquhar

ICML, 2021 (long talk)

[Paper]

Active Testing: Sample-Efficient Model Evaluation

We introduce active testing: a new framework for sample-efficient model evaluation. While approaches like active learning reduce the number of labels needed for model training, existing literature largely ignores the cost of labeling test data, typically unrealistically assuming large test sets for model evaluation. This creates a disconnect to real applications where test labels are important and just as expensive, e.g. for optimizing hyperparameters. Active testing addresses this by carefully selecting the test points to label, ensuring model evaluation is sample-efficient. To this end, we derive theoretically-grounded and intuitive acquisition strategies that are specifically tailored to the goals of active testing, noting these are distinct to those of active learning. Actively selecting labels introduces a bias; we show how to remove that bias while reducing the variance of the estimator at the same time. Active testing is easy to implement, effective, and can be applied to... [full abstract]

Jannik Kossen, Sebastian Farquhar, Yarin Gal, Tom Rainforth

ICML, 2021

[PMLR] [arXiv]

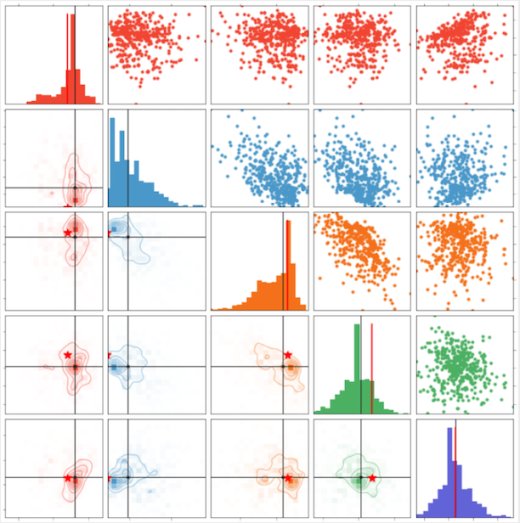

Quantifying Ignorance in Individual-Level Causal-Effect Estimates under Hidden Confounding

We study the problem of learning conditional average treatment effects (CATE) from high-dimensional, observational data with unobserved confounders. Unobserved confounders introduce ignorance – a level of unidentifiability – about an individual’s response to treatment by inducing bias in CATE estimates. We present a new parametric interval estimator suited for high-dimensional data, that estimates a range of possible CATE values when given a predefined bound on the level of hidden confounding. Further, previous interval estimators do not account for ignorance about the CATE stemming from samples that may be underrepresented in the original study, or samples that violate the overlap assumption. Our novel interval estimator also incorporates model uncertainty so that practitioners can be made aware of out-of-distribution data. We prove that our estimator converges to tight bounds on CATE when there may be unobserved confounding, and assess it using semi-synthetic, high-dimensional... [full abstract]

Andrew Jesson, Sören Mindermann, Yarin Gal, Uri Shalit

ICML, 2021

[arXiv]

Deep Adaptive Design: Amortizing Sequential Bayesian Experimental Design

We introduce Deep Adaptive Design (DAD), a general method for amortizing the cost of performing sequential adaptive experiments using the framework of Bayesian optimal experimental design (BOED). Traditional sequential BOED approaches require substantial computational time at each stage of the experiment. This makes them unsuitable for most real-world applications, where decisions must typically be made quickly. DAD addresses this restriction by learning an amortized design network upfront and then using this to rapidly run (multiple) adaptive experiments at deployment time. This network takes as input the data from previous steps, and outputs the next design using a single forward pass; these design decisions can be made in milliseconds during the live experiment. To train the network, we introduce contrastive information bounds that are suitable objectives for the sequential setting, and propose a customized network architecture that exploits key symmetries. We demonstrate tha... [full abstract]

Adam Foster, Desi R. Ivanova, Ilyas Malik, Tom Rainforth

ICML, 2021

[arXiv]

Probabilistic Programs with Stochastic Conditioning

We tackle the problem of conditioning probabilistic programs on distributions of observable variables. Probabilistic programs are usually conditioned on samples from the joint data distribution, which we refer to as deterministic conditioning. However, in many real-life scenarios, the observations are given as marginal distributions, summary statistics, or samplers. Conventional probabilistic programming systems lack adequate means for modeling and inference in such scenarios. We propose a generalization of deterministic conditioning to stochastic conditioning, that is, conditioning on the marginal distribution of a variable taking a particular form. To this end, we first define the formal notion of stochastic conditioning and discuss its key properties. We then show how to perform inference in the presence of stochastic conditioning. We demonstrate potential usage of stochastic conditioning on several case studies which involve various kinds of stochastic conditioning and are d... [full abstract]

David Tolpin, Yuan Zhou, Tom Rainforth, Hongseok Yang

ICML, 2021

[arXiv]

Kessler: A machine learning library for spacecraft collision avoidance

As megaconstellations are launched and the space sector grows, space debris pollution is posing an increasing threat to operational spacecraft. Low Earth orbit is a junkyard of dead satellites, rocket bodies, shrapnel, and other debris that travel at very high speed in an uncontrolled manner. Collisions at orbital speeds can generate fragments and potentially trigger a cascade of more collisions endangering the whole population, a scenario known since the late 1970s as the Kessler syndrome. In this work we present Kessler: an open-source Python package for machine learning (ML) applied to collision avoidance. Kessler provides functionalities to import and export conjunction data messages (CDMs) in their standard format and predict the evolution of conjunction events based on explainable ML models. In Kessler we provide Bayesian recurrent neural networks that can be trained with existing collections of CDM data and then deployed in order to predict the contents of future CDMs in... [full abstract]

Giacomo Acciarini, Francesco Pinto, Francesca Letizia, José A. Martinez-Heras, Klaus Merz, Christopher Bridges, Atılım Güneş Baydin

8th European Conference on Space Debris

[Paper]

Understanding the effectiveness of government interventions in Europe's second wave of COVID-19

As European governments face resurging waves of COVID-19, non-pharmaceutical interventions (NPIs) continue to be the primary tool for infection control. However, updated estimates of their relative effectiveness have been absent for Europe’s second wave, largely due to a lack of collated data that considers the increased subnational variation and diversity of NPIs. We collect the largest dataset of NPI implementation dates in Europe, spanning 114 subnational areas in 7 countries, with a systematic categorisation of interventions tailored to the second wave. Using a hierarchical Bayesian transmission model, we estimate the effectiveness of 17 NPIs from local case and death data. We manually validate the data, address limitations in modelling from previous studies, and extensively test the robustness of our estimates. The combined effect of all NPIs was smaller relative to estimates from the first half of 2020, indicating the strong influence of safety measures and individual prot... [full abstract]

Mrinank Sharma, Sören Mindermann, Charlie Rogers-Smith, Gavin Leech, Benedict Snodin, Janvi Ahuja, Jonas B. Sandbrink, Joshua Teperowski Monrad, George Altman, Gurpreet Dhaliwal, Lukas Finnveden, Alexander John Norman, Sebastian B. Oehm, Julia Fabienne Sandkühler, Thomas Mellan, Jan Kulveit, Leonid Chindelevitch, Seth Flaxman, Yarin Gal, Swapnil Mishra, Jan Brauner, Samir Bhatt

MedRxiv

[Paper]

Generating Interpretable Counterfactual Explanations By Implicit Minimisation of Epistemic and Aleatoric Uncertainties

Counterfactual explanations (CEs) are a practical tool for demonstrating why machine learning classifiers make particular decisions. For CEs to be useful, it is important that they are easy for users to interpret. Existing methods for generating interpretable CEs rely on auxiliary generative models, which may not be suitable for complex datasets, and incur engineering overhead. We introduce a simple and fast method for generating interpretable CEs in a white-box setting without an auxiliary model, by using the predictive uncertainty of the classifier. Our experiments show that our proposed algorithm generates more interpretable CEs, according to IM1 scores, than existing methods. Additionally, our approach allows us to estimate the uncertainty of a CE, which may be important in safety-critical applications, such as those in the medical domain.

Lisa Schut, Oscar Key, Rory McGrath, Luca Costabello, Bogdan Sacaleanu, Medb Corcoran, Yarin Gal

AISTATS, 2021

[Paper] [Code]

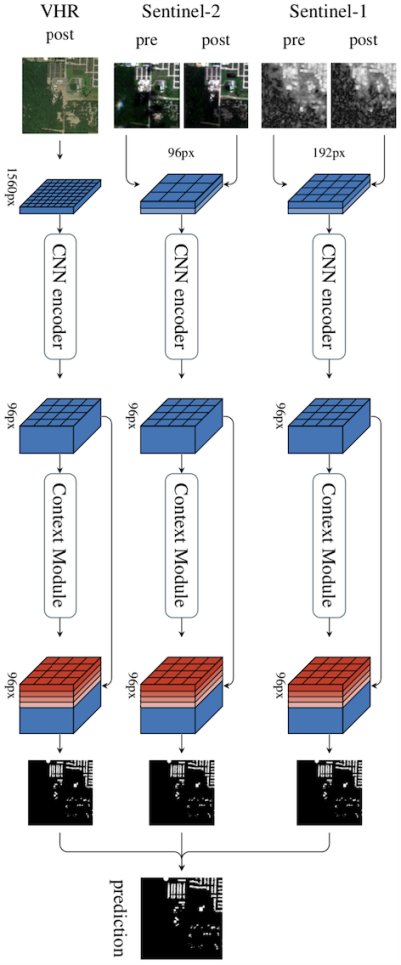

Towards global flood mapping onboard low cost satellites with machine learning

Spaceborne Earth observation is a key technology for flood response, offering valuable information to decision makers on the ground. Very large constellations of small nanosatellites, 'CubeSats', are a promising solution to reduce revisit time in disaster areas from days to hours. However, data transmission to ground receivers is limited by constraints on power and bandwidth of CubeSats. Onboard processing offers a solution to decrease the amount of data to transmit by reducing large sensor images to smaller data products. The ESA’s recent PhiSat-1 mission aims to facilitate the demonstration of this concept, providing the hardware capability to perform onboard processing by including a power-constrained machine learning accelerator and the software to run custom applications. This work demonstrates a flood segmentation algorithm that produces flood masks to be transmitted instead of the raw images, while running efficiently on the accelerator aboard the PhiSat-1. Our models ar... [full abstract]

Gonzalo Mateo-Garcia, Joshua Veitch-Michaelis, Lewis Smith, Silviu Oprea, Guy Schumann, Yarin Gal, Atılım Güneş Baydin, Dietmar Backes

Nature Scientific Reports, 2021

[Paper]

COIN: COmpression with Implicit Neural representations

We propose a new simple approach for image compression: instead of storing the RGB values for each pixel of an image, we store the weights of a neural network overfitted to the image. Specifically, to encode an image, we fit it with an MLP which maps pixel locations to RGB values. We then quantize and store the weights of this MLP as a code for the image. To decode the image, we simply evaluate the MLP at every pixel location. We found that this simple approach outperforms JPEG at low bit-rates, even without entropy coding or learning a distribution over weights. While our framework is not yet competitive with state of the art compression methods, we show that it has various attractive properties which could make it a viable alternative to other neural data compression approaches.

Emilien Dupont, Adam Goliński, Milad Alizadeh, Yee Whye Teh, Arnaud Doucet

Neural Compression Workshop, ICLR 2021 (Spotlight)

[arXiv]
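
The encode/decode loop described in the COIN abstract above can be sketched in a few lines of NumPy. Everything specific here is an assumption made for illustration: the synthetic 32×32 image, the one-hidden-layer tanh MLP, plain gradient descent, and float16 casting as the quantizer. None of it is taken from the paper's actual architecture or quantization scheme.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy 32x32 RGB image made of smooth gradients, values in [0, 1].
H = W = 32
ys, xs = np.meshgrid(np.linspace(-1, 1, H), np.linspace(-1, 1, W), indexing="ij")
coords = np.stack([ys.ravel(), xs.ravel()], axis=1)            # (H*W, 2) pixel locations
image = np.stack([(ys + 1) / 2, (xs + 1) / 2, (ys * xs + 1) / 2], axis=-1)
target = image.reshape(-1, 3)                                  # (H*W, 3) RGB values

hidden = 16
params = {
    "W1": rng.normal(0.0, 1.0, (2, hidden)), "b1": np.zeros(hidden),
    "W2": rng.normal(0.0, 0.1, (hidden, 3)), "b2": np.full(3, 0.5),
}

def decode(p, xy):
    """Evaluate the MLP at pixel locations to obtain RGB values."""
    return np.tanh(xy @ p["W1"] + p["b1"]) @ p["W2"] + p["b2"]

def mse(p):
    return float(np.mean((decode(p, coords) - target) ** 2))

initial_loss = mse(params)

# Encoding = overfitting the MLP to this single image (full-batch gradient descent).
lr = 0.05
for _ in range(2000):
    h = np.tanh(coords @ params["W1"] + params["b1"])
    err = (h @ params["W2"] + params["b2"] - target) * (2.0 / target.size)
    grad_h = (err @ params["W2"].T) * (1.0 - h ** 2)
    grads = {"W2": h.T @ err, "b2": err.sum(axis=0),
             "W1": coords.T @ grad_h, "b1": grad_h.sum(axis=0)}
    for name in params:
        params[name] -= lr * grads[name]

fitted_loss = mse(params)

# The stored code is the quantized weights (float16 here, as an assumption).
code = {name: value.astype(np.float16) for name, value in params.items()}
bits_per_pixel = 16 * sum(v.size for v in code.values()) / (H * W)

# Decoding = evaluating the dequantized MLP at every pixel location.
restored = {name: value.astype(np.float64) for name, value in code.items()}
reconstruction = decode(restored, coords).reshape(H, W, 3)
```

With 99 weights stored at 16 bits each, this toy code costs roughly 1.5 bits per pixel, compared with 24 bits per pixel for raw 8-bit RGB. The reconstruction quality of such a tiny network on a real photograph would of course be far worse than on this smooth synthetic image.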

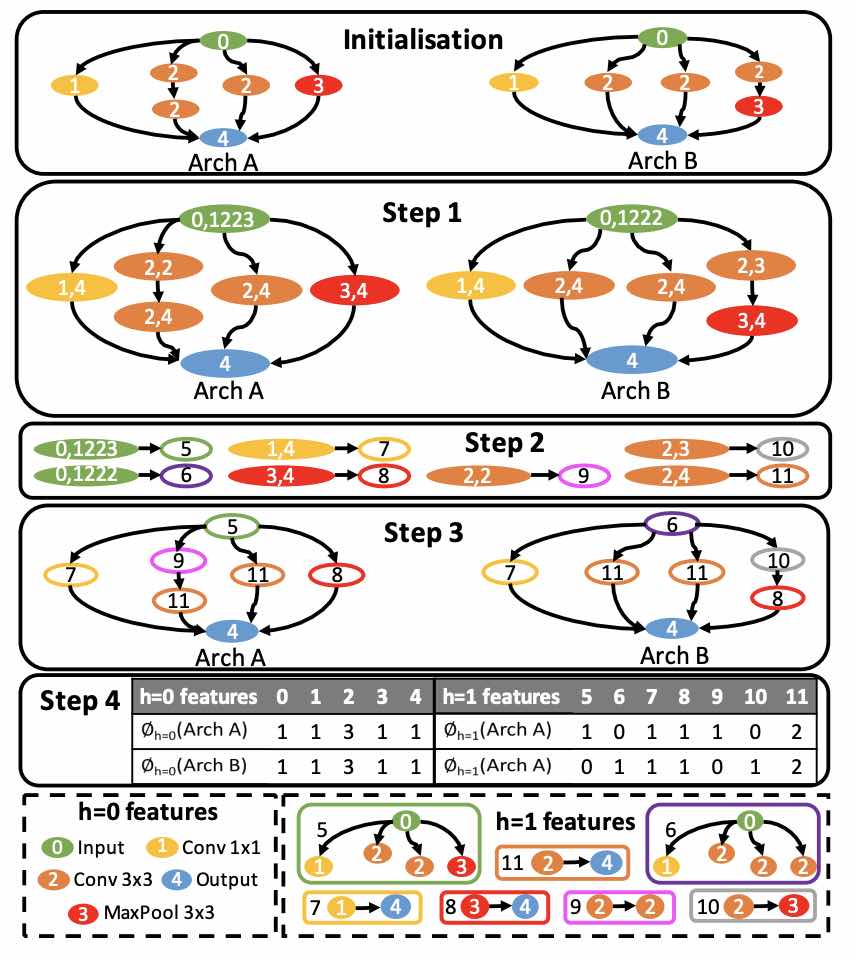

Interpretable Neural Architecture Search via Bayesian Optimisation with Weisfeiler-Lehman Kernels

Current neural architecture search (NAS) strategies focus only on finding a single, good, architecture. They offer little insight into why a specific network is performing well, or how we should modify the architecture if we want further improvements. We propose a Bayesian optimisation (BO) approach for NAS that combines the Weisfeiler-Lehman graph kernel with a Gaussian process surrogate. Our method not only optimises the architecture in a highly data-efficient manner, but also affords interpretability by discovering useful network features and their corresponding impact on the network performance. Moreover, our method is capable of capturing the topological structures of the architectures and is scalable to large graphs, thus making the high-dimensional and graph-like search spaces amenable to BO. We demonstrate empirically that our surrogate model is capable of identifying useful motifs which can guide the generation of new architectures. We finally show that our method outpe... [full abstract]

Binxin (Robin) Ru, Xingchen Wan, Xiaowen Dong, Michael A. Osborne

ICLR, 2021

[Paper]

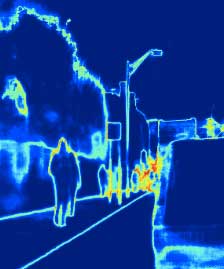

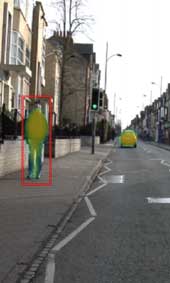

Invariant Representations for Reinforcement Learning without Reconstruction

We study how representation learning can accelerate reinforcement learning from rich observations, such as images, without relying either on domain knowledge or pixel-reconstruction. Our goal is to learn representations that provide for effective downstream control and invariance to task-irrelevant details. Bisimulation metrics quantify behavioral similarity between states in continuous MDPs, which we propose using to learn robust latent representations which encode only the task-relevant information from observations. Our method trains encoders such that distances in latent space equal bisimulation distances in state space. We demonstrate the effectiveness of our method at disregarding task-irrelevant information using modified visual MuJoCo tasks, where the background is replaced with moving distractors and natural videos, while achieving SOTA performance. We also test a first-person highway driving task where our method learns invariance to clouds, weather, and time of day. F... [full abstract]

Amy Zhang, Rowan McAllister, Roberto Calandra, Yarin Gal, Sergey Levine

ICLR, 2021 (Oral)

[Paper]

RainBench: Towards Global Precipitation Forecasting from Satellite Imagery

Extreme precipitation events, such as violent rainfall and hail storms, routinely ravage economies and livelihoods around the developing world. Climate change further aggravates this issue. Data-driven deep learning approaches could widen the access to accurate multi-day forecasts, to mitigate against such events. However, there is currently no benchmark dataset dedicated to the study of global precipitation forecasts. In this paper, we introduce RainBench, a new multi-modal benchmark dataset for data-driven precipitation forecasting. It includes simulated satellite data, a selection of relevant meteorological data from the ERA5 reanalysis product, and IMERG precipitation data. We also release PyRain, a library to process large precipitation datasets efficiently. We present an extensive analysis of our novel dataset and establish baseline results for two benchmark medium-range precipitation forecasting tasks. Finally, we discuss existing data-driven weather for... [full abstract]

Christian Schroeder de Witt, Catherine Tong, Valentina Zantedeschi, Daniele De Martini, Freddie Kalaitzis, Matthew Chantry, Duncan Watson-Parris, Piotr Bilinski

AAAI, 2021

[arXiv]

Improving VAEs' Robustness to Adversarial Attack

Variational autoencoders (VAEs) have recently been shown to be vulnerable to adversarial attacks, wherein they are fooled into reconstructing a chosen target image. However, how to defend against such attacks remains an open problem. We make significant advances in addressing this issue by introducing methods for producing adversarially robust VAEs. Namely, we first demonstrate that methods proposed to obtain disentangled latent representations produce VAEs that are more robust to these attacks. However, this robustness comes at the cost of reducing the quality of the reconstructions. We ameliorate this by applying disentangling methods to hierarchical VAEs. The resulting models produce high-fidelity autoencoders that are also adversarially robust. We confirm their capabilities on several different datasets and with current state-of-the-art VAE adversarial attacks, and also show that they increase the robustness of downstream tasks to attack.

Matthew JF Willetts, Alexander Camuto, Tom Rainforth, Steve Roberts, Christopher Holmes

ICLR, 2021

[Paper]

Multi-Channel Auto-Calibration for the Atmospheric Imaging Assembly using Machine Learning

Solar activity plays a quintessential role in influencing the interplanetary medium and space-weather around the Earth. Remote sensing instruments onboard heliophysics space missions provide a pool of information about the Sun’s activity via the measurement of its magnetic field and the emission of light from the multi-layered, multi-thermal, and dynamic solar atmosphere. Extreme UV (EUV) wavelength observations from space help in understanding the subtleties of the outer layers of the Sun, namely the chromosphere and the corona. Unfortunately, such instruments, like the Atmospheric Imaging Assembly (AIA) onboard NASA’s Solar Dynamics Observatory (SDO), suffer from time-dependent degradation, reducing their sensitivity. Current state-of-the-art calibration techniques rely on periodic sounding rockets, which can be infrequent and rather unfeasible for deep-space missions. We present an alternative calibration approach based on convolutional neural networks (CNNs). We use SDO-AIA ... [full abstract]

Luiz F. G. Dos Santos, Souvik Bose, Valentina Salvatelli, Brad Neuberg, Mark C. M. Cheung, Miho Janvier, Meng Jin, Yarin Gal, Paul Boerner, Atılım Güneş Baydin

Astronomy & Astrophysics, 2021

[Paper] [arXiv]

Capturing Label Characteristics in VAEs

We present a principled approach to incorporating labels in variational autoencoders (VAEs) that captures the rich characteristic information associated with those labels. While prior work has typically conflated these by learning latent variables that directly correspond to label values, we argue this is contrary to the intended effect of supervision in VAEs—capturing rich label characteristics with the latents. For example, we may want to capture the characteristics of a face that make it look young, rather than just the age of the person. To this end, we develop a novel VAE model, the characteristic capturing VAE (CCVAE), which “reparameterizes” supervision through auxiliary variables and a concomitant variational objective. Through judicious structuring of mappings between latent and auxiliary variables, we show that the CCVAE can effectively learn meaningful representations of the characteristics of interest across a variety of supervision schemes. In particular, we show th... [full abstract]

Tom Joy, Sebastian Schmon, Philip Torr, Siddharth N, Tom Rainforth

ICLR, 2021

[Paper]

Improving Transformation Invariance in Contrastive Representation Learning

We propose methods to strengthen the invariance properties of representations obtained by contrastive learning. While existing approaches implicitly induce a degree of invariance as representations are learned, we look to more directly enforce invariance in the encoding process. To this end, we first introduce a training objective for contrastive learning that uses a novel regularizer to control how the representation changes under transformation. We show that representations trained with this objective perform better on downstream tasks and are more robust to the introduction of nuisance transformations at test time. Second, we propose a change to how test time representations are generated by introducing a feature averaging approach that combines encodings from multiple transformations of the original input, finding that this leads to across the board performance gains. Finally, we introduce the novel Spirograph dataset to explore our ideas in the context of a differentiable g... [full abstract]

Adam Foster, Rattana Pukdee, Tom Rainforth

ICLR, 2021

[Paper]
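
The feature averaging idea in the abstract above, combining encodings from multiple transformations of the same input at test time, can be sketched as follows. The encoder and the transformations are hypothetical stand-ins (a random tanh layer and cyclic shifts), not the models or augmentations used in the paper.

```python
import numpy as np

def feature_average(encoder, x, transforms):
    """Average the encodings of several transformed copies of x."""
    return np.mean([encoder(t(x)) for t in transforms], axis=0)

rng = np.random.default_rng(0)
weights = rng.normal(size=(6, 4))

def toy_encoder(x):
    # Hypothetical encoder: a single random tanh layer.
    return np.tanh(x @ weights)

# Hypothetical transformation family: all cyclic shifts of a length-6 input.
shifts = [lambda v, k=k: np.roll(v, k) for k in range(6)]

x = rng.normal(size=6)
z_single = toy_encoder(x)                                   # ordinary representation
z_avg = feature_average(toy_encoder, x, shifts)             # averaged representation
z_avg_shifted = feature_average(toy_encoder, np.roll(x, 2), shifts)
```

Because the shifts here form a complete group, the averaged representation of `x` and of a shifted `x` coincide exactly, whereas the single encodings generally differ. With a sampled subset of transformations, as would be typical in practice, the invariance is only approximate.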

On Statistical Bias In Active Learning: How and When to Fix It

Active learning is a powerful tool when labelling data is expensive, but it introduces a bias because the training data no longer follows the population distribution. We formalize this bias and investigate the situations in which it can be harmful and sometimes even helpful. We further introduce novel corrective weights to remove bias when doing so is beneficial. Through this, our work not only provides a useful mechanism that can improve the active learning approach, but also an explanation for the empirical successes of various existing approaches which ignore this bias. In particular, we show that this bias can be actively helpful when training overparameterized models—like neural networks—with relatively modest dataset sizes.

Sebastian Farquhar, Yarin Gal, Tom Rainforth

ICLR, 2021 (Spotlight)

[Paper]

Black-Box Optimization with Local Generative Surrogates

We propose a novel method for gradient-based optimization of black-box simulators using differentiable local surrogate models. In fields such as physics and engineering, many processes are modeled with non-differentiable simulators with intractable likelihoods. Optimization of these forward models is particularly challenging, especially when the simulator is stochastic. To address such cases, we introduce the use of deep generative models to iteratively approximate the simulator in local neighborhoods of the parameter space. We demonstrate that these local surrogates can be used to approximate the gradient of the simulator, and thus enable gradient-based optimization of simulator parameters. In cases where the dependence of the simulator on the parameter space is constrained to a low dimensional submanifold, we observe that our method attains minima faster than baseline methods, including Bayesian optimization, numerical optimization, and approaches using score function gradient... [full abstract]

Sergey Shirobokov, Vladislav Belavin, Michael Kagan, Andrey Ustyuzhanin, Atılım Güneş Baydin

Advances in Neural Information Processing Systems 34 (NeurIPS)

[Paper]

Inferring the effectiveness of government interventions against COVID-19

Governments are attempting to control the COVID-19 pandemic with nonpharmaceutical interventions (NPIs). However, the effectiveness of different NPIs at reducing transmission is poorly understood. We gathered chronological data on the implementation of NPIs for several European, and other, countries between January and the end of May 2020. We estimate the effectiveness of NPIs, ranging from limiting gathering sizes, business closures, and closure of educational institutions to stay-at-home orders. To do so, we used a Bayesian hierarchical model that links NPI implementation dates to national case and death counts and supported the results with extensive empirical validation. Closing all educational institutions, limiting gatherings to 10 people or less, and closing face-to-face businesses each reduced transmission considerably. The additional effect of stay-at-home orders was comparatively small.

Jan Brauner, Sören Mindermann, Mrinank Sharma, David Johnston, John Salvatier, Tomáš Gavenčiak, Anna B Stephenson, Gavin Leech, George Altman, Vladimir Mikulik, Alexander John Norman, Joshua Teperowski Monrad, Tamay Besiroglu, Hong Ge, Meghan A Hartwick, Yee Whye Teh, Leonid Chindelevitch, Yarin Gal, Jan Kulveit

Science (2020): eabd9338

[Paper]

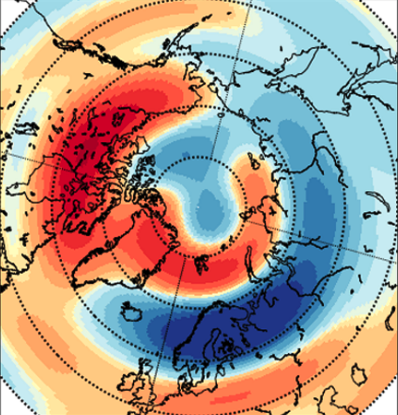

Global Earth Magnetic Field Modeling and Forecasting with Spherical Harmonics Decomposition

Modeling and forecasting the solar wind-driven global magnetic field perturbations is an open challenge. Current approaches depend on simulations of computationally demanding models like the Magnetohydrodynamics (MHD) model or sampling spatially and temporally through sparse ground-based stations (SuperMAG). In this paper, we develop a Deep Learning model that forecasts in Spherical Harmonics space, replacing reliance on MHD models and providing global coverage at one-minute cadence, improving over the current state-of-the-art which relies on feature engineering. We evaluate the performance on the SuperMAG dataset (improved by 14.53%) and MHD simulations (improved by 24.35%). Additionally, we evaluate the extrapolation performance of the spherical harmonics reconstruction based on sparse ground-based stations (SuperMAG), showing that spherical harmonics can reliably reconstruct the global magnetic field as evaluated on MHD simulation.

Panagiotis Tigas, Téo Bloch, Vishal Upendran, Banafsheh Ferdoushi, Yarin Gal, Siddha Ganju, Ryan M. McGranaghan, Mark C. M. Cheung, Asti Bhatt

Machine Learning and the Physical Sciences Workshop - 34th NeurIPS 2020 [Paper]

Determining new representations of “Geoeffectiveness” using deep learning - AGU 2020

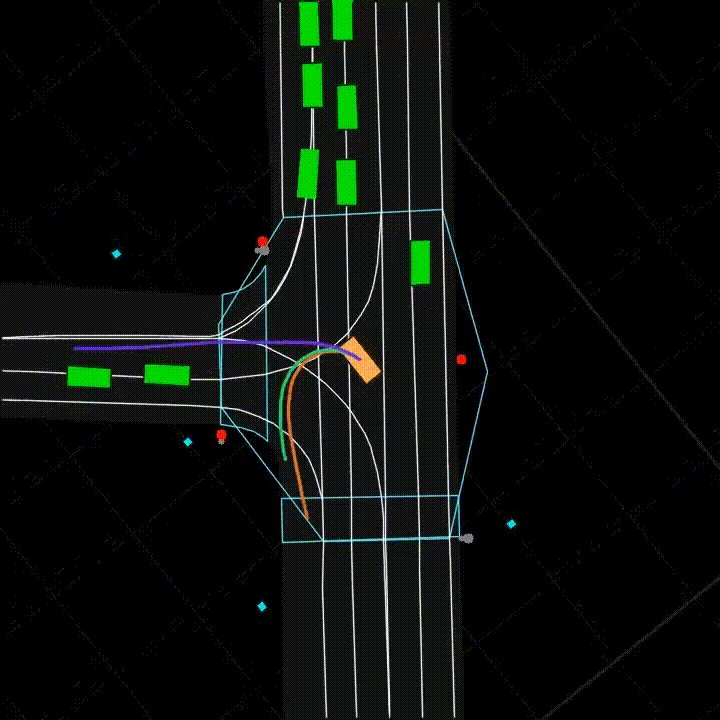

Real2sim: Automatic Generation of Open Street Map Towns For Autonomous Driving Benchmarks

Research in machine learning for autonomous driving (AD) is a constantly evolving field as researchers strive to build a Level 5 autonomous driving system. However, current benchmarks for such learning algorithms do not satisfactorily allow researchers to evaluate and compare performance across safety-critical metrics such as generalizability, out-of-distribution performance, etc. Reasons for this include the expensive nature of data collection from the real-world for autonomous driving and the limitations of software tools currently available for autonomous driving simulators. We develop a pipeline that allows for automatic generation of new town maps for simulator environments from OpenStreetMap [Haklay and Weber, 2008]. We demonstrate that our pipeline is capable of generating towns that, when perceived via LiDAR, share a similar footprint to real-world gathered datasets like NuScenes [Caesar et al., 2020]. Additionally, we learn a realistic noise augmentation via Conditional ... [full abstract]

Avishek Mondal, Panagiotis Tigas, Yarin Gal

Machine Learning for Autonomous Driving Workshop at the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada. [Paper]

A Bayesian Perspective on Training Speed and Model Selection

We take a Bayesian perspective to illustrate a connection between training speed and the marginal likelihood in linear models. This provides two major insights: first, that a measure of a model’s training speed can be used to estimate its marginal likelihood. Second, that this measure, under certain conditions, predicts the relative weighting of models in linear model combinations trained to minimize a regression loss. We verify our results in model selection tasks for linear models and for the infinite-width limit of deep neural networks. We further provide encouraging empirical evidence that the intuition developed in these settings also holds for deep neural networks trained with stochastic gradient descent. Our results suggest a promising new direction towards explaining why neural networks trained with stochastic gradient descent are biased towards functions that generalize well.

Clare Lyle, Lisa Schut, Binxin (Robin) Ru, Yarin Gal, Mark van der Wilk

NeurIPS, 2020

[Paper] [Code] [BibTex]
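
One standard way to see why training speed and marginal likelihood are related, offered here as a sketch rather than a restatement of the paper's results, is the chain-rule decomposition of the log marginal likelihood of a dataset $\mathcal{D} = (d_1, \dots, d_n)$ under a model $\mathcal{M}$:

```latex
\log p(\mathcal{D} \mid \mathcal{M})
  = \sum_{i=1}^{n} \log p(d_i \mid d_{<i}, \mathcal{M}),
\qquad
p(d_i \mid d_{<i}, \mathcal{M})
  = \int p(d_i \mid \theta)\, p(\theta \mid d_{<i}, \mathcal{M})\, \mathrm{d}\theta .
```

Each term is the posterior predictive log-likelihood of the next data point given the points seen so far. A model that predicts new points well after only a few observations therefore accumulates a higher sum, which ties how quickly the model learns to its marginal likelihood.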

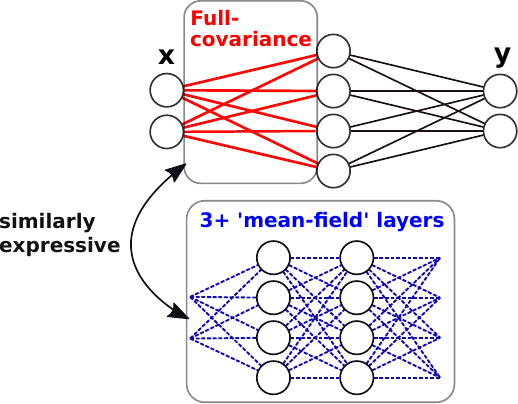

Liberty or Depth: Deep Bayesian Neural Nets Do Not Need Complex Weight Posterior Approximations

We challenge the longstanding assumption that the mean-field approximation for variational inference in Bayesian neural networks is severely restrictive, and show this is not the case in deep networks. We prove several results indicating that deep mean-field variational weight posteriors can induce similar distributions in function-space to those induced by shallower networks with complex weight posteriors. We validate our theoretical contributions empirically, both through examination of the weight posterior using Hamiltonian Monte Carlo in small models and by comparing diagonal- to structured-covariance in large settings. Since complex variational posteriors are often expensive and cumbersome to implement, our results suggest that using mean-field variational inference in a deeper model is both a practical and theoretically justified alternative to structured approximations.

Sebastian Farquhar, Lewis Smith, Yarin Gal

NeurIPS, 2020

[Paper] [arXiv]

Spatial Assembly: Generative Architecture With Reinforcement Learning, Self Play and Tree Search

With this work we investigate the use of Reinforcement Learning (RL) for generation of spatial assemblies, by combining ideas from Procedural Generation algorithms (Wave Function Collapse algorithm (WFC)) and RL for Game Solving. WFC is a Generative Design algorithm, inspired by Constraint Satisfaction Solvers. In WFC, one defines a set of tiles/blocks and constraints and the algorithm generates an assembly that satisfies these constraints. Casting the problem of generation of spatial assemblies as a Markov Decision Process whose state transitions are defined by WFC, we propose an algorithm that uses Reinforcement Learning and Self-Play to learn a policy that generates assemblies which maximize objectives set by the designer. We demonstrate the use of our Spatial Assembly algorithm in Architecture Design.

Panagiotis Tigas, Tyson Hosmer

Workshop on Machine Learning for Creativity and Design at the 34th Conference on Neural Information Processing Systems (NeurIPS 2020) [Paper]

Uncertainty-Aware Counterfactual Explanations for Medical Diagnosis

While deep learning algorithms can excel at predicting outcomes, they often act as black-boxes rendering them uninterpretable for healthcare practitioners. Counterfactual explanations (CEs) are a practical tool for demonstrating why machine learning models make particular decisions. We introduce a novel algorithm that leverages uncertainty to generate trustworthy counterfactual explanations for white-box models. Our proposed method can generate more interpretable CEs than the current benchmark (Van Looveren and Klaise, 2019) for breast cancer diagnosis. Further, our approach provides confidence levels for both the diagnosis as well as the explanation.

Lisa Schut, Oscar Key, Rory McGrath, Luca Costabello, Bogdan Sacaleanu, Medb Corcoran, Yarin Gal

ML4H: Machine Learning for Health Workshop NeurIPS, 2020

[Paper] [BibTex]

On the robustness of effectiveness estimation of nonpharmaceutical interventions against COVID-19 transmission

There remains much uncertainty about the relative effectiveness of different nonpharmaceutical interventions (NPIs) against COVID-19 transmission. Several studies attempt to infer NPI effectiveness with cross-country, data-driven modelling, by linking from NPI implementation dates to the observed timeline of cases and deaths in a country. These models make many assumptions. Previous work sometimes tests the sensitivity to variations in explicit epidemiological model parameters, but rarely analyses the sensitivity to the assumptions that are made by the choice of the model structure (structural sensitivity analysis). Such analysis would ensure that the inferences made are consistent under plausible alternative assumptions. Without it, NPI effectiveness estimates cannot be used to guide policy. We investigate four model structures similar to a recent state-of-the-art Bayesian hierarchical model. We find that the models differ considerably in the robustness of their NPI effectivene... [full abstract]

Mrinank Sharma, Sören Mindermann, Jan Brauner, Gavin Leech, Anna B. Stephenson, Tomáš Gavenčiak, Jan Kulveit, Yee Whye Teh, Leonid Chindelevitch, Yarin Gal

NeurIPS, 2020

[Paper]

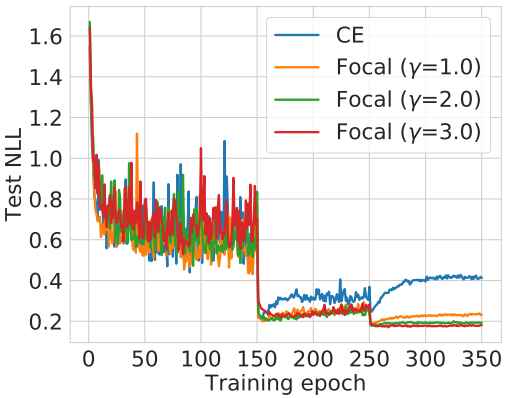

Calibrating Deep Neural Networks using Focal Loss

Miscalibration – a mismatch between a model’s confidence and its correctness – of Deep Neural Networks (DNNs) makes their predictions hard to rely on. Ideally, we want networks to be accurate, calibrated and confident. We show that, as opposed to the standard cross-entropy loss, focal loss (Lin et al., 2017) allows us to learn models that are already very well calibrated. When combined with temperature scaling, whilst preserving accuracy, it yields state-of-the-art calibrated models. We provide a thorough analysis of the factors causing miscalibration, and use the insights we glean from this to justify the empirically excellent performance of focal loss. To facilitate the use of focal loss in practice, we also provide a principled approach to automatically select the hyperparameter involved in the loss function. We perform extensive experiments on a variety of computer vision and NLP datasets, and with a wide variety of network architectures, and show that our approach achieves ... [full abstract]

Jishnu Mukhoti, Viveka Kulharia, Amartya Sanyal, Stuart Golodetz, Philip H.S. Torr, Puneet K. Dokania

NeurIPS, 2020

[Paper]
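
For readers unfamiliar with the loss named in the abstract above, the basic form from Lin et al. (2017) is $-(1 - p_t)^{\gamma} \log p_t$, where $p_t$ is the probability assigned to the true class. The NumPy sketch below implements only that basic form; the function name and the example probabilities are illustrative, and the automatic hyperparameter selection described in the abstract is not reproduced.

```python
import numpy as np

def focal_loss(probs, labels, gamma=2.0):
    """Mean focal loss for predicted class probabilities.

    probs:  array of shape (n_samples, n_classes), rows summing to 1
    labels: integer class indices of shape (n_samples,)
    gamma:  focusing parameter; gamma = 0 recovers cross-entropy
    """
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=int)
    p_true = probs[np.arange(len(labels)), labels]
    # Confident correct predictions (p_true near 1) are down-weighted.
    return float(np.mean(-((1.0 - p_true) ** gamma) * np.log(p_true)))

probs = np.array([[0.90, 0.05, 0.05],
                  [0.40, 0.50, 0.10]])
labels = np.array([0, 1])

cross_entropy = focal_loss(probs, labels, gamma=0.0)
focal = focal_loss(probs, labels, gamma=2.0)
```

The modulating factor shrinks the contribution of examples the model already classifies confidently, so the focal value is never larger than the cross-entropy value on the same predictions.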

AutoSimulate: (Quickly) Learning Synthetic Data Generation

Simulation is increasingly being used for generating large labelled datasets in many machine learning problems. Recent methods have focused on adjusting simulator parameters with the goal of maximising accuracy on a validation task, usually relying on REINFORCE-like gradient estimators. However, these approaches are very expensive as they treat the entire data generation, model training, and validation pipeline as a black-box and require multiple costly objective evaluations at each iteration. We propose an efficient alternative for optimal synthetic data generation, based on a novel differentiable approximation of the objective. This allows us to optimize the simulator, which may be non-differentiable, requiring only one objective evaluation at each iteration with little overhead. We demonstrate on a state-of-the-art photorealistic renderer that the proposed method finds the optimal data distribution faster (up to 50×), with significantly reduced training data generation (up t... [full abstract]

Harkirat Singh Behl, Atılım Güneş Baydin, Ran Gal, Philip H.S. Torr, Vibhav Vineet

16th European Conference on Computer Vision (ECCV 2020)

[arXiv] [BibTex]

Identifying Causal Effect Inference Failure with Uncertainty-Aware Models

Recommending the best course of action for an individual is a major application of individual-level causal effect estimation. This application is often needed in safety-critical domains such as healthcare, where estimating and communicating uncertainty to decision-makers is crucial. We introduce a practical approach for integrating uncertainty estimation into a class of state-of-the-art neural network methods used for individual-level causal estimates. We show that our methods enable us to deal gracefully with situations of “no-overlap”, common in high-dimensional data, where standard applications of causal effect approaches fail. Further, our methods allow us to handle covariate shift, where the test distribution differs from the training distribution, common when systems are deployed in practice. We show that when such a covariate shift occurs, correctly modeling uncertainty can keep us from giving overconfident and potentially harmful recommendations. We demonstrate our methodology with a... [full abstract]

Andrew Jesson, Sören Mindermann, Uri Shalit, Yarin Gal

NeurIPS, 2020

[arXiv] [BibTex]

Capsule Networks: A Generative Probabilistic Perspective

‘Capsule’ models try to explicitly represent the poses of objects, enforcing a linear relationship between an object's pose and those of its constituent parts. This modelling assumption should lead to robustness to viewpoint changes since the object-component relationships are invariant to the poses of the object. We describe a probabilistic generative model that encodes these assumptions. Our probabilistic formulation separates the generative assumptions of the model from the inference scheme, which we derive from a variational bound. We experimentally demonstrate the applicability of our unified objective, and the use of test time optimisation to solve problems inherent to amortised inference.

Lewis Smith, Lisa Schut, Yarin Gal, Mark van der Wilk

Object Oriented Learning Workshop, ICML 2020

[Paper]

Principled Uncertainty Estimation for High Dimensional Data

The ability to quantify the uncertainty in the prediction of a Bayesian deep learning model has significant practical implications, from more robust machine-learning-based systems to more effective expert-in-the-loop processes. While several general measures of model uncertainty exist, they are often intractable in practice when dealing with high-dimensional data such as long sequences. Instead, researchers often resort to ad hoc approaches or to introducing independence assumptions to make computation tractable. We introduce a principled approach to estimate uncertainty in high dimensions that circumvents these challenges, and demonstrate its benefits in de novo molecular design.

Pascal Notin, José Miguel Hernández-Lobato, Yarin Gal

Uncertainty & Robustness in Deep Learning Workshop, ICML, 2020

[Paper]

SliceOut: Training Transformers and CNNs faster while using less memory

We demonstrate 10-40% speedups and memory reduction with Wide ResNets, EfficientNets, and Transformer models, with minimal to no loss in accuracy, using SliceOut—a new dropout scheme designed to take advantage of GPU memory layout. By dropping contiguous sets of units at random, our method preserves the regularization properties of dropout while allowing for more efficient low-level implementation, resulting in training speedups through (1) fast memory access and matrix multiplication of smaller tensors, and (2) memory savings by avoiding allocating memory to zero units in weight gradients and activations. Despite its simplicity, our method is highly effective. We demonstrate its efficacy at scale with Wide ResNets & EfficientNets on CIFAR10/100 and ImageNet, as well as Transformers on the LM1B dataset. These speedups and memory savings in training can lead to CO2 emissions reduction of up to 40% for training large models.

Pascal Notin, Aidan Gomez, Joanna Yoo, Yarin Gal

Under review

[Paper]

On using Focal Loss for Neural Network Calibration

Miscalibration – a mismatch between a model’s confidence and its correctness – of Deep Neural Networks (DNNs) makes their predictions hard to rely on. Ideally, we want networks to be accurate and calibrated. In this work, we study focal loss as an alternative to the conventional cross-entropy loss and show that focal loss allows us to learn models that are comparatively well calibrated while preserving accuracy. We provide a thorough analysis of the factors causing miscalibration, and use the insights we glean from this to justify the superior performance of focal loss. Finally, we perform extensive experiments on a variety of datasets, and with a wide variety of network architectures, and show that focal loss indeed achieves excellent calibration without compromising on accuracy in almost all cases.

Jishnu Mukhoti, Viveka Kulharia, Amartya Sanyal, Stuart Golodetz, Philip H.S. Torr, Puneet K. Dokania

Uncertainty and Robustness in Deep Learning Workshop, ICML 2020

[Paper]

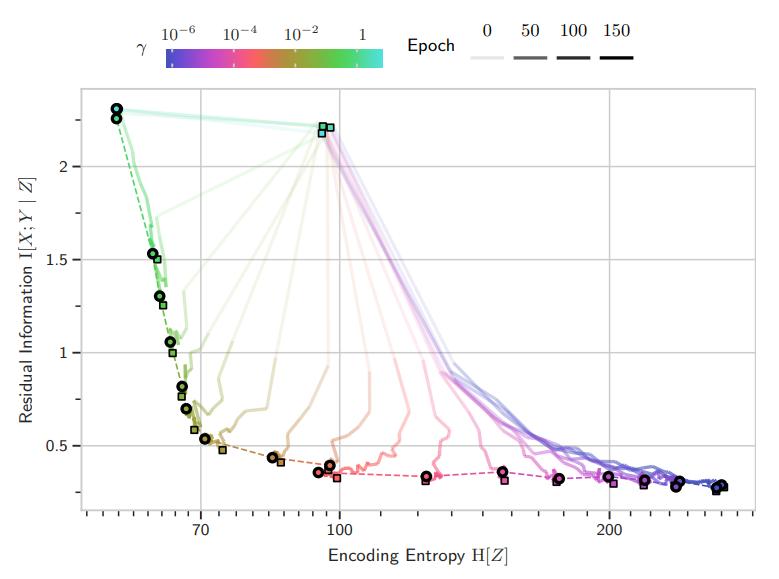

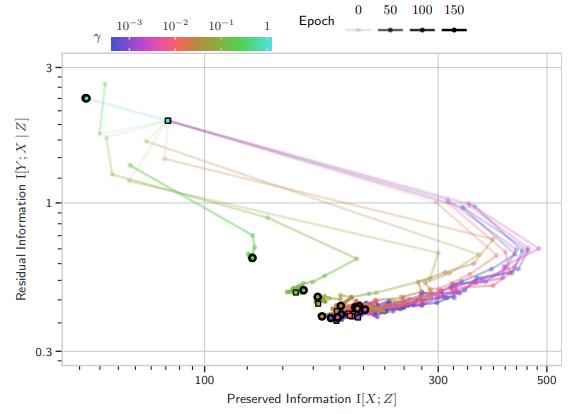

Unpacking Information Bottlenecks: Unifying Information-Theoretic Objectives in Deep Learning

The Information Bottleneck principle offers both a mechanism to explain how deep neural networks train and generalize and a regularized objective with which to train models. However, multiple competing objectives are proposed in the literature, and the information-theoretic quantities used in these objectives are difficult to compute for large deep neural networks, which in turn limits their use as a training objective. In this work, we review these quantities and compare and unify previously proposed objectives, which allows us to develop surrogate objectives more friendly to optimization without relying on cumbersome tools such as density estimation. We find that these surrogate objectives allow us to apply the information bottleneck to modern neural network architectures. We demonstrate our insights on MNIST, CIFAR-10 and Imagenette with modern DNN architectures (ResNets).

Andreas Kirsch, Clare Lyle, Yarin Gal

Uncertainty & Robustness in Deep Learning Workshop, ICML, 2020

[Paper] [BibTex] [Poster]

Learning CIFAR-10 with a Simple Entropy Estimator Using Information Bottleneck Objectives

The Information Bottleneck (IB) principle characterizes learning and generalization in deep neural networks in terms of the change in two information theoretic quantities and leads to a regularized objective function for training neural networks. These quantities are difficult to compute directly for deep neural networks. We show that it is possible to backpropagate through a simple entropy estimator to obtain an IB training method that works for modern neural network architectures. We evaluate our approach empirically on the CIFAR-10 dataset, showing that IB objectives can yield competitive performance on this dataset with a conceptually simple approach while also performing well against adversarial attacks out-of-the-box.

Andreas Kirsch, Clare Lyle, Yarin Gal

Uncertainty & Robustness in Deep Learning Workshop, ICML, 2020

[Paper] [BibTex]

Invariant Causal Prediction for Block MDPs

Generalization across environments is critical to the successful application of reinforcement learning algorithms to real-world challenges. In this paper, we consider the problem of learning abstractions that generalize in block MDPs, families of environments with a shared latent state space and dynamics structure over that latent space, but varying observations. We leverage tools from causal inference to propose a method of invariant prediction to learn model-irrelevance state abstractions (MISA) that generalize to novel observations in the multi-environment setting. We prove that for certain classes of environments, this approach outputs with high probability a state abstraction corresponding to the causal feature set with respect to the return. We further provide more general bounds on model error and generalization error in the multi-environment setting, in the process showing a connection between causal variable selection and the state abstraction framework for MDPs. We giv... [full abstract]

Amy Zhang, Clare Lyle, Shagun Sodhani, Angelos Filos, Marta Kwiatkowska, Joelle Pineau, Yarin Gal, Doina Precup

Causal Learning for Decision Making Workshop at ICLR, 2020

[Paper]

ICML, 2020

[Paper]

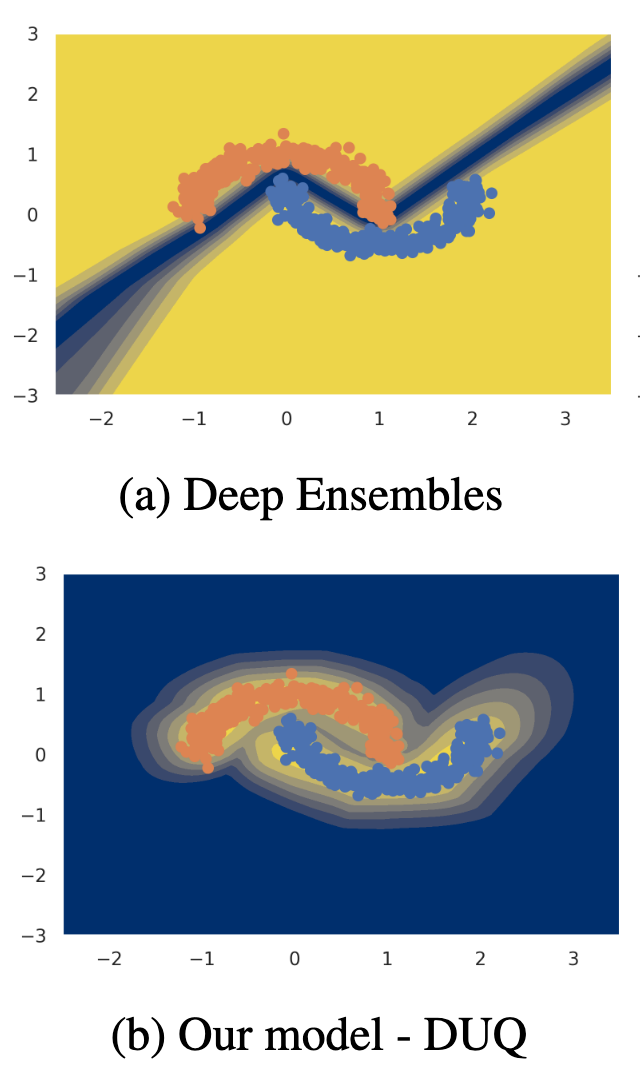

Uncertainty Estimation Using a Single Deep Deterministic Neural Network

We propose a method for training a deterministic deep model that can find and reject out-of-distribution data points at test time with a single forward pass. Our approach, deterministic uncertainty quantification (DUQ), builds upon ideas of RBF networks. We scale training in these with a novel loss function and centroid updating scheme and match the accuracy of softmax models. By enforcing detectability of changes in the input using a gradient penalty, we are able to reliably detect out-of-distribution data. Our uncertainty quantification scales well to large datasets, and using a single model, we improve upon or match Deep Ensembles in out-of-distribution detection on notably difficult dataset pairs such as FashionMNIST vs. MNIST, and CIFAR-10 vs. SVHN.

Joost van Amersfoort, Lewis Smith, Yee Whye Teh, Yarin Gal

ICML, 2020

[Paper] [BibTex]

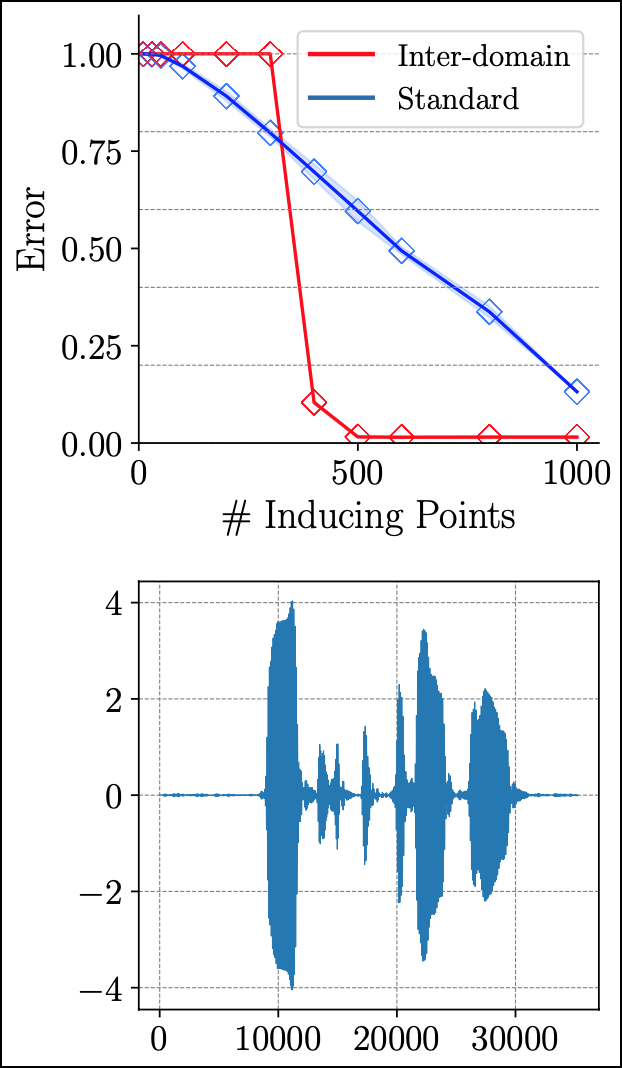

Inter-domain Deep Gaussian Processes

Inter-domain Gaussian processes (GPs) allow for high flexibility and low computational cost when performing approximate inference in GP models. They are particularly suitable for modeling data exhibiting global structure but are limited to stationary covariance functions and thus fail to model non-stationary data effectively. We propose Inter-domain Deep Gaussian Processes, an extension of inter-domain shallow GPs that combines the advantages of inter-domain and deep Gaussian processes (DGPs), and demonstrate how to leverage existing approximate inference methods to perform simple and scalable approximate inference using inter-domain features in DGPs. We assess the performance of our method on a range of regression tasks and demonstrate that it outperforms inter-domain shallow GPs and conventional DGPs on challenging large-scale real-world datasets exhibiting both global structure and a high degree of non-stationarity.

Tim G. J. Rudner, Dino Sejdinovic, Yarin Gal

ICML, 2020

[arXiv] [Website] [Talk] [Slides] [BibTex]

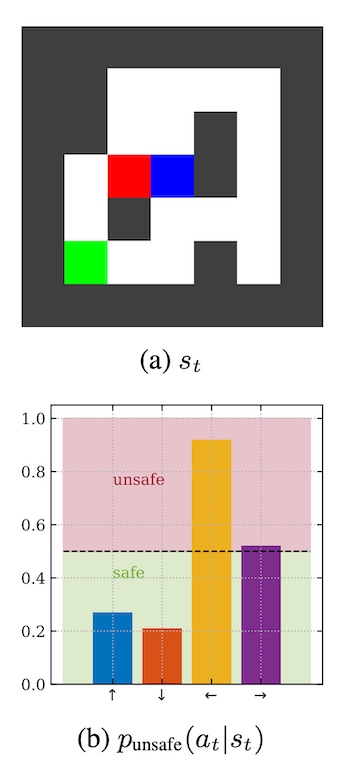

Can Autonomous Vehicles Identify, Recover From, and Adapt to Distribution Shifts?

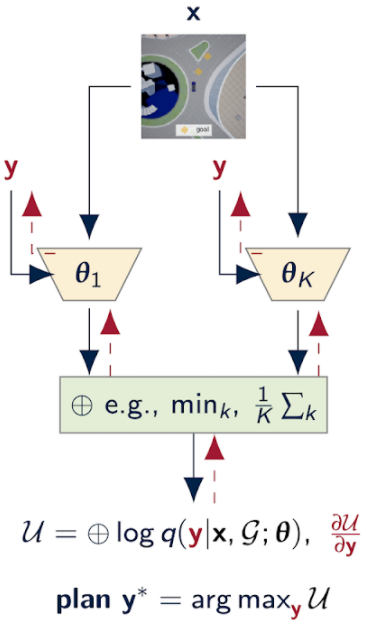

Out-of-training-distribution (OOD) scenarios are a common challenge of learning agents at deployment, typically leading to arbitrary deductions and poorly-informed decisions. In principle, detection of and adaptation to OOD scenes can mitigate their adverse effects. In this paper, we highlight the limitations of current approaches to novel driving scenes and propose an epistemic uncertainty-aware planning method, called robust imitative planning (RIP). Our method can detect and recover from some distribution shifts, reducing the overconfident and catastrophic extrapolations in OOD scenes. If the model’s uncertainty is too great to suggest a safe course of action, the model can instead query the expert driver for feedback, enabling sample-efficient online adaptation, a variant of our method we term adaptive robust imitative planning (AdaRIP). Our methods outperform current state-of-the-art approaches in the nuScenes prediction challenge, but since no be... [full abstract]

Angelos Filos, Panagiotis Tigas, Rowan McAllister, Nicholas Rhinehart, Sergey Levine, Yarin Gal

ICML, 2020

[Paper] [Code] [Website]

Divide, Conquer, and Combine: a New Inference Strategy for Probabilistic Programs with Stochastic Support

Universal probabilistic programming systems (PPSs) provide a powerful framework for specifying rich and complex probabilistic models. They further attempt to automate the process of drawing inferences from these models, but doing this successfully is severely hampered by the wide range of non-standard models they can express. As a result, although one can specify complex models in a universal PPS, the provided inference engines often fall far short of what is required. In particular, we show they produce surprisingly unsatisfactory performance for models where the support may vary between executions, often doing no better than importance sampling from the prior. To address this, we introduce a new inference framework: Divide, Conquer, and Combine, which remains efficient for such models, and show how it can be implemented as an automated and general-purpose PPS inference engine. We empirically demonstrate substantial performance improvements over existing approaches on two examp... [full abstract]

Yuan Zhou, Hongseok Yang, Yee Whye Teh, Tom Rainforth

ICML, 2020

[Paper]

Model And Data Uncertainty For Satellite Time Series Forecasting With Deep Recurrent Models

Deep learning is often criticized as a black-box method that often provides accurate predictions but offers limited explanation of the underlying processes and no indication of when not to trust those predictions. Equipping existing deep learning models with an (approximate) notion of uncertainty can help mitigate both of these issues; therefore, their use should be known more broadly in the community. The Bayesian deep learning community has developed model-agnostic and easy-to-implement methodology to estimate both data and model uncertainty within deep learning models, which is hardly applied in the remote sensing community. In this work, we adopt this methodology for deep recurrent satellite time series forecasting and test its assumptions on data and model uncertainty. We demonstrate its effectiveness on two applications, climate change and event change detection, and outline limitations.

Marc Rußwurm, Syed Mohsin Ali, Xiao Xiang Zhu, Yarin Gal, Marco Körner

Student Paper Competition Finalists (out of 250 submissions), IGARSS 2020

[Paper]

Uncertainty Evaluation Metric for Brain Tumour Segmentation

In this paper, we develop a metric designed to assess and rank uncertainty measures for the task of brain tumour sub-tissue segmentation in the BraTS 2019 sub-challenge on uncertainty quantification. The metric is designed to: (1) reward uncertainty measures where high confidence is assigned to correct assertions and low confidence is assigned to incorrect assertions, and (2) penalize measures that have higher percentages of under-confident correct assertions. Here, the workings of the components of the metric are explored based on a number of popular uncertainty measures evaluated on the BraTS 2019 dataset.

Raghav Mehta, Angelos Filos, Yarin Gal, Tal Arbel

MIDL, 2020

[Paper]

Uncertainty Quantification with Statistical Guarantees in End-to-End Autonomous Driving Control

Deep neural network controllers for autonomous driving have recently benefited from significant performance improvements, and have begun deployment in the real world. Prior to their widespread adoption, safety guarantees are needed on the controller behaviour that properly take account of the uncertainty within the model as well as sensor noise. Bayesian neural networks, which assume a prior over the weights, have been shown capable of producing such uncertainty measures, but properties surrounding their safety have not yet been quantified for use in autonomous driving scenarios. In this paper, we develop a framework based on a state-of-the-art simulator for evaluating end-to-end Bayesian controllers. In addition to computing pointwise uncertainty measures that can be computed in real time and with statistical guarantees, we also provide a method for estimating the probability that, given a scenario, the controller keeps the car safe within a finite horizon. We experimentally ev... [full abstract]

Rhiannon Michelmore, Matthew Wicker, Luca Laurenti, Luca Cardelli, Yarin Gal, Marta Kwiatkowska

2020 International Conference on Robotics and Automation (ICRA)

[arXiv]

Try Depth Instead of Weight Correlations: Mean-field is a Less Restrictive Assumption for Deeper Networks