Publications

Targeted Dropout

Neural networks are extremely flexible models due to their large number of parameters, which is beneficial for learning, but also highly redundant. This makes it possible to compress neural networks without having a drastic effect on performance. We introduce targeted dropout, a strategy for post hoc pruning of neural network weights and units that builds the pruning mechanism directly into learning. At each weight update, targeted dropout selects a candidate set for pruning using a simple selection criterion, and then stochastically prunes the network via dropout applied to this set. The resulting network learns to be explicitly robust to pruning, comparing favourably to more complicated regularization schemes while at the same time being extremely simple to implement, and easy to tune.

Aidan N. Gomez, Ivan Zhang, Kevin Swersky, Yarin Gal, Geoffrey E. Hinton

NIPS 2018 workshop on Compact Deep Neural Networks with industrial applications

[Paper] [BibTex]
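A minimal sketch of the weight-level idea, assuming the "simple selection criterion" is weight magnitude: the fraction `gamma` of weights with the smallest absolute value forms the candidate set, and each candidate is dropped with probability `alpha`. The function name and parameters are illustrative, not the authors' code, and no dropout rescaling is applied here.

```python
import random

def targeted_weight_dropout(weights, gamma, alpha, rng=random):
    """Return a copy of `weights` with (magnitude-based) targeted dropout applied.

    Candidate set: the int(gamma * len(weights)) weights with smallest |w|.
    Each candidate is zeroed independently with probability alpha;
    weights outside the candidate set are never dropped.
    """
    k = int(gamma * len(weights))
    # Indices sorted by magnitude; the first k form the candidate set.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    candidates = set(order[:k])
    out = []
    for i, w in enumerate(weights):
        if i in candidates and rng.random() < alpha:
            out.append(0.0)  # stochastically pruned
        else:
            out.append(w)
    return out
```

For example, `targeted_weight_dropout([0.9, -0.05, 0.4, 0.01], gamma=0.5, alpha=1.0)` zeroes the two smallest-magnitude weights. Because only low-magnitude weights are ever dropped during training, the network is pushed to tolerate exactly the pruning that would be applied afterwards.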

On the Importance of Strong Baselines in Bayesian Deep Learning

Like all sub-fields of machine learning, Bayesian Deep Learning is driven by empirical validation of its theoretical proposals. Given the many aspects of an experiment, it is always possible that minor or even major experimental flaws can slip by both authors and reviewers. One of the most popular experiments used to evaluate approximate inference techniques is the regression experiment on UCI datasets. However, in this experiment, models which have been trained to convergence have often been compared with baselines trained only for a fixed number of iterations. We find that if we take a well-established baseline and evaluate it under the same experimental settings, it shows significant improvements in performance. In fact, it outperforms or performs competitively with several methods that, when they were introduced, claimed to be superior to the very same baseline method. Hence, by exposing this flaw in experimental procedure, we highlight the importance of using identical experimental setups to evaluate, compare and benchmark methods in Bayesian Deep Learning.

Jishnu Mukhoti, Pontus Stenetorp, Yarin Gal

Bayesian Deep Learning workshop, NIPS, 2018

[Paper] [arXiv] [BibTex]

Evaluating Uncertainty Quantification in End-to-End Autonomous Driving Control

Self-driving has benefited from significant performance improvements with the rise of deep learning, with millions of miles having been driven with no human intervention. Despite this, crashes and erroneous behaviours still occur, in part due to the complexity of verifying the correctness of DNNs and a lack of safety guarantees. In this paper, we demonstrate how quantitative measures of uncertainty can be extracted in real-time, and their quality evaluated in end-to-end controllers for self-driving cars. We propose evaluation techniques for the uncertainty on two separate architectures which use the uncertainty to predict crashes up to five seconds in advance. We find that mutual information, a measure of uncertainty in classification networks, is a promising indicator of forthcoming crashes.

Rhiannon Michelmore, Marta Kwiatkowska, Yarin Gal

In submission

[arXiv] [BibTex]

Using Bayesian Optimization to Find Asteroids' Pole Directions

Near-Earth asteroids (NEAs) are being discovered much faster than their shapes and other physical properties can be characterized in detail. One of the best ways to spatially resolve NEAs from the ground is with planetary radar observations. Radar echoes can be decoded in round-trip travel time and frequency to produce two-dimensional delay-Doppler images of the asteroid. Given a series of such images acquired over the course of the asteroid's rotation, one can search for the shape and other physical properties that best match the observations. However, reconstructing asteroid shapes from radar data is, like many inverse problems, a computationally intensive task. Shape modeling also requires extensive human oversight to ensure that the fitting process is finding physically reasonable results. In this paper we use Bayesian optimisation for this difficult task.

Sean Marshall, Adam Cobb, Chedy Raïssi, Yarin Gal, Agata Rozek, Michael W. Busch, Grace Young, Riley McGlasson

American Astronomical Society (AAS), 2018

[Citation] [BibTex]

Sufficient Conditions for Idealised Models to Have No Adversarial Examples: a Theoretical and Empirical Study with Bayesian Neural Networks

We prove, under two sufficient conditions, that idealised models can have no adversarial examples. We discuss which idealised models satisfy our conditions, and show that idealised Bayesian neural networks (BNNs) satisfy these. We continue by studying near-idealised BNNs using HMC inference, demonstrating the theoretical ideas in practice. We experiment with HMC on synthetic data derived from MNIST for which we know the ground-truth image density, showing that near-perfect epistemic uncertainty correlates with density under the image manifold, and that adversarial images lie off the manifold in our setting. This suggests why MC dropout, which can be seen as performing approximate inference, has been observed to be an effective defence against adversarial examples in practice. We highlight failure cases of non-idealised BNNs relying on dropout, suggesting a new attack for dropout models and a new defence as well. Lastly, we demonstrate the defence on a cats-vs-dogs image classification task with a VGG13 variant.

Yarin Gal, Lewis Smith

arXiv, 2018

[arXiv] [BibTex]

Differentially private continual learning

Catastrophic forgetting can be a significant problem for institutions that must delete historic data for privacy reasons. For example, hospitals might not be able to retain patient data permanently. But neural networks trained on recent data alone will tend to forget lessons learned on old data. We present a differentially private continual learning framework based on variational inference. We estimate the likelihood of past data given the current model using differentially private generative models of old datasets. The differentially private training has no detrimental impact on our architecture's continual learning performance, and still outperforms the current state-of-the-art non-private continual learning approaches.

Sebastian Farquhar, Yarin Gal

Privacy in Machine Learning and Artificial Intelligence workshop, ICML, 2018

[Paper] [BibTex]

Loss-Calibrated Approximate Inference in Bayesian Neural Networks

Current approaches in approximate inference for Bayesian neural networks minimise the Kullback-Leibler divergence to approximate the true posterior over the weights. However, this approximation is without knowledge of the final application, and therefore cannot guarantee optimal predictions for a given task. To make more suitable task-specific approximations, we introduce a new loss-calibrated evidence lower bound for Bayesian neural networks in the context of supervised learning, informed by Bayesian decision theory. By introducing a lower bound that depends on a utility function, we ensure that our approximation achieves higher utility than traditional methods for applications that have asymmetric utility functions. Furthermore, in using dropout inference, we highlight that our new objective is identical to that of standard dropout neural networks, with an additional utility-dependent penalty term. We demonstrate our new loss-calibrated model with an illustrative medical example and a restricted model capacity experiment, and highlight failure modes of the comparable weighted cross entropy approach. Lastly, we demonstrate the scalability of our method to real world applications with per-pixel semantic segmentation on an autonomous driving data set.

Adam D. Cobb, Stephen J. Roberts, Yarin Gal

Theory of deep learning workshop, ICML, 2018

[arXiv] [Code] [BibTex]
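To illustrate the Bayesian decision theory the loss-calibrated bound is built around, here is a minimal sketch of the final decision step only (not the loss-calibrated ELBO itself): average several stochastic softmax outputs, assumed here to come from MC dropout, and pick the decision with the highest expected utility. The utility matrix and function names are hypothetical.

```python
def mean_probs(mc_probs):
    """Average T stochastic class-probability vectors (e.g. MC dropout samples)."""
    T = len(mc_probs)
    C = len(mc_probs[0])
    return [sum(p[c] for p in mc_probs) / T for c in range(C)]

def bayes_optimal_decision(mc_probs, utility):
    """Return the decision h maximising sum_c utility[h][c] * p(c | x).

    utility[h][c] is the (illustrative) utility of deciding h when the
    true class is c; an asymmetric matrix encodes unequal costs of errors.
    """
    p = mean_probs(mc_probs)
    expected = [sum(u_hc * p_c for u_hc, p_c in zip(row, p)) for row in utility]
    return max(range(len(utility)), key=lambda h: expected[h])
```

With averaged probabilities of about `[0.7, 0.3]` for classes (healthy, sick), an identity utility picks "healthy", while a utility such as `[[1, 0], [0.8, 1]]`, which heavily penalises missing a sick patient, picks "sick". The paper's contribution is to let such a utility shape the approximate posterior during training, rather than only this post hoc step.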

Towards Robust Evaluations of Continual Learning

Continual learning experiments used in current deep learning papers do not faithfully assess fundamental challenges of learning continually, masking weak points of the suggested approaches instead. We study gaps in such existing evaluations, proposing essential experimental evaluations that are more representative of continual learning's challenges, and suggest a re-prioritization of research efforts in the field. We show that current approaches fail with our new evaluations and, to analyse these failures, we propose a variational loss which unifies many existing solutions to continual learning under a Bayesian framing, as either 'prior-focused' or 'likelihood-focused'. We show that while prior-focused approaches such as EWC and VCL perform well on existing evaluations, they perform dramatically worse when compared to likelihood-focused approaches on other simple tasks.

Sebastian Farquhar, Yarin Gal

Lifelong Learning: A Reinforcement Learning Approach workshop, ICML, 2018

[arXiv] [BibTex]

Fast and Scalable Bayesian Deep Learning by Weight-Perturbation in Adam

Uncertainty computation in deep learning is essential to design robust and reliable systems. Variational inference (VI) is a promising approach for such computation, but requires more effort to implement and execute compared to maximum-likelihood methods. In this paper, we propose new natural-gradient algorithms to reduce such efforts for Gaussian mean-field VI. Our algorithms can be implemented within the Adam optimizer by perturbing the network weights during gradient evaluations, and uncertainty estimates can be cheaply obtained by using the vector that adapts the learning rate. This requires lower memory, computation, and implementation effort than existing VI methods, while obtaining uncertainty estimates of comparable quality. Our empirical results confirm this and further suggest that the weight-perturbation in our algorithm could be useful for exploration in reinforcement learning and stochastic optimization.

Mohammad Emtiyaz Khan, Didrik Nielsen, Voot Tangkaratt, Wu Lin, Yarin Gal, Akash Srivastava

ICML, 2018

[Paper] [arXiv] [BibTex]

Understanding Measures of Uncertainty for Adversarial Example Detection

Measuring uncertainty is a promising technique for detecting adversarial examples, crafted inputs on which the model predicts an incorrect class with high confidence. But many measures of uncertainty exist, including predictive entropy and mutual information, each capturing different types of uncertainty. We study these measures, and shed light on why mutual information seems to be effective at the task of adversarial example detection. We highlight failure modes for MC dropout, a widely used approach for estimating uncertainty in deep models. This leads to an improved understanding of the drawbacks of current methods, and a proposal to improve the quality of uncertainty estimates using probabilistic model ensembles. We give illustrative experiments using MNIST to demonstrate the intuition underlying the different measures of uncertainty, as well as experiments on a real world Kaggle dogs vs cats classification dataset.

Lewis Smith, Yarin Gal

UAI, 2018

[Paper] [arXiv] [BibTex]
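The two measures discussed above can be estimated from T stochastic forward passes (for instance MC dropout samples). A minimal sketch: predictive entropy is the entropy of the averaged prediction, and mutual information subtracts the average entropy of the individual samples, leaving only the part due to disagreement between samples. Function names are illustrative.

```python
import math

def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def uncertainty_measures(mc_probs):
    """Return (predictive_entropy, mutual_information) from T sampled softmax vectors."""
    T = len(mc_probs)
    C = len(mc_probs[0])
    mean_p = [sum(p[c] for p in mc_probs) / T for c in range(C)]
    predictive_entropy = entropy(mean_p)
    expected_entropy = sum(entropy(p) for p in mc_probs) / T
    # Mutual information between the prediction and the model parameters.
    mutual_information = predictive_entropy - expected_entropy
    return predictive_entropy, mutual_information
```

Two samples that both say `[0.5, 0.5]` and two confident samples that disagree (`[1, 0]` and `[0, 1]`) have the same predictive entropy, log 2, but mutual information of 0 and log 2 respectively. Only the second case reflects model (epistemic) uncertainty, which is the distinction the abstract draws.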

Using Pre-trained Full-Precision Models to Speed Up Training Binary Networks For Mobile Devices

Binary Neural Networks (BNNs) are well-suited for deploying Deep Neural Networks (DNNs) to small embedded devices but state-of-the-art BNNs need to be trained from scratch. We show how weights from a trained full-precision model can be used to speed up training binary networks. We show that for CIFAR-10, accuracies within 1% of the full-precision model can be achieved in just 5 epochs.

Milad Alizadeh, Nicholas D. Lane, Yarin Gal

16th ACM International Conference on Mobile Systems (MobiSys), 2018

[Abstract] [BibTex]

BRUNO: A Deep Recurrent Model for Exchangeable Data

We present a novel model architecture which leverages deep learning tools to perform exact Bayesian inference on sets of high dimensional, complex observations. Our model is provably exchangeable, meaning that the joint distribution over observations is invariant under permutation: this property lies at the heart of Bayesian inference. The model does not require variational approximations to train, and new samples can be generated conditional on previous samples, with cost linear in the size of the conditioning set. The advantages of our architecture are demonstrated on learning tasks that require generalisation from short observed sequences while modelling sequence variability, such as conditional image generation, few-shot learning, and anomaly detection.

Iryna Korshunova, Jonas Degrave, Ferenc Huszár, Yarin Gal, Arthur Gretton, Joni Dambre

arXiv, 2018

[arXiv] [BibTex]

Automating Asteroid Shape Modeling From Radar Images

Characterizing the shapes and spin states of near-Earth asteroids is essential both for trajectory predictions to rule out potential future Earth impacts and for planning spacecraft missions. But reconstructing objects’ shapes and spins from delay-Doppler data is a computationally intensive inversion problem. We implement a Bayesian optimization routine that uses SHAPE to autonomously search the space of spin-state parameters, yielding spin state constraints within a factor of 3 less computer runtime and minimal human supervision. These routines are now being incorporated into radar data processing pipelines at Arecibo.

Michael W. Busch, Agata Rozek, Sean Marshall, Grace Young, Adam Cobb, Chedy Raissi, Yarin Gal, Lance Benner, Shantanu Naidu, Marina Brozovic, Patrick Taylor

COSPAR (Committee on Space Research) Assembly, 2018

[Program] [Blog Post (Adam Cobb)] [BibTex]

Vprop: Variational Inference using RMSprop

Many computationally-efficient methods for Bayesian deep learning rely on continuous optimization algorithms, but the implementation of these methods requires significant changes to existing code-bases. In this paper, we propose Vprop, a method for variational inference that can be implemented with two minor changes to the off-the-shelf RMSprop optimizer. Vprop also reduces the memory requirements of Black-Box Variational Inference by half. We derive Vprop using the conjugate-computation variational inference method, and establish its connections to Newton’s method, natural-gradient methods, and extended Kalman filters. Overall, this paper presents Vprop as a principled, computationally-efficient, and easy-to-implement method for Bayesian deep learning.

Mohammad Emtiyaz Khan, Zuozhu Liu, Voot Tangkaratt, Yarin Gal

Bayesian Deep Learning workshop, NIPS, 2017

[Paper] [arXiv] [BibTex]

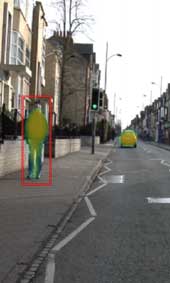

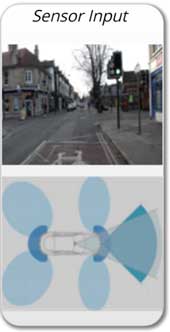

Concrete problems for autonomous vehicle safety: Advantages of Bayesian deep learning

Autonomous vehicle (AV) software is typically composed of a pipeline of individual components, linking sensor inputs to motor outputs. Erroneous component outputs propagate downstream, hence safe AV software must consider the ultimate effect of each component's errors. Further, improving safety alone is not sufficient. Passengers must also feel safe to trust and use AV systems. To address such concerns, we investigate three under-explored themes for AV research: safety, interpretability, and compliance. Safety can be improved by quantifying the uncertainties of component outputs and propagating them forward through the pipeline. Interpretability is concerned with explaining what the AV observes and why it makes the decisions it does, building reassurance with the passenger. Compliance refers to maintaining some control for the passenger.

Rowan McAllister, Yarin Gal, Alex Kendall, Mark van der Wilk, Amar Shah, Roberto Cipolla, Adrian Vivian Weller

IJCAI, 2017

[Paper] [BibTex]

Multi-Task Learning Using Uncertainty to Weigh Losses for Scene Geometry and Semantics

Numerous deep learning applications benefit from multi-task learning with multiple regression and classification objectives. In this paper we make the observation that the performance of such systems is strongly dependent on the relative weighting between each task's loss. Tuning these weights by hand is a difficult and expensive process, making multi-task learning prohibitive in practice. We propose a principled approach to multi-task deep learning which weighs multiple loss functions by considering the homoscedastic uncertainty of each task. This allows us to simultaneously learn various quantities with different units or scales in both classification and regression settings. We demonstrate our model learning per-pixel depth regression, semantic and instance segmentation from a monocular input image. Perhaps surprisingly, we show our model can learn multi-task weightings and outperform separate models trained individually on each task.

Alex Kendall, Yarin Gal, Roberto Cipolla

arXiv, 2017

[arXiv] [Software] [BibTex]

CVPR (Spotlight), 2018

[Paper] [BibTex]
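A minimal sketch of uncertainty-based loss weighting, assuming every task uses the regression form of the objective: each task i gets a learnable `s_i = log(sigma_i^2)`, its loss is scaled by `0.5 * exp(-s_i)`, and `0.5 * s_i` is added so the weights cannot simply shrink to zero. (Classification tasks use a slightly different scaling in the paper.) Names are illustrative.

```python
import math

def multitask_loss(task_losses, log_vars):
    """Combine per-task losses using learned homoscedastic uncertainty.

    task_losses[i]: loss L_i of task i.
    log_vars[i]:    learnable s_i = log(sigma_i^2) for task i.
    Total = sum_i 0.5 * exp(-s_i) * L_i + 0.5 * s_i
    """
    return sum(0.5 * math.exp(-s) * L + 0.5 * s
               for L, s in zip(task_losses, log_vars))

def optimal_log_vars(task_losses):
    """For fixed positive losses, each term is minimised at s_i = log(L_i)."""
    return [math.log(L) for L in task_losses]
```

Setting the derivative `-0.5 * exp(-s) * L + 0.5` to zero gives `sigma^2 = L`, so a task with a larger loss (noisier, or measured in larger units) ends up with a smaller weight `1 / (2 * sigma^2)`. In practice `s_i` would be optimised jointly with the network weights by gradient descent rather than set in closed form.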

Real Time Image Saliency for Black Box Classifiers

In this work we develop a fast saliency detection method that can be applied to any differentiable image classifier. We train a masking model to manipulate the scores of the classifier by masking salient parts of the input image. Our model generalises well to unseen images and requires a single forward pass to perform saliency detection, and is therefore suitable for use in real-time systems. We test our approach on CIFAR-10 and ImageNet datasets and show that the produced saliency maps are easily interpretable, sharp, and free of artifacts. We suggest a new metric for saliency and test our method on the ImageNet object localisation task. We achieve results outperforming other weakly supervised methods.

Piotr Dabkowski, Yarin Gal

arXiv, 2017

[arXiv] [BibTex]

NIPS, 2017

[Paper] [BibTex]

Concrete Dropout

Dropout is used as a practical tool to obtain uncertainty estimates in large vision models and reinforcement learning (RL) tasks. But to obtain well-calibrated uncertainty estimates, a grid-search over the dropout probabilities is necessary, a prohibitive operation with large models and an impossible one with RL. We propose a new dropout variant which gives improved performance and better calibrated uncertainties. Relying on recent developments in Bayesian deep learning, we use a continuous relaxation of dropout's discrete masks. Together with a principled optimisation objective, this allows for automatic tuning of the dropout probability in large models, and as a result faster experimentation cycles. In RL this allows the agent to adapt its uncertainty dynamically as more data is observed. We analyse the proposed variant extensively on a range of tasks, and give insights into common practice in the field where larger dropout probabilities are often used in deeper model layers.

Yarin Gal, Jiri Hron, Alex Kendall

arXiv, 2017

[arXiv] [Software] [BibTex]

NIPS, 2017

[Paper] [BibTex]
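A minimal sketch of the continuous relaxation of a dropout mask, assuming the Concrete (relaxed Bernoulli) form `sigmoid((logit(p) + logit(u)) / t)` with `u ~ Uniform(0, 1)` and a small temperature `t` (0.1 here). Because the mask is a smooth function of `p`, an autodiff framework could differentiate the training objective with respect to the dropout probability; this plain-Python version only shows the sampling. Names and the `eps` guard are illustrative.

```python
import math
import random

def _sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def concrete_keep_mask(n, p, temperature=0.1, rng=random, eps=1e-7):
    """Sample a relaxed dropout keep-mask of length n (values in [0, 1]).

    The "drop" value sigmoid((logit(p) + logit(u)) / temperature) is close
    to 1 with probability about p; the keep-mask is 1 minus that value.
    """
    mask = []
    for _ in range(n):
        u = rng.random()
        drop_logit = (math.log(p + eps) - math.log(1.0 - p + eps)
                      + math.log(u + eps) - math.log(1.0 - u + eps))
        drop = _sigmoid(drop_logit / temperature)
        mask.append(1.0 - drop)
    return mask

def concrete_dropout(x, p, temperature=0.1, rng=random):
    """Apply the relaxed mask to x and rescale by 1/(1-p) as in standard dropout."""
    mask = concrete_keep_mask(len(x), p, temperature, rng)
    return [xi * mi / (1.0 - p) for xi, mi in zip(x, mask)]
```

As the temperature approaches 0 the mask entries approach exactly 0 or 1 and standard Bernoulli dropout is recovered; a moderate temperature keeps the entries close to binary while still giving usable gradients for `p`.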

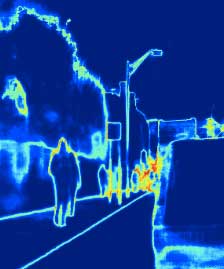

What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?

There are two major types of uncertainty one can model. Aleatoric uncertainty captures noise inherent in the observations. On the other hand, epistemic uncertainty accounts for uncertainty in the model, i.e. uncertainty which can be explained away given enough data. Traditionally it has been difficult to model epistemic uncertainty in computer vision, but with new Bayesian deep learning tools this is now possible. We study the benefits of modeling epistemic vs. aleatoric uncertainty in Bayesian deep learning models for vision tasks. For this we present a Bayesian deep learning framework combining input-dependent aleatoric uncertainty together with epistemic uncertainty. We study models under the framework with per-pixel semantic segmentation and depth regression tasks. Further, our explicit uncertainty formulation leads to new loss functions for these tasks, which can be interpreted as learned attenuation. This makes the loss more robust to noisy data, also giving new state-of-the-art results on segmentation and depth regression benchmarks.

Alex Kendall, Yarin Gal

arXiv, 2017

[arXiv] [BibTex]

NIPS (Spotlight), 2017

[Paper] [BibTex]
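A minimal sketch of the two ingredients for a per-pixel regression task, assuming the network predicts both a value and a log-variance `s_i` per pixel. The loss down-weights residuals where the predicted noise is high (the "learned attenuation"), and at test time the total variance combines the spread of MC dropout samples (epistemic) with the average predicted noise (aleatoric). Function names are illustrative.

```python
import math

def attenuated_regression_loss(y_true, y_pred, log_var):
    """Heteroscedastic (aleatoric) regression loss averaged over D pixels.

    log_var[i] = s_i = log(sigma_i^2), predicted per pixel by the network.
    Loss = (1/D) * sum_i [0.5 * exp(-s_i) * (y_i - yhat_i)^2 + 0.5 * s_i]
    """
    D = len(y_true)
    return sum(0.5 * math.exp(-s) * (y - yh) ** 2 + 0.5 * s
               for y, yh, s in zip(y_true, y_pred, log_var)) / D

def predictive_variance(mc_means, mc_log_vars):
    """Total predictive variance for one pixel from T MC dropout samples.

    epistemic: variance of the sampled means;
    aleatoric: average of the sampled noise variances exp(s_t).
    """
    T = len(mc_means)
    mean = sum(mc_means) / T
    epistemic = sum(m * m for m in mc_means) / T - mean * mean
    aleatoric = sum(math.exp(s) for s in mc_log_vars) / T
    return epistemic + aleatoric
```

For a pixel with a residual of 3, the loss is 4.5 when the model predicts unit variance but drops to about 1.6 when it predicts a variance of 9, which is why the loss is more robust to noisy labels; the `0.5 * s_i` term stops the model from declaring every pixel noisy.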

Dropout Inference in Bayesian Neural Networks with Alpha-divergences

To obtain uncertainty estimates with real-world Bayesian deep learning models, practical inference approximations are needed. Dropout variational inference (VI) for example has been used for machine vision and medical applications, but VI can severely underestimate model uncertainty. Alpha-divergences are alternative divergences to VI’s KL objective, which are able to avoid VI’s uncertainty underestimation. But these are hard to use in practice: existing techniques can only use Gaussian approximating distributions, and require existing models to be changed radically, thus are of limited use for practitioners. We propose a re-parametrisation of the alpha-divergence objectives, deriving a simple inference technique which, together with dropout, can be easily implemented with existing models by simply changing the loss of the model. We demonstrate improved uncertainty estimates and accuracy compared to VI in dropout networks. We study our model’s epistemic uncertainty far away from the data using adversarial images, showing that these can be distinguished from non-adversarial images by examining our model’s uncertainty.

Yingzhen Li, Yarin Gal

ICML, 2017

[Paper] [arXiv] [BibTex]
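A minimal sketch of what "simply changing the loss" can look like, assuming the per-datapoint data term takes the Monte Carlo form `-(1/alpha) * log((1/K) * sum_k p(y | x, omega_k)^alpha)` over K dropout masks `omega_k`; the weight-decay regulariser that accompanies it is omitted. The inputs are the K per-sample negative log-likelihoods, combined with a numerically stable log-sum-exp. Names are illustrative.

```python
import math

def logsumexp(xs):
    """Numerically stable log(sum(exp(x) for x in xs))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))

def alpha_data_term(sample_nlls, alpha):
    """Alpha-divergence data term for one datapoint from K dropout samples.

    sample_nlls[k] = -log p(y | x, omega_k) under dropout mask omega_k.
    Returns -(1/alpha) * log((1/K) * sum_k exp(-alpha * nll_k)).
    """
    K = len(sample_nlls)
    return -(logsumexp([-alpha * l for l in sample_nlls]) - math.log(K)) / alpha
```

As `alpha` approaches 0 this tends to the average per-sample loss (the usual dropout/VI data term), while larger `alpha` weights the samples that explain the data best more heavily, which is one way to see how the objective can counter VI's tendency to underestimate uncertainty.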

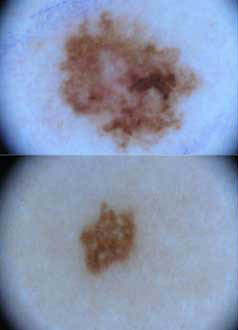

Deep Bayesian Active Learning with Image Data

Even though active learning forms an important pillar of machine learning, deep learning tools are not prevalent within it. Relying on Bayesian approaches to deep learning, in this paper we combine recent advances in Bayesian deep learning into the active learning framework in a practical way. We develop an active learning framework for high dimensional data, a task which has been extremely challenging so far with very sparse existing literature, and demonstrate it in melanoma (skin cancer) diagnosis.

Yarin Gal, Riashat Islam, Zoubin Ghahramani

Bayesian Deep Learning workshop, NIPS, 2016

[PDF] [Poster] [Code] [BibTex]

ICML, 2017

[Paper] [arXiv] [BibTex]

Thesis: Uncertainty in Deep Learning

So I finally submitted my PhD thesis. In it I organised the already published results on how to obtain uncertainty in deep learning, and collected lots of bits and pieces of new research I had lying around (which I hadn't had the time to publish yet).

Yarin Gal

PhD Thesis, 2016

[PDF] [Blog post] [BibTex]

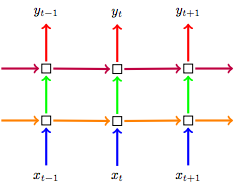

A Theoretically Grounded Application of Dropout in Recurrent Neural Networks

We present a new technique for recurrent neural network regularisation, relying on recent results at the intersection of Bayesian modelling and deep learning. Our RNN dropout variant is theoretically motivated and its effectiveness is demonstrated empirically, with the new approach improving on the single model state-of-the-art in language modelling with the Penn Treebank (73.4 test perplexity). This extends our arsenal of variational tools in deep learning.

Yarin Gal, Zoubin Ghahramani

arXiv, 2015

[arXiv] [Software] [BibTex]

Data-Efficient Machine Learning workshop, ICML, 2016

[Paper] [Poster]

NIPS, 2016

[Paper] [BibTex]
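A minimal sketch of the key difference from naive RNN dropout, using a single-layer tanh RNN rather than an LSTM for brevity: the dropout masks on the inputs and on the recurrent state are sampled once per sequence and reused at every time step, instead of being resampled per step. The RNN form, inverted-dropout scaling and names are assumptions for illustration.

```python
import math
import random

def bernoulli_mask(n, p, rng):
    # Inverted dropout mask: 0 with probability p, else 1/(1-p). Assumes 0 <= p < 1.
    return [0.0 if rng.random() < p else 1.0 / (1.0 - p) for _ in range(n)]

def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def variational_rnn(xs, W_x, W_h, p, rng=random):
    """Run h_t = tanh(W_x (x_t * z_x) + W_h (h_{t-1} * z_h)) over the sequence xs.

    W_x: hidden x input_dim matrix; W_h: hidden x hidden matrix.
    z_x and z_h are sampled ONCE and shared by all time steps.
    """
    hidden = len(W_h)
    z_x = bernoulli_mask(len(xs[0]), p, rng)  # input mask, fixed for the sequence
    z_h = bernoulli_mask(hidden, p, rng)      # recurrent mask, fixed for the sequence
    h = [0.0] * hidden
    states = []
    for x in xs:
        a = matvec(W_x, [xi * zi for xi, zi in zip(x, z_x)])
        b = matvec(W_h, [hi * zi for hi, zi in zip(h, z_h)])
        h = [math.tanh(ai + bi) for ai, bi in zip(a, b)]
        states.append(h)
    return states
```

Sharing the mask across time corresponds to sampling one set of weights per sequence, which is what the variational interpretation calls for; resampling at each step would instead inject fresh noise into the recurrent connections at every step.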

Improving PILCO with Bayesian Neural Network Dynamics Models

We attempt to answer PILCO's shortcomings by replacing its Gaussian process with a Bayesian deep dynamics model, while maintaining the framework’s probabilistic nature and its data-efficiency benefits. This task poses several interesting difficulties. First, we have to handle small data, and neural networks are notorious for their tendency to overfit. Furthermore, we must retain PILCO's ability to capture 1) dynamics model output uncertainty and 2) input uncertainty.

Yarin Gal, Rowan McAllister, Carl E. Rasmussen

Data-Efficient Machine Learning workshop, ICML, 2016

[Paper] [Abstract] [Poster] [BibTex]

On Modern Deep Learning and Variational Inference

Bayesian modelling and variational inference are rooted in Bayesian statistics, and easily benefit from the vast literature in the field. In contrast, deep learning lacks a solid mathematical grounding. Instead, empirical developments in deep learning are often justified by metaphors, evading the unexplained principles at play. In this paper we extend previous results casting modern deep learning models as performing approximate variational inference in a Bayesian setting, and survey open problems to research.

Yarin Gal, Zoubin Ghahramani

Advances in Approximate Bayesian Inference workshop, NIPS, 2015

[PDF] [Poster] [BibTex]

We thank the workshop organisers for the travel award.

Rapid Prototyping of Probabilistic Models: Emerging Challenges in Variational Inference

Perhaps ironically, the deep learning community is far closer to our vision of "automated modelling" than the probabilistic modelling community. Many complex models in deep learning can be easily implemented and tested, while variational inference (VI) techniques require specialised knowledge and long development cycles, making them extremely challenging for non-experts. We discuss a possible solution lifted from manufacturing. Similar ideas in deep learning have led to rapid development in model complexity, speeding up the innovation cycle.

Yarin Gal

Advances in Approximate Bayesian Inference workshop, NIPS, 2015

[PDF] [Poster] [BibTex]

We thank the workshop organisers for the travel award.

Bayesian Convolutional Neural Networks with Bernoulli Approximate Variational Inference

We present an efficient Bayesian convolutional neural network (convnet). The model offers better robustness to over-fitting on small data and achieves a considerable improvement in classification accuracy compared to previous approaches. We give state-of-the-art results on CIFAR-10 following our insights.

Yarin Gal, Zoubin Ghahramani

arXiv, 2015

[arXiv] [Software] [BibTex]

ICLR workshop, 2016

[CMT Reviews] [OpenReview] [BibTex]

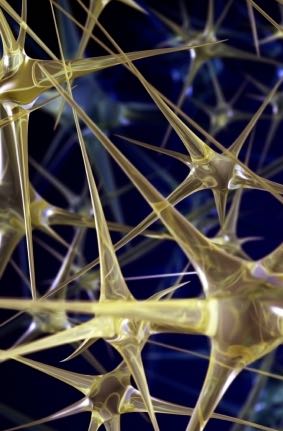

Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning

We show that dropout in multilayer perceptron models (MLPs) can be interpreted as a Bayesian approximation. This lets us model uncertainty with existing dropout MLP models, extracting information that has so far been thrown away. This mitigates the problem of representing uncertainty in deep learning without sacrificing computational performance or test accuracy.

Yarin Gal, Zoubin Ghahramani

arXiv, 2015

[arXiv] [BibTex] [Appendix] [BibTex] [Software]

Invited for presentation at the First Deep Learning Symposium at NIPS 2015.

ICML, 2016

[Paper] [Presentation] [Poster] [BibTex]

We thank ICML for the travel award.
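A minimal sketch of extracting uncertainty from an existing dropout network, assuming a one-hidden-layer ReLU regression MLP with dropout on the hidden units: keep dropout switched on at test time, run T stochastic forward passes, and report the sample mean and variance, optionally adding `1/tau` for a modelled observation-noise precision `tau`. The network shape, parameter layout and names are illustrative.

```python
import random

def dropout_forward(x, W1, b1, w2, b2, p, rng):
    """One stochastic pass of a 1-hidden-layer ReLU MLP with dropout on hidden units."""
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    # Dropout stays ON at test time: keep each unit w.p. 1-p and rescale.
    h = [hi / (1.0 - p) if rng.random() >= p else 0.0 for hi in h]
    return sum(w * hi for w, hi in zip(w2, h)) + b2

def mc_dropout_predict(x, params, p=0.5, T=100, tau=None, rng=random):
    """Return (predictive mean, predictive variance) from T dropout passes.

    params = (W1, b1, w2, b2); tau is an optional observation-noise precision.
    """
    samples = [dropout_forward(x, *params, p, rng) for _ in range(T)]
    mean = sum(samples) / T
    var = sum(s * s for s in samples) / T - mean * mean
    if tau is not None:
        var += 1.0 / tau
    return mean, var
```

Usage: with `params = ([[1.0], [2.0]], [0.0, 0.0], [1.0, 1.0], 0.0)`, calling `mc_dropout_predict([1.0], params, p=0.5, T=1000)` returns a mean near 3 and a clearly positive variance, while `p=0.0` collapses to a single deterministic prediction with zero variance. No retraining is needed; only the test-time procedure changes.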

Dropout as a Bayesian Approximation: Insights and Applications

Deep learning techniques lack the ability to reason about uncertainty over the features. We show that a multilayer perceptron (MLP) with arbitrary depth and non-linearities, with dropout applied after every weight layer, is mathematically equivalent to an approximation to a well known Bayesian model. This paper is a short version of the appendix of "Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning".

Yarin Gal, Zoubin Ghahramani

Deep Learning Workshop, ICML, 2015

[PDF] [Poster] [BibTex]

An Infinite Product of Sparse Chinese Restaurant Processes

We define a new process that gives a natural generalisation of the Indian buffet process (used for binary feature allocation) into categorical latent features. For this we take advantage of different limit parametrisations of the Dirichlet process and its generalisation the Pitman–Yor process.

Yarin Gal, Tomoharu Iwata, Zoubin Ghahramani

10th Conference on Bayesian Nonparametrics (BNP), 2015

[Presentation] [BibTex]

We thank BNP for the travel award.

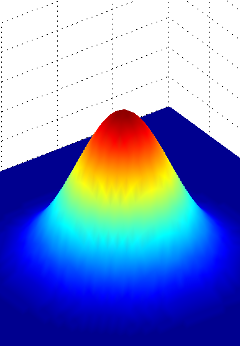

Improving the Gaussian Process Sparse Spectrum Approximation by Representing Uncertainty in Frequency Inputs

Standard sparse pseudo-input approximations to the Gaussian process (GP) cannot handle complex functions well. Sparse spectrum alternatives attempt to answer this but are known to over-fit. We use variational inference for the sparse spectrum approximation to avoid both issues. We extend the approximate inference to the distributed and stochastic domains.

Yarin Gal, Richard Turner

ICML, 2015

[PDF] [Presentation] [Poster] [Software] [BibTex]
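For context, a minimal sketch of the basic sparse spectrum approximation that the paper starts from (not its variational treatment of the frequencies), in one dimension: a squared-exponential kernel is replaced by an average of cosines at M frequencies drawn from the kernel's spectral density, a Gaussian with standard deviation `1/lengthscale`. Equivalently, inputs are mapped to 2M sine/cosine features, so regression costs scale with M rather than with the number of data points. Names are illustrative.

```python
import math
import random

def se_kernel(x, y, lengthscale=1.0, variance=1.0):
    """Exact squared-exponential kernel in 1-D."""
    return variance * math.exp(-0.5 * (x - y) ** 2 / lengthscale ** 2)

def sample_frequencies(M, lengthscale=1.0, rng=random):
    """Draw M frequencies from the SE kernel's spectral density N(0, 1/lengthscale^2)."""
    return [rng.gauss(0.0, 1.0 / lengthscale) for _ in range(M)]

def sparse_spectrum_kernel(x, y, freqs, variance=1.0):
    """Approximation k(x, y) ~ variance/M * sum_m cos(w_m * (x - y))."""
    return variance * sum(math.cos(w * (x - y)) for w in freqs) / len(freqs)

def sparse_spectrum_features(x, freqs, variance=1.0):
    """Feature map whose inner products reproduce sparse_spectrum_kernel."""
    scale = math.sqrt(variance / len(freqs))
    return ([scale * math.cos(w * x) for w in freqs]
            + [scale * math.sin(w * x) for w in freqs])
```

With many random frequencies the approximation approaches the exact kernel; with few frequencies whose values are optimised, as in the original sparse spectrum GP, the model gains flexibility but becomes prone to the over-fitting that the variational treatment of the frequency inputs is meant to address.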

Latent Gaussian Processes for Distribution Estimation of Multivariate Categorical Data

Multivariate categorical data occur in many applications of machine learning. One of the main difficulties with these vectors of categorical variables is sparsity. The number of possible observations grows exponentially with vector length, but dataset diversity might be poor in comparison. Recent models have gained significant improvement in supervised tasks with this data. These models embed observations in a continuous space to capture similarities between them. Building on these ideas we propose a Bayesian model for the unsupervised task of distribution estimation of multivariate categorical data.

Yarin Gal, Yutian Chen, Zoubin Ghahramani

Workshop on Advances in Variational Inference, NIPS, 2014

[PDF] [Poster] [Presentation] [BibTex]

ICML, 2015

[PDF] [Presentation] [Poster] [Software] [BibTex]

We thank Google DeepMind for the travel award.

Distributed Variational Inference in Sparse Gaussian Process Regression and Latent Variable Models

We develop parallel inference for sparse Gaussian process regression and latent variable models. These processes are used to model functions in a principled way and for non-linear dimensionality reduction in linear time complexity. Using parallel inference we allow the models to work on much larger datasets than before.

Yarin Gal, Mark van der Wilk, Carl E. Rasmussen

Workshop on New Learning Models and Frameworks for Big Data, ICML, 2014

[arXiv] [Presentation] [Software] [BibTex]

NIPS, 2014

[PDF] [BibTex]

We thank NIPS for the travel award.

Feature Partitions and Multi-View Clusterings

We define a new combinatorial structure that unifies Kingman's random partitions and Broderick, Pitman, and Jordan's feature frequency models. This structure underlies non-parametric multi-view clustering models, where data points are simultaneously clustered into different possible clusterings. The de Finetti measure is a product of paintbox constructions. Studying the properties of feature partitions allows us to understand the relations between the models they underlie and share algorithmic insights between them.

Yarin Gal, Zoubin Ghahramani

International Society for Bayesian Analysis (ISBA), 2014

[Link] [Poster]

We thank ISBA for the travel award.

Dirichlet Fragmentation Processes

We introduce a new class of models over trees based on the theory of fragmentation processes. The Dirichlet Fragmentation Process Mixture Model is an example model derived from this new class. This model has efficient and simple inference, and significantly outperforms existing approaches for hierarchical clustering and density modelling.

Hong Ge, Yarin Gal, Zoubin Ghahramani

In submission, 2014

[PDF] [BibTex]

Pitfalls in the use of Parallel Inference for the Dirichlet Process

We show that the recently suggested parallel inference for the Dirichlet process is conceptually invalid. The Dirichlet process is important for many fields such as natural language processing. However, the suggested inference would not work in most real-world applications.

Yarin Gal, Zoubin Ghahramani

Workshop on Big Learning, NIPS, 2013

[PDF] [Presentation] [BibTex]

ICML, 2014

[PDF] [Talk] [Presentation] [Poster] [BibTex]

Variational Inference in the Gaussian Process Latent Variable Model and Sparse GP Regression – a Gentle Tutorial

We present an in-depth and self-contained tutorial for sparse Gaussian Process (GP) regression. We also explain GP latent variable models, a tool for non-linear dimensionality reduction. The sparse approximation reduces the time complexity of the models from cubic to linear but its development is scattered across the literature. The various results are collected here.

Yarin Gal, Mark van der Wilk

Tutorial, 2014

[arXiv] [BibTex]

Semantics, Modelling, and the Problem of Representation of Meaning – a Brief Survey of Recent Literature

Over the past 50 years many have debated what representation should be used to capture the meaning of natural language utterances. Recently, research has raised new requirements for such representations. Here I survey some of the interesting representations suggested to address these new requirements.

Yarin Gal

Literature survey, 2013

[arXiv] [BibTex]

A Systematic Bayesian Treatment of the IBM Alignment Models

We used a non-parametric process, the hierarchical Pitman–Yor process, in models that align words between pairs of sentences. These alignment models are used at the core of all machine translation systems. We obtained a significant improvement in translation using the process.

Yarin Gal, Phil Blunsom

Association for Computational Linguistics (NA-ACL), 2013

[PDF] [Presentation] [BibTex]

Relaxing HMM Alignment Model Assumptions for Machine Translation Using a Bayesian Approach

We used a non-parametric process, the hierarchical Pitman–Yor process, to relax some of the restricting assumptions often used in machine translation. When a long history of word alignments is not available, the process falls back onto shorter histories in a principled way.

Yarin Gal

Master's Dissertation, 2012

[PDF] [BibTex]

Overcoming Alpha-Beta Limitations Using Evolved Artificial Neural Networks

We trained a feed-forward neural network to play checkers. The network acts as both the value function for a min-max algorithm and a heuristic for pruning tree branches in a reinforcement learning setting. We used no supervised signal for training: a set of networks was assessed by playing against each other, and the winning networks' weights were adapted following the ES algorithm.

Yarin Gal, Mireille Avigal

Machine Learning and Applications (IEEE), 2010

[Paper] [BibTex]