Blog

Machine learning blog. And such.

Towards a Science of AI Evaluations

In 2015 the US Environmental Protection Agency (EPA) found that when Volkswagen cars were tested for pollutants under lab conditions, the cars switched into a "do not pollute" mode [1]. In this mode, the engine artificially reduced the amount of pollutants it produced. The reduction held only during tests, though. Once on the road, the engines switched back to normal mode, emitting nitrogen oxide pollutants at up to 40 times the US legal limit. ...

Uncertainty in Deep Learning (PhD Thesis)

So I finally submitted my PhD thesis, which collects already-published results on how to obtain uncertainty in deep learning, along with lots of bits and pieces of new research I had lying around...

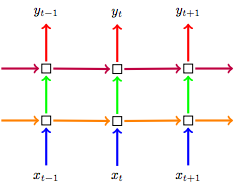

Code and discussion for the paper "A Theoretically Grounded Application of Dropout in Recurrent Neural Networks"

These are just some comments and updates on the code from the paper.

Homoscedastic and heteroscedastic regression with dropout uncertainty

During a talk I gave at Google recently, I was asked about a peculiar behaviour of the uncertainty estimates we get from dropout networks.

The Science of Deep Learning

I've decided to play with a new idea – encouraging an interactive discussion to try to support or falsify a hypothesis in deep learning. This followed some ideas I've had about the interaction between theoretical and experimental research approaches in our field. This post hosts the discussion board for the paper "A Theoretically Grounded Application of Dropout in Recurrent Neural Networks", in case you have any comments.

What my deep model doesn't know...

I recently spent some time trying to understand why dropout deep learning models work so well – trying to relate them to new research from the last couple of years. I was quite surprised to see how close these models are to Gaussian processes. I was even more surprised to see that we can get uncertainty information from these deep learning models for free – without changing a thing.