Talks

Bayesian Deep Learning

Bayesian models are rooted in Bayesian statistics and easily benefit from the vast literature in the field. In contrast, deep learning lacks a solid mathematical grounding. Instead, empirical developments in deep learning are often justified by metaphors, evading the unexplained principles at play. These two fields are perceived as fairly antipodal to each other in their respective communities. It is perhaps astonishing then that most modern deep learning models can be cast as performing approximate inference in a Bayesian setting. The implications of this are profound: we can use the rich Bayesian statistics literature with deep learning models, explain away many of the curiosities with this technique, combine results from deep learning into Bayesian modeling, and much more.

Yarin Gal

In this talk I will review a new theory linking Bayesian modeling and deep learning and demonstrate the practical impact of the framework with a range of real-world applications. I will also explore open problems for future research, problems that stand at the forefront of this new and exciting field.

Invited talk: NASA Frontier Development Lab, 2017

Invited talk: O'Reilly Artificial Intelligence in New York, 2017

Invited talk: Robotics: Science and Systems (RSS 2017), New Frontiers for Deep Learning in Robotics workshop

[Presentation]

Modern Deep Learning through Bayesian Eyes

Bayesian models are rooted in Bayesian statistics and easily benefit from the vast literature in the field. In contrast, deep learning lacks a solid mathematical grounding. Instead, empirical developments in deep learning are often justified by metaphors, evading the unexplained principles at play. These two fields are perceived as fairly antipodal to each other in their respective communities. It is perhaps astonishing then that most modern deep learning models can be cast as performing approximate inference in a Bayesian setting. The implications of this statement are profound: we can use the rich Bayesian statistics literature with deep learning models, explain away many of the curiosities with this insight, combine results from deep learning into Bayesian modelling, and much more.

Yarin Gal

Invited talk: "Computational Statistics and Machine Learning" seminar series, UCL, 2015

Invited talk: ETH, Zurich, 2015

Invited talk: "Alan Turing Institute Deep Learning Scoping Workshop", Edinburgh, 2015

Invited talk: "Microsoft Research Cambridge" public talks series, Cambridge, 2015

Invited talk: Google, Mountain View, 2016

Invited talk: Natural Language and Information Processing seminar series, Cambridge, 2016

Invited talk: OpenAI, San Francisco, 2016

Invited talk: London Machine Learning Meetup, London, 2016

[Presentation] [Video]

Deep Learning 101 — a Hands-on Tutorial

An introductory hands-on tutorial on deep learning, with practical elements using Theano and Keras, given at the NASA Frontier Development Lab.

Yarin Gal

Invited talk: NASA Frontier Development Lab, 2016

[Presentation]

Dropout as a Bayesian Approximation

We show that dropout in multilayer perceptron models (MLPs) can be interpreted as a Bayesian approximation. We obtain results for modelling uncertainty in dropout MLP models, extracting information from existing models that has so far been thrown away. This mitigates the problem of representing uncertainty in deep learning without sacrificing computational performance or test accuracy.

Yarin Gal

Invited talk: 33rd International Conference on Machine Learning, 2016

[Presentation]
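A minimal NumPy sketch of the idea, assuming a hypothetical one-hidden-layer ReLU network with random weights standing in for a trained dropout MLP: dropout is kept switched on at test time, and the spread over T stochastic forward passes gives a predictive mean and variance. The names `stochastic_forward`, `mc_dropout_predict`, `p_drop` and `T` are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical weights for a 1-50-1 ReLU MLP; in practice these come from a
# network that was trained with dropout.
W1 = rng.normal(size=(1, 50))
b1 = rng.normal(size=50)
W2 = rng.normal(size=(50, 1)) / np.sqrt(50)
p_drop = 0.5


def stochastic_forward(x, rng):
    """One forward pass with dropout still switched on (test-time dropout)."""
    h = np.maximum(0.0, x @ W1 + b1)           # hidden ReLU layer, shape [N, 50]
    keep = rng.random(h.shape) >= p_drop       # Bernoulli keep-mask
    return (h * keep / (1.0 - p_drop)) @ W2    # inverted-dropout scaling


def mc_dropout_predict(x, T=200, rng=rng):
    """Predictive mean and variance from T stochastic forward passes."""
    samples = np.stack([stochastic_forward(x, rng) for _ in range(T)])  # [T, N, 1]
    return samples.mean(axis=0), samples.var(axis=0)


x_test = np.linspace(-3.0, 3.0, 5).reshape(-1, 1)
mean, var = mc_dropout_predict(x_test)
```

Training is unchanged; the cost is T forward passes per prediction. Note that `var` here only reflects the spread across dropout masks; the paper's predictive variance also adds an inverse model-precision term, which this sketch omits.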

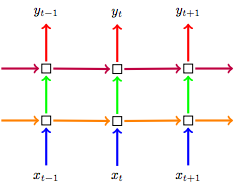

Dropout in RNNs Following a VI Interpretation

A long strand of empirical research has claimed that dropout cannot be applied between the recurrent connections of a recurrent neural network (RNN). In this paper we show that a recently developed theoretical framework, casting dropout as approximate Bayesian inference, can give us mathematically grounded tools to apply dropout within such recurrent layers.

Yarin Gal

Invited talk: "Alan Turing Institute Deep Generative Models Workshop", Royal Society, London, 2016

[Presentation]
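The practical difference can be sketched as follows, assuming a plain tanh RNN in NumPy (all names are hypothetical): under the variational interpretation, one dropout mask for the inputs and one for the recurrent state are sampled per sequence and reused at every time step, rather than resampled at each step.

```python
import numpy as np


def rnn_forward(xs, Wx, Wh, p_drop, rng, tied_masks=True):
    """Run a tanh RNN over xs (shape [T, d_in]) with dropout on inputs and hidden state.

    tied_masks=True: one input mask and one recurrent mask are sampled per sequence
    and reused at every time step (the variational reading of dropout).
    tied_masks=False: masks are resampled at every step, for comparison.
    Returns the final hidden state and the recurrent masks used at each step.
    """
    T, d_in = xs.shape
    d_h = Wh.shape[0]
    keep = 1.0 - p_drop

    def sample_mask(size):
        return (rng.random(size) < keep) / keep    # inverted-dropout scaling

    mx, mh = sample_mask(d_in), sample_mask(d_h)
    h = np.zeros(d_h)
    used = []
    for t in range(T):
        if not tied_masks:
            mx, mh = sample_mask(d_in), sample_mask(d_h)
        used.append(mh)
        h = np.tanh((xs[t] * mx) @ Wx + (h * mh) @ Wh)
    return h, np.array(used)


rng = np.random.default_rng(0)
d_in, d_h, T = 3, 16, 10
Wx = rng.normal(scale=0.5, size=(d_in, d_h))
Wh = rng.normal(scale=0.5, size=(d_h, d_h))
xs = rng.normal(size=(T, d_in))
h_tied, masks_tied = rnn_forward(xs, Wx, Wh, p_drop=0.25, rng=rng, tied_masks=True)
h_untied, masks_untied = rnn_forward(xs, Wx, Wh, p_drop=0.25, rng=rng, tied_masks=False)
```

With tied masks, every row of `masks_tied` is identical, so the same recurrent units are dropped throughout the sequence.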

What my deep model doesn’t know...

If you give me pictures of various dog breeds and then ask me to classify a new dog photo, I should return a prediction with rather high confidence. But if you give me a photo of a cat and force my hand to decide which dog breed it belongs to, I had better return a prediction with very low confidence. Similar problems occur in medicine as well: you would not want to falsely diagnose a patient just because your model is uncertain about its output. Even though deep learning tools have achieved tremendous success in applied machine learning, these tools do not capture this kind of information. In this talk I will explore a new theoretical framework casting dropout training in neural networks as approximate Bayesian inference. A direct result of this theory is a set of tools to model uncertainty with dropout networks, extracting information from existing models that has so far been thrown away. The practical impact of the framework has already been demonstrated in applications as diverse as deep reinforcement learning, camera localisation, and predicting DNA methylation, applications which I will survey towards the end of the talk.

Yarin Gal

Invited talk: "Alan Turing Institute Deep Learning Open Workshop", Edinburgh, 2015

Invited talk: Adaptive Brain Lab, Department of Psychology, Cambridge, 2016

Invited talk: MRC Cognition and Brain Science Unit, Cambridge, 2016

[Presentation]

Bayesian Convolutional Neural Networks with Bernoulli Approximate Variational Inference

We offer insights into why recent state-of-the-art models in image processing work so well. We start with an approximation of a well-known Bayesian nonparametric (BNP) model and, through a (beautiful) derivation, obtain insights into getting state-of-the-art results on the CIFAR-10 dataset.

Yarin Gal

Invited talk: ``Bayesian Nonparametrics in the North'' workshop, Ecole Centrale de Lille, 2015

[Presentation]

Representations of Meaning

We discuss various formal representations of meaning, including Gentzen sequent calculus, vector spaces over the real numbers, and symmetric closed monoidal categories.

Yarin Gal

Invited talk: Trinity College Mathematical Society, University of Cambridge, 2015

[Presentation]

Latent Gaussian Processes for Distribution Estimation of Multivariate Categorical Data

We discuss the issues with representing high-dimensional vectors of discrete variables, and existing models that attempt to estimate the distribution of such vectors. We then present our approach, which relies on a continuous latent representation for the discrete data.

Yarin Gal

Workshop on Advances in Variational Inference, NIPS, 2014

32nd International Conference on Machine Learning, 2015

Invited talk: Microsoft Research, Cambridge, 2015

Invited talk: NTT Labs, Kyoto, Japan, 2015

[Presentation] [Video — Microsoft] [Video — ICML]

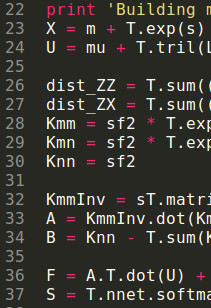

Improving the Gaussian Process Sparse Spectrum Approximation by Representing Uncertainty in Frequency Inputs

Standard sparse pseudo-input approximations to the Gaussian process (GP) cannot handle complex functions well. Sparse spectrum alternatives attempt to answer this but are known to over-fit. We use variational inference for the sparse spectrum approximation to avoid both issues. We extend the approximate inference to the distributed and stochastic domains.

Yarin Gal

32nd International Conference on Machine Learning, 2015

[Presentation] [Video]
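For reference, a sketch of the plain sparse spectrum construction the talk starts from, assuming a 1-D squared exponential kernel: frequencies are drawn from the kernel's spectral density, and paired cosine and sine features give a finite-rank approximation of the kernel. This is not the variational treatment from the talk; in the standard sparse spectrum GP the frequencies are instead optimised, which is where over-fitting enters. Function names and settings are illustrative.

```python
import numpy as np


def se_kernel(x1, x2, lengthscale=1.0, variance=1.0):
    """Exact squared exponential kernel matrix for 1-D inputs."""
    d = x1[:, None] - x2[None, :]
    return variance * np.exp(-0.5 * (d / lengthscale) ** 2)


def sparse_spectrum_features(x, omegas, variance=1.0):
    """Trigonometric features whose inner products approximate the SE kernel.

    cos(a)cos(b) + sin(a)sin(b) = cos(a - b), so Phi @ Phi.T averages
    variance * cos(omega * (x - x')) over the sampled frequencies.
    """
    K = omegas.shape[0]
    proj = x[:, None] * omegas[None, :]                        # [N, K]
    return np.sqrt(variance / K) * np.hstack([np.cos(proj), np.sin(proj)])


rng = np.random.default_rng(0)
lengthscale = 0.7
# Frequencies from the SE kernel's spectral density, N(0, 1 / lengthscale^2).
omegas = rng.normal(scale=1.0 / lengthscale, size=20000)
x = np.linspace(-2.0, 2.0, 25)
Phi = sparse_spectrum_features(x, omegas)
K_approx = Phi @ Phi.T
K_exact = se_kernel(x, x, lengthscale)
```

With a handful of frequencies instead of thousands, the approximation is cheap but coarse, which is why how the frequencies are chosen or inferred matters.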

An Infinite Product of Sparse Chinese Restaurant Processes

We define a new process that gives a natural generalisation of the Indian buffet process (used for binary feature allocation) into categorical latent features. For this we take advantage of different limit parametrisations of the Dirichlet process and its generalisation, the Pitman–Yor process.

Yarin Gal

10th Conference on Bayesian Nonparametrics (BNP), 2015

[Presentation]
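One building block here is the Pitman–Yor generalisation of the Chinese restaurant process. A minimal sampler of its seating rule, assuming concentration `alpha > 0` and discount `0 <= discount < 1` (with `discount = 0` it reduces to the Dirichlet process CRP); the infinite product construction from the talk is not shown, and the function name is hypothetical.

```python
import numpy as np


def pitman_yor_crp(n_customers, alpha, discount, rng):
    """Seat customers one at a time under the Pitman-Yor Chinese restaurant process.

    With n customers already seated at K tables (table k holding n_k of them),
    the next customer joins table k with probability (n_k - discount) / (n + alpha)
    and opens a new table with probability (alpha + discount * K) / (n + alpha).
    """
    if not (0.0 <= discount < 1.0 and alpha > 0.0):
        raise ValueError("this sketch assumes alpha > 0 and 0 <= discount < 1")
    counts = []        # number of customers at each table
    assignments = []   # table index chosen by each customer
    for n in range(n_customers):
        K = len(counts)
        weights = np.array([c - discount for c in counts] + [alpha + discount * K])
        table = rng.choice(K + 1, p=weights / (n + alpha))
        if table == K:
            counts.append(1)   # open a new table
        else:
            counts[table] += 1
        assignments.append(int(table))
    return np.array(assignments), counts


rng = np.random.default_rng(0)
a_dp, c_dp = pitman_yor_crp(500, alpha=1.0, discount=0.0, rng=rng)   # Dirichlet process
a_py, c_py = pitman_yor_crp(500, alpha=1.0, discount=0.5, rng=rng)   # Pitman-Yor
```

A positive discount makes new tables more likely as more tables open, so the number of tables grows much faster than under the Dirichlet process.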

Distributed Variational Inference in Sparse Gaussian Process Regression and Latent Variable Models

We develop parallel inference for sparse Gaussian process regression and latent variable models. These models offer a principled way to model functions and to perform non-linear dimensionality reduction, in time linear in the number of data points. Parallel inference allows the models to work on much larger datasets than before.

Yarin Gal

Workshop on New Learning Models and Frameworks for Big Data, ICML, 2014

[Presentation]
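Parallel inference is possible because, given inducing inputs Z, the data enter the sparse GP only through sums over data points. A minimal map-reduce sketch for the regression case, assuming an RBF kernel and the standard collapsed (Titsias-style) predictive mean; the helper names, kernel settings and four-way data split are hypothetical. Only the sums `A = Kmn Kmn^T` and `b = Kmn y` are shown; the bound itself also needs N, the sum of y squared and the sum of k(x_n, x_n), which are sums as well.

```python
import numpy as np


def rbf(a, b, lengthscale=0.5):
    """Squared exponential kernel matrix between 1-D input arrays a and b."""
    return np.exp(-0.5 * ((a[:, None] - b[None, :]) / lengthscale) ** 2)


def shard_statistics(x_shard, y_shard, Z):
    """Map step (hypothetical helper): per-shard sums Kmn Kmn^T and Kmn y."""
    Kmn = rbf(Z, x_shard)                    # [m, n_shard]
    return Kmn @ Kmn.T, Kmn @ y_shard


def reduce_statistics(stats):
    """Reduce step: the statistics are sums over data points, so shard results add."""
    A = sum(s[0] for s in stats)
    b = sum(s[1] for s in stats)
    return A, b


def predictive_mean(x_star, Z, A, b, noise_var):
    """Sparse GP predictive mean: K*m (Kmm + A / s2)^-1 b / s2."""
    Kmm = rbf(Z, Z) + 1e-8 * np.eye(len(Z))     # small jitter for stability
    Sigma = Kmm + A / noise_var
    return rbf(x_star, Z) @ np.linalg.solve(Sigma, b) / noise_var


rng = np.random.default_rng(0)
x = rng.uniform(-3.0, 3.0, size=1000)
y = np.sin(x) + 0.1 * rng.normal(size=1000)
Z = np.linspace(-3.0, 3.0, 15)               # inducing inputs
noise_var = 0.01
x_star = np.linspace(-2.5, 2.5, 7)

# "Workers": each computes statistics on its own quarter of the data.
shards = np.array_split(np.arange(len(x)), 4)
A, b = reduce_statistics([shard_statistics(x[i], y[i], Z) for i in shards])
mu_distributed = predictive_mean(x_star, Z, A, b, noise_var)

# Single-machine reference on the full dataset.
A_full, b_full = shard_statistics(x, y, Z)
mu_single = predictive_mean(x_star, Z, A_full, b_full, noise_var)
```

Each worker only touches its own shard, and the reducer handles m-by-m and m-sized arrays whose size does not depend on the number of data points.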

Pitfalls in the use of Parallel Inference for the Dirichlet Process

We show that the recently suggested parallel inference for the Dirichlet process is conceptually invalid. The Dirichlet process is important in many fields, such as natural language processing. However, the suggested inference would not work in most real-world applications.

Yarin Gal

Workshop on Big Learning, NIPS, 2013

31st International Conference on Machine Learning, 2014

[Presentation]

Symbolic Differentiation for Rapid Model Prototyping in Machine Learning and Data Analysis – a Hands-on Tutorial

We talk about the theory of symbolic differentiation and demonstrate its use through the Theano Python package. We give two example models, logistic regression and a deep net, and then discuss rapid prototyping of probabilistic models with stochastic variational inference (SVI). The talk is based in part on the Theano online tutorial.

Yarin Gal

MLG Seminar, 2014

[Presentation]
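To make the theory concrete without depending on Theano, here is a toy symbolic differentiator in plain Python (hypothetical classes, not Theano's API): expressions are trees, and `diff` applies the sum, product and chain rules recursively to return a new expression rather than a number. It is applied to the single-example logistic regression loss log(1 + exp(-y w x)). Unlike Theano, it does no graph simplification or compilation.

```python
import math
from dataclasses import dataclass


class Expr:
    """Base class: operators build new expression trees instead of computing numbers."""
    def __add__(self, other): return Add(self, wrap(other))
    def __radd__(self, other): return Add(wrap(other), self)
    def __mul__(self, other): return Mul(self, wrap(other))
    def __rmul__(self, other): return Mul(wrap(other), self)
    def __neg__(self): return Mul(Const(-1.0), self)


def wrap(v):
    """Turn plain numbers into constant nodes."""
    return v if isinstance(v, Expr) else Const(float(v))


@dataclass(frozen=True)
class Const(Expr):
    value: float
    def eval(self, env): return self.value
    def diff(self, var): return Const(0.0)


@dataclass(frozen=True)
class Var(Expr):
    name: str
    def eval(self, env): return env[self.name]
    def diff(self, var): return Const(1.0 if var == self.name else 0.0)


@dataclass(frozen=True)
class Add(Expr):
    a: Expr
    b: Expr
    def eval(self, env): return self.a.eval(env) + self.b.eval(env)
    def diff(self, var): return self.a.diff(var) + self.b.diff(var)   # sum rule


@dataclass(frozen=True)
class Mul(Expr):
    a: Expr
    b: Expr
    def eval(self, env): return self.a.eval(env) * self.b.eval(env)
    def diff(self, var):                                               # product rule
        return self.a.diff(var) * self.b + self.a * self.b.diff(var)


@dataclass(frozen=True)
class Exp(Expr):
    a: Expr
    def eval(self, env): return math.exp(self.a.eval(env))
    def diff(self, var): return self * self.a.diff(var)                # chain rule


@dataclass(frozen=True)
class Recip(Expr):
    a: Expr
    def eval(self, env): return 1.0 / self.a.eval(env)
    def diff(self, var): return -(self.a.diff(var) * Recip(self.a * self.a))  # -a'/a^2


@dataclass(frozen=True)
class Log(Expr):
    a: Expr
    def eval(self, env): return math.log(self.a.eval(env))
    def diff(self, var): return self.a.diff(var) * Recip(self.a)       # a'/a


# Logistic regression loss for one example (x, y), label y in {-1, +1}.
x_val, y_val = 2.0, 1.0
w = Var("w")
loss = Log(1 + Exp(Const(-y_val * x_val) * w))
grad = loss.diff("w")        # a new expression tree, evaluated only on demand
```

Evaluating `grad.eval({"w": 0.5})` should match the hand-derived gradient -y x / (1 + exp(y w x)).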

Rapid Prototyping of Probabilistic Models using Stochastic Variational Inference

In data analysis we often have to develop new models, which can be a lengthy process: we need to derive appropriate inference, which often involves a cumbersome implementation that changes regularly. Rapid prototyping answers similar problems in manufacturing, where it is used for quick fabrication of scale models of physical parts. We present Stochastic Variational Inference (SVI) as a tool for rapid prototyping of probabilistic models.

Yarin Gal

Short talk, 2014

[Presentation]
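One concrete instance, assuming the conjugate natural-gradient form of SVI and a toy model chosen so the answer can be checked (a Gaussian mean with unit-variance observations and a standard normal prior): each step rescales one minibatch's sufficient statistics to the full dataset and moves the variational natural parameters a decreasing step towards that estimate. The function name and the step-size settings `tau` and `kappa` are illustrative.

```python
import numpy as np


def svi_gaussian_mean(x, batch_size=50, n_steps=500, tau=1.0, kappa=0.7, seed=0):
    """SVI for the toy model mu ~ N(0, 1), x_i ~ N(mu, 1), with q(mu) Gaussian.

    q(mu) is stored by its natural parameters (precision * mean, precision).
    Each step rescales one minibatch's sufficient statistics to the full data
    size and moves a step rho_t towards the resulting estimate, which for this
    conjugate model is a natural-gradient step on the ELBO.
    """
    rng = np.random.default_rng(seed)
    N = len(x)
    prior = np.array([0.0, 1.0])                 # natural parameters of N(0, 1)
    eta = prior.copy()
    for t in range(1, n_steps + 1):
        batch = x[rng.choice(N, size=batch_size, replace=False)]
        stats = np.array([batch.sum(), batch_size])   # sum of x_i, number of points
        eta_hat = prior + (N / batch_size) * stats
        rho = (t + tau) ** (-kappa)              # decreasing Robbins-Monro step size
        eta = (1.0 - rho) * eta + rho * eta_hat
    precision = eta[1]
    return eta[0] / precision, 1.0 / precision


data = np.random.default_rng(1).normal(loc=2.0, scale=1.0, size=1000)
post_mean, post_var = svi_gaussian_mean(data)

# Exact conjugate posterior, for comparison: precision N + 1, mean sum(x) / (N + 1).
exact_mean = data.sum() / (len(data) + 1)
exact_var = 1.0 / (len(data) + 1)
```

For other conjugate models, the loop stays the same and mostly the prior and sufficient-statistics lines change.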

Distributed Inference in Bayesian Nonparametrics – the Dirichlet Process and the Gaussian Process

I present distributed inference methodologies for two major processes in Bayesian nonparametrics. Pitfalls in the use of parallel inference for the Dirichlet process are discussed, and distributed variational inference in sparse Gaussian process regression and latent variable models is presented.

Yarin Gal

Invited talk: NTT Labs, Kyoto, Japan, 2014

Emergent Communication for Collaborative Reinforcement Learning

Slides from a seminar introducing collaborative reinforcement learning and how learning communication can improve collaboration. We use game theory to motivate the use of collaboration in a multi-agent setting. We then define multi-agent and decentralised multi-agent Markov decision processes. We discuss issues with these definitions and possible ways to overcome them. We then transition to emergent languages. We explain how the use of an emergent communication protocol could aid in collaborative reinforcement learning. Reviewing a range of emergent communication models developed from a linguistic motivation to a pragmatic view, we finish with an assessment of the problems left unanswered in the field.

Yarin Gal, Rowan McAllister

MLG Seminar, 2014

[Presentation]

The Borel–Kolmogorov paradox

Slides from a short talk explaining the Borel–Kolmogorov paradox and alluding to possible pitfalls in probabilistic modelling. The slides are partly based on Jaynes, E.T. (2003) "Probability Theory: The Logic of Science".

Yarin Gal

Short talk, 2014

[Presentation]
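The classic sphere example can be checked numerically. A small Monte Carlo sketch, assuming points uniform on the unit sphere: a thin band around the equator and a thin wedge around a meridian both shrink to a great circle, yet position along the circle comes out uniform in the first case and weighted by cos(latitude) in the second. All names are illustrative.

```python
import numpy as np


def borel_kolmogorov_demo(n=2_000_000, eps=0.01, seed=0):
    """Condition uniform points on a sphere on two different shrinking sets around a great circle."""
    rng = np.random.default_rng(seed)
    # Uniform on the unit sphere: height z uniform on [-1, 1], longitude uniform.
    lat = np.arcsin(rng.uniform(-1.0, 1.0, size=n))
    lon = rng.uniform(-np.pi, np.pi, size=n)

    # (1) Thin band |lat| < eps around the equator; position along the
    #     great circle is the longitude. Keep the half-circle |lon| < pi/2.
    pos_equator = lon[(np.abs(lat) < eps) & (np.abs(lon) < np.pi / 2)]
    # (2) Thin wedge |lon| < eps around the meridian lon = 0; position along
    #     this great half-circle is the latitude in [-pi/2, pi/2].
    pos_meridian = lat[np.abs(lon) < eps]

    # Same question in both cases: what fraction lies within pi/4 of the
    # middle of the half-circle?
    frac_equator = np.mean(np.abs(pos_equator) < np.pi / 4)
    frac_meridian = np.mean(np.abs(pos_meridian) < np.pi / 4)
    return frac_equator, frac_meridian


frac_equator, frac_meridian = borel_kolmogorov_demo()
```

The two fractions should come out near 0.5 and near sin(pi/4), about 0.71, respectively, up to Monte Carlo error. Shrinking `eps` does not reconcile them: the answer depends on the limiting sequence of events, which is why conditioning on an event of probability zero has to specify that limit.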

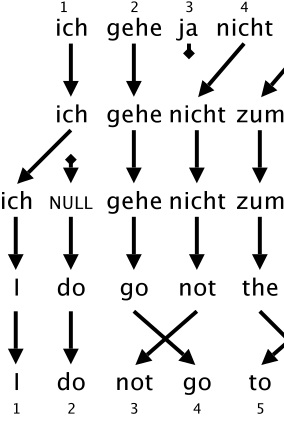

A Systematic Bayesian Treatment of the IBM Alignment Models

We used a nonparametric process, the hierarchical Pitman–Yor process, in models that align words between pairs of sentences. These alignment models are at the core of all statistical machine translation systems. Using the process, we obtained a significant improvement in translation quality.

Yarin Gal

North American Chapter of the Association for Computational Linguistics (NAACL), 2013

[Presentation]
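For readers new to these models, a sketch of the standard maximum-likelihood EM for IBM Model 1, not the hierarchical Pitman–Yor treatment from the talk. Assumptions: no NULL word, uniform initialisation, and a hypothetical three-sentence German–English toy corpus; the function name is illustrative.

```python
from collections import defaultdict


def ibm_model1(corpus, n_iters=10):
    """EM for IBM Model 1 translation probabilities t(e | f), without a NULL word.

    corpus: list of (foreign_tokens, english_tokens) sentence pairs.
    Returns a dict mapping (e, f) to t(e | f).
    """
    e_vocab = {e for _, es in corpus for e in es}
    t = defaultdict(lambda: 1.0 / len(e_vocab))   # uniform initialisation
    for _ in range(n_iters):
        count = defaultdict(float)   # expected counts c(e, f)
        total = defaultdict(float)   # expected counts c(f)
        for fs, es in corpus:
            for e in es:
                # E-step: posterior over which foreign word generated e.
                z = sum(t[(e, f)] for f in fs)
                for f in fs:
                    c = t[(e, f)] / z
                    count[(e, f)] += c
                    total[f] += c
        # M-step: renormalise the expected counts for each foreign word.
        t = defaultdict(float, {(e, f): c / total[f] for (e, f), c in count.items()})
    return t


corpus = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]
t = ibm_model1(corpus)
```

Because "das" co-occurs with "the" in two sentences, EM gradually attributes "the" to "das", which in turn frees "haus" to explain "house".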

Bayesian Nonparametrics in Real-World Applications: Statistical Machine Translation and Language Modelling on Big Datasets

Slides from a seminar introducing statistical machine translation and language modelling as real-world applications of Bayesian nonparametrics. We give a friendly introduction to statistical machine translation and language modelling, and then describe how recent developments in the field of Bayesian nonparametrics can be exploited for these tasks. The first part of the presentation is based on the lecture notes by Dr Phil Blunsom.

Yarin Gal

MLG Seminar, 2013

[Presentation]